6 Supervised learning

6.1 Linear regression

6.1.1 Correlation

Before we start with regression analysis, we will review the basic concept of correlation first. Correlation helps us to determine the degree to which the variation in one variable, X, is related to the variation in another variable, Y.

6.1.1.1 Correlation coefficient

The correlation coefficient summarizes the strength of the linear relationship between two metric (interval or ratio scaled) variables. Let’s consider a simple example. Say you conduct a survey to investigate the relationship between the attitude towards a city and the duration of residency. The “Attitude” variable can take values between 1 (very unfavorable) and 12 (very favorable), and the “duration of residency” is measured in years. Let’s further assume for this example that the attitude measurement represents an interval scale (although it is usually not realistic to assume that the scale points on an itemized rating scale have the same distance). To keep it simple, let’s further assume that you only asked 12 people. We can create a short data set like this:

library(psych)

attitude <- c(6, 9, 8, 3, 10, 4, 5, 2, 11, 9, 10, 2)

duration <- c(10, 12, 12, 4, 12, 6, 8, 2, 18, 9, 17,

2)

att_data <- data.frame(attitude, duration)

att_data <- att_data[order(-attitude), ]

att_data$respodentID <- c(1:12)

str(att_data)## 'data.frame': 12 obs. of 3 variables:

## $ attitude : num 11 10 10 9 9 8 6 5 4 3 ...

## $ duration : num 18 12 17 12 9 12 10 8 6 4 ...

## $ respodentID: int 1 2 3 4 5 6 7 8 9 10 ...psych::describe(att_data[, c("attitude", "duration")])## vars n mean sd median trimmed mad min max range skew kurtosis

## attitude 1 12 6.58 3.32 7.0 6.6 4.45 2 11 9 -0.14 -1.74

## duration 2 12 9.33 5.26 9.5 9.2 4.45 2 18 16 0.10 -1.27

## se

## attitude 0.96

## duration 1.52att_data## attitude duration respodentID

## 9 11 18 1

## 5 10 12 2

## 11 10 17 3

## 2 9 12 4

## 10 9 9 5

## 3 8 12 6

## 1 6 10 7

## 7 5 8 8

## 6 4 6 9

## 4 3 4 10

## 8 2 2 11

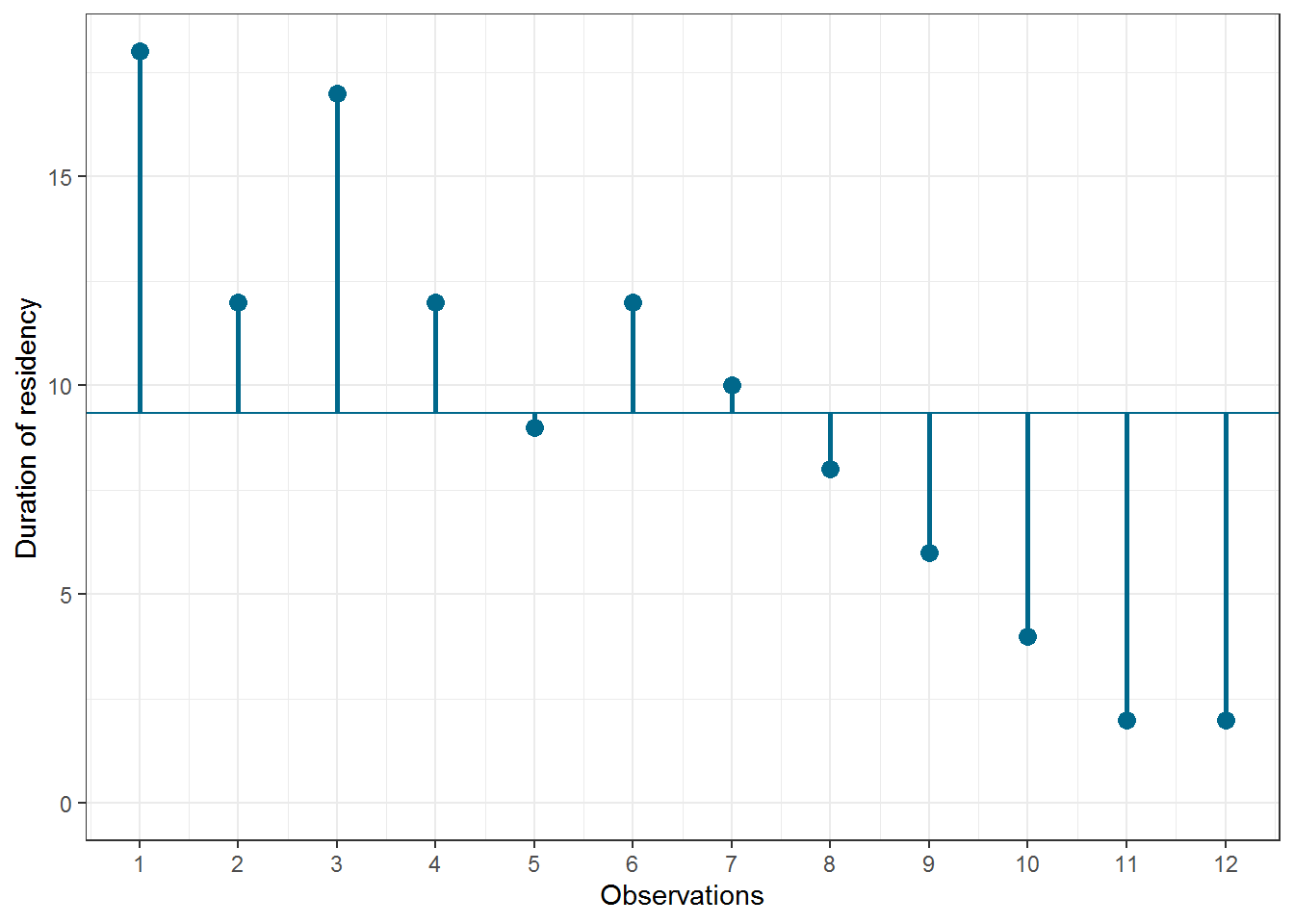

## 12 2 2 12Let’s look at the data first. The following graph shows the individual data points for the “duration of residency”” variable, where the y-axis shows the duration of residency in years and the x-axis shows the respondent ID. The blue horizontal line represents the mean of the variable (9.33) and the vertical lines show the distance of the individual data points from the mean.

Figure 1.3: Scores for duration of residency variable

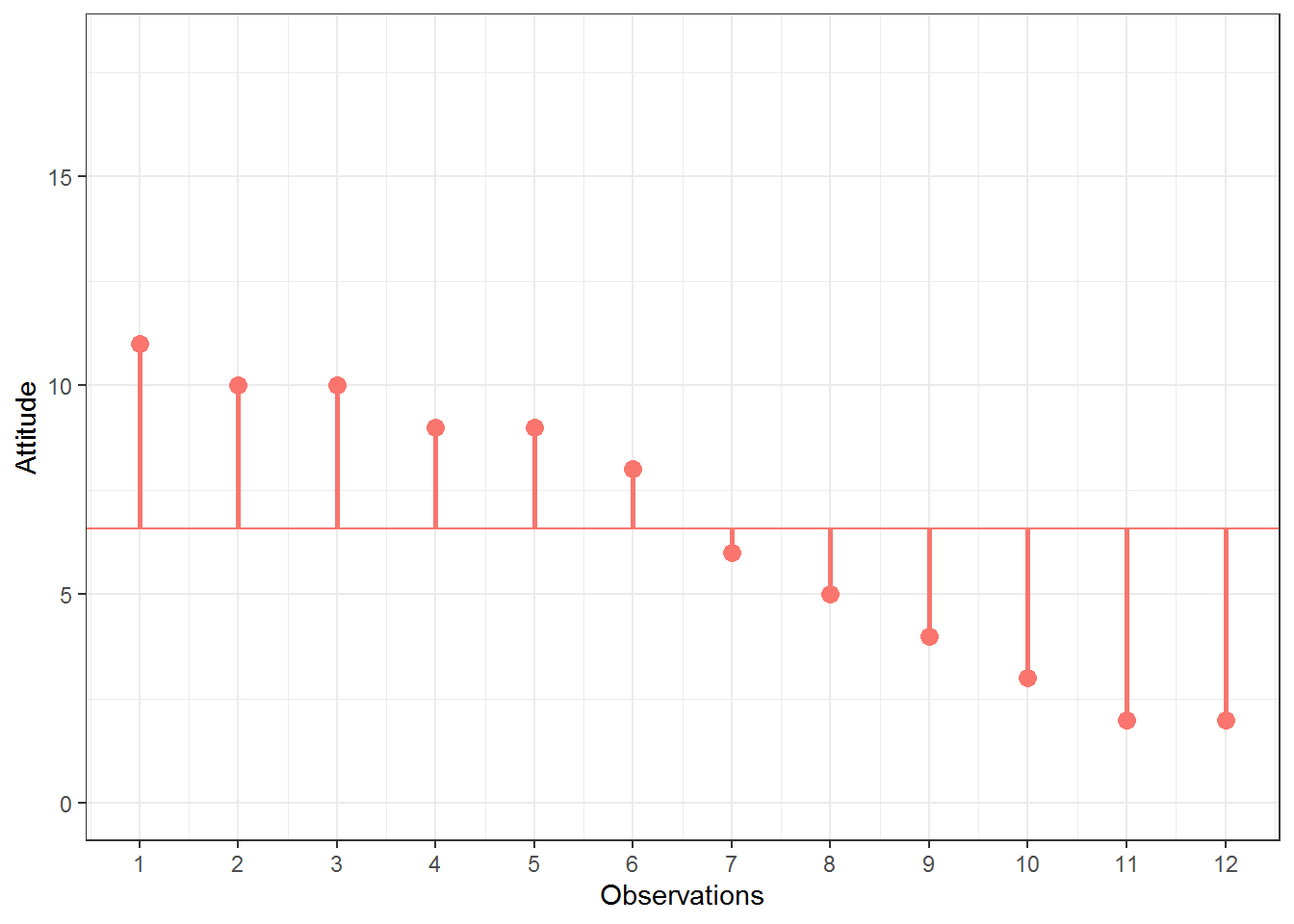

You can see that there are some respondents that have been living in the city longer than average and some respondents that have been living in the city shorter than average. Let’s do the same for the second variable (“Attitude”). Again, the y-axis shows the observed scores for this variable and the x-axis shows the respondent ID.

Figure 1.4: Scores for attitude variable

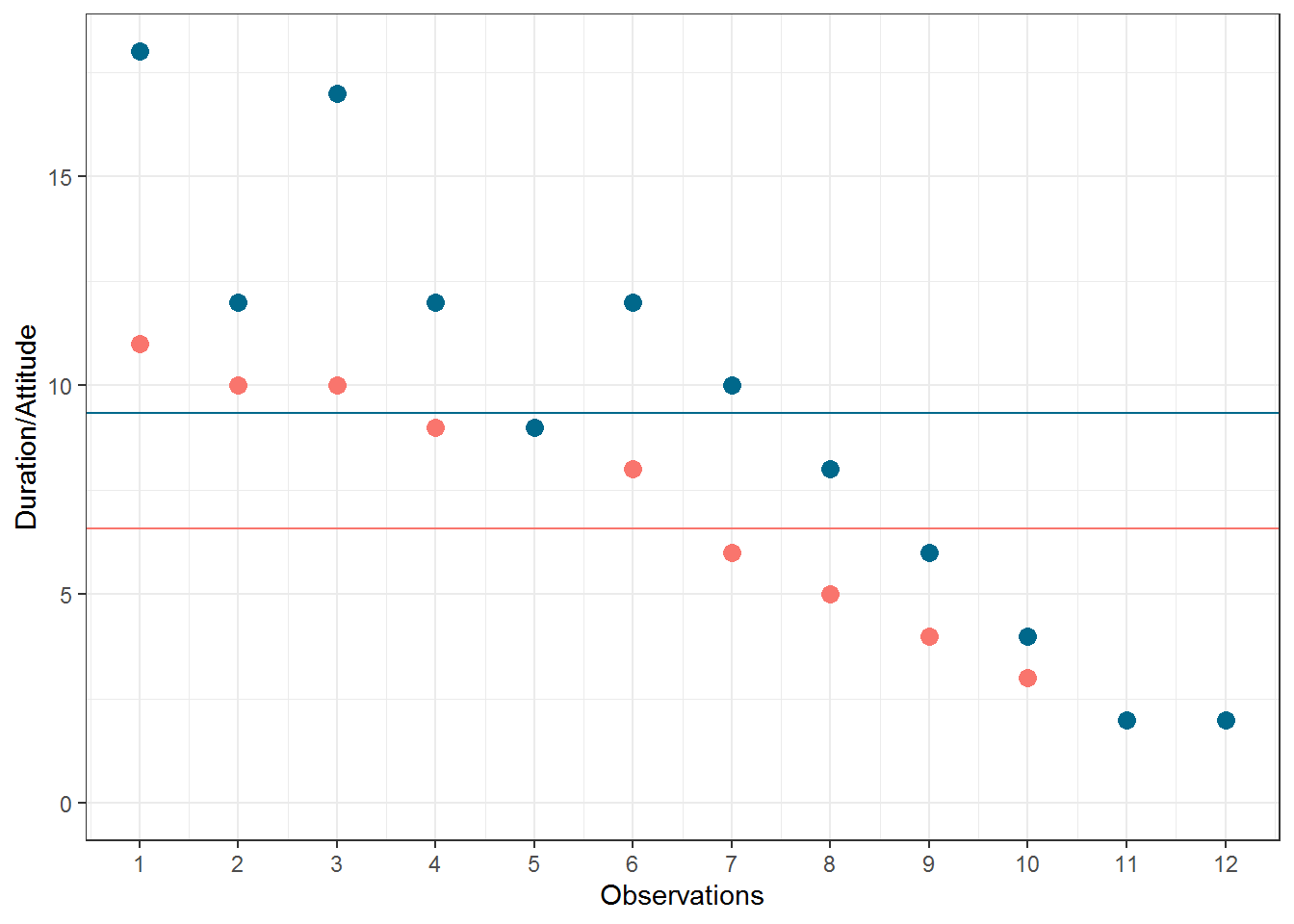

Again, we can see that some respondents have an above average attitude towards the city (more favorable) and some respondents have a below average attitude towards the city. Let’s combine both variables in one graph now to see if there is some co-movement:

Figure 5.1: Scores for attitude and duration of residency variables

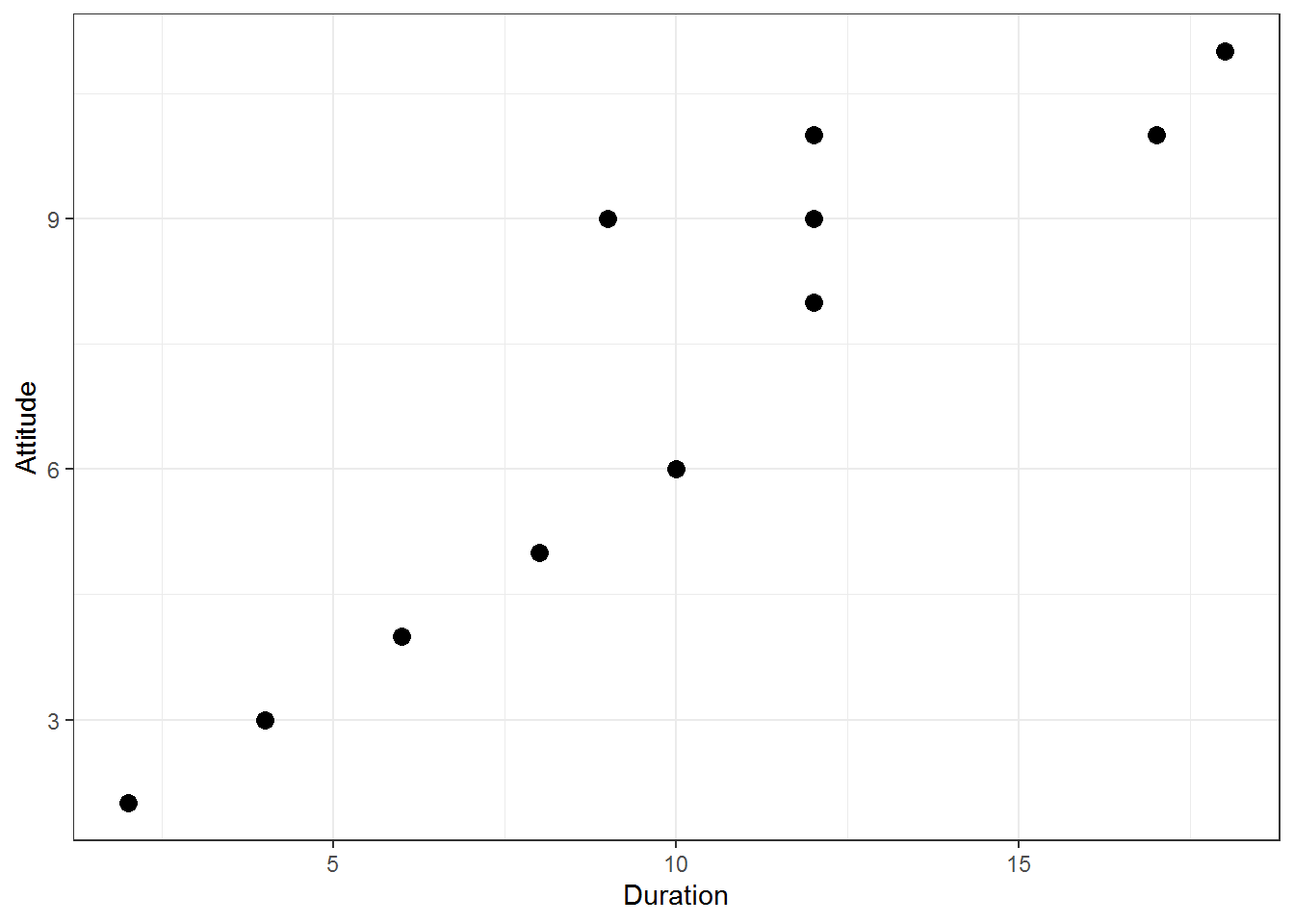

We can see that there is indeed some co-movement here. The variables covary because respondents who have an above (below) average attitude towards the city also appear to have been living in the city for an above (below) average amount of time and vice versa. Correlation helps us to quantify this relationship. Before you proceed to compute the correlation coefficient, you should first look at the data. We usually use a scatterplot to visualize the relationship between two metric variables:

Figure 1.5: Scatterplot for durationand attitute variables

How can we compute the correlation coefficient? Remember that the variance measures the average deviation from the mean of a variable:

\[\begin{equation} \begin{split} s_x^2&=\frac{\sum_{i=1}^{N} (X_i-\overline{X})^2}{N-1} \\ &= \frac{\sum_{i=1}^{N} (X_i-\overline{X})*(X_i-\overline{X})}{N-1} \end{split} \tag{6.1} \end{equation}\]

When we consider two variables, we multiply the deviation for one variable by the respective deviation for the second variable:

\((X_i-\overline{X})*(Y_i-\overline{Y})\)

This is called the cross-product deviation. Then we sum the cross-product deviations:

\(\sum_{i=1}^{N}(X_i-\overline{X})*(Y_i-\overline{Y})\)

… and compute the average of the sum of all cross-product deviations to get the covariance:

\[\begin{equation} Cov(x, y) =\frac{\sum_{i=1}^{N}(X_i-\overline{X})*(Y_i-\overline{Y})}{N-1} \tag{6.2} \end{equation}\]

You can easily compute the covariance manually as follows

x <- att_data$duration

x_bar <- mean(att_data$duration)

y <- att_data$attitude

y_bar <- mean(att_data$attitude)

N <- nrow(att_data)

cov <- (sum((x - x_bar) * (y - y_bar)))/(N - 1)

cov## [1] 16.333333Or you simply use the built-in cov() function:

cov(att_data$duration, att_data$attitude) # apply the cov function ## [1] 16.333333A positive covariance indicates that as one variable deviates from the mean, the other variable deviates in the same direction. A negative covariance indicates that as one variable deviates from the mean (e.g., increases), the other variable deviates in the opposite direction (e.g., decreases).

However, the size of the covariance depends on the scale of measurement. Larger scale units will lead to larger covariance. To overcome the problem of dependence on measurement scale, we need to convert the covariance to a standard set of units through standardization by dividing the covariance by the standard deviation (similar to how we compute z-scores).

With two variables, there are two standard deviations. We simply multiply the two standard deviations. We then divide the covariance by the product of the two standard deviations to get the standardized covariance, which is known as a correlation coefficient r:

\[\begin{equation} r=\frac{Cov_{xy}}{s_x*s_y} \tag{6.3} \end{equation}\]

This is known as the product moment correlation (r) and it is straight-forward to compute:

x_sd <- sd(att_data$duration)

y_sd <- sd(att_data$attitude)

r <- cov/(x_sd * y_sd)

r## [1] 0.93607782Or you could just use the cor() function:

cor(att_data[, c("attitude", "duration")], method = "pearson",

use = "complete")## attitude duration

## attitude 1.00000000 0.93607782

## duration 0.93607782 1.00000000The properties of the correlation coefficient (‘r’) are:

- ranges from -1 to + 1

- +1 indicates perfect linear relationship

- -1 indicates perfect negative relationship

- 0 indicates no linear relationship

- ± .1 represents small effect

- ± .3 represents medium effect

- ± .5 represents large effect

6.1.1.2 Significance testing

How can we determine if our two variables are significantly related? To test this, we denote the population moment correlation ρ. Then we test the null of no relationship between variables:

\[H_0:\rho=0\] \[H_1:\rho\ne0\]

The test statistic is:

\[\begin{equation} t=\frac{r*\sqrt{N-2}}{\sqrt{1-r^2}} \tag{6.4} \end{equation}\]

It has a t distribution with n - 2 degrees of freedom. Then, we follow the usual procedure of calculating the test statistic and comparing the test statistic to the critical value of the underlying probability distribution. If the calculated test statistic is larger than the critical value, the null hypothesis of no relationship between X and Y is rejected.

t_calc <- r * sqrt(N - 2)/sqrt(1 - r^2) #calculated test statistic

t_calc## [1] 8.4144314df <- (N - 2) #degrees of freedom

t_crit <- qt(0.975, df) #critical value

t_crit## [1] 2.2281389pt(q = t_calc, df = df, lower.tail = F) * 2 #p-value ## [1] 0.0000075451612Or you can simply use the cor.test() function, which also produces the 95% confidence interval:

cor.test(att_data$attitude, att_data$duration, alternative = "two.sided",

method = "pearson", conf.level = 0.95)##

## Pearson's product-moment correlation

##

## data: att_data$attitude and att_data$duration

## t = 8.41443, df = 10, p-value = 0.0000075452

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.78260411 0.98228152

## sample estimates:

## cor

## 0.93607782To determine the linear relationship between variables, the data only needs to be measured using interval scales. If you want to test the significance of the association, the sampling distribution needs to be normally distributed (we usually assume this when our data are normally distributed or when N is large). If parametric assumptions are violated, you should use non-parametric tests:

- Spearman’s correlation coefficient: requires ordinal data and ranks the data before applying Pearson’s equation.

- Kendall’s tau: use when N is small or the number of tied ranks is large.

cor.test(att_data$attitude, att_data$duration, alternative = "two.sided",

method = "spearman", conf.level = 0.95)##

## Spearman's rank correlation rho

##

## data: att_data$attitude and att_data$duration

## S = 14.1969, p-value = 0.0000021833

## alternative hypothesis: true rho is not equal to 0

## sample estimates:

## rho

## 0.95036059cor.test(att_data$attitude, att_data$duration, alternative = "two.sided",

method = "kendall", conf.level = 0.95)##

## Kendall's rank correlation tau

##

## data: att_data$attitude and att_data$duration

## z = 3.90948, p-value = 0.000092496

## alternative hypothesis: true tau is not equal to 0

## sample estimates:

## tau

## 0.89602867Report the results:

A Pearson product-moment correlation coefficient was computed to assess the relationship between the duration of residence in a city and the attitude toward the city. There was a positive correlation between the two variables, r = 0.936, n = 12, p < 0.05. A scatterplot summarizes the results (Figure XY).

A note on the interpretation of correlation coefficients:

As we have already seen in chapter 1, correlation coefficients give no indication of the direction of causality. In our example, we can conclude that the attitude toward the city is more positive as the years of residence increases. However, we cannot say that the years of residence cause the attitudes to be more positive. There are two main reasons for caution when interpreting correlations:

- Third-variable problem: there may be other unobserved factors that affect both the ‘attitude towards a city’ and the ‘duration of residency’ variables

- Direction of causality: Correlations say nothing about which variable causes the other to change (reverse causality: attitudes may just as well cause the years of residence variable).

6.1.2 Regression analysis

Correlations measure relationships between variables (i.e., how much two variables covary). Using regression analysis we can predict the outcome of a dependent variable (Y) from one or more independent variables (X). For example, we could be interested in how many products will we will sell if we increase the advertising expenditures by 1000 Euros? In regression analysis, we fit a model to our data and use it to predict the values of the dependent variable from one predictor variable (bivariate regression) or several predictor variables (multiple regression). The following table shows a comparison of correlation and regression analysis:

| Correlation | Regression | |

|---|---|---|

| Estimated coefficient | Coefficient of correlation (bounded between -1 and +1) | Regression coefficient (not bounded a priori) |

| Interpretation | Linear association between two variables; Association is bidirectional | (Linear) relation between one or more independent variables and dependent variable; Relation is directional |

| Role of theory | Theory neither required nor testable | Theory required and testable |

6.1.2.1 Simple linear regression

In simple linear regression, we assess the relationship between one dependent (regressand) and one independent (regressor) variable. The goal is to fit a line through a scatterplot of observations in order to find the line that best describes the data (scatterplot).

Suppose you are a marketing research analyst at a music label and your task is to suggest, on the basis of historical data, a marketing plan for the next year that will maximize product sales. The data set that is available to you includes information on the sales of music downloads (thousands of units), advertising expenditures (in Euros), the number of radio plays an artist received per week (airplay), the number of previous releases of an artist (starpower), repertoire origin (country; 0 = local, 1 = international), and genre (1 = rock, 2 = pop, 3 = electronic). Let’s load and inspect the data first:

regression <- read.table("https://raw.githubusercontent.com/IMSMWU/Teaching/master/MRDA2017/music_sales_regression.dat",

sep = "\t", header = TRUE) #read in data

regression$country <- factor(regression$country, levels = c(0:1),

labels = c("local", "international")) #convert grouping variable to factor

regression$genre <- factor(regression$genre, levels = c(1:3),

labels = c("rock", "pop", "electronic")) #convert grouping variable to factor

head(regression)psych::describe(regression) #descriptive statistics using psych## vars n mean sd median trimmed mad min max range

## sales 1 200 193.20 80.70 200.00 192.69 88.96 10.0 360.00 350.00

## adspend 2 200 614.41 485.66 531.92 560.81 489.09 9.1 2271.86 2262.76

## airplay 3 200 27.50 12.27 28.00 27.46 11.86 0.0 63.00 63.00

## starpower 4 200 6.77 1.40 7.00 6.88 1.48 1.0 10.00 9.00

## genre* 5 200 2.40 0.79 3.00 2.50 0.00 1.0 3.00 2.00

## country* 6 200 1.17 0.38 1.00 1.09 0.00 1.0 2.00 1.00

## skew kurtosis se

## sales 0.04 -0.72 5.71

## adspend 0.84 0.17 34.34

## airplay 0.06 -0.09 0.87

## starpower -1.27 3.56 0.10

## genre* -0.83 -0.91 0.06

## country* 1.74 1.05 0.03As stated above, regression analysis may be used to relate a quantitative response (“dependent variable”) to one or more predictor variables (“independent variables”). In a simple linear regression, we have one dependent and one independent variable and we regress the dependent variable on the independent variable.

Here are a few important questions that we might seek to address based on the data:

- Is there a relationship between advertising budget and sales?

- How strong is the relationship between advertising budget and sales?

- Which other variables contribute to sales?

- How accurately can we estimate the effect of each variable on sales?

- How accurately can we predict future sales?

- Is the relationship linear?

- Is there synergy among the advertising activities?

We may use linear regression to answer these questions. We will see later that the interpretation of the results strongly depends on the goal of the analysis - whether you would like to simply predict an outcome variable or you would like to explain the causal effect of the independent variable on the dependent variable (see chapter 1). Let’s start with the first question and investigate the relationship between advertising and sales.

6.1.2.1.1 Estimating the coefficients

A simple linear regression model only has one predictor and can be written as:

\[\begin{equation} Y=\beta_0+\beta_1X+\epsilon \tag{6.5} \end{equation}\]

In our specific context, let’s consider only the influence of advertising on sales for now:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\epsilon \tag{6.6} \end{equation}\]

The word “adspend” represents data on advertising expenditures that we have observed and β1 (the “slope”“) represents the unknown relationship between advertising expenditures and sales. It tells you by how much sales will increase for an additional Euro spent on advertising. β0 (the”intercept”) is the number of sales we would expect if no money is spent on advertising. Together, β0 and β1 represent the model coefficients or parameters. The error term (ε) captures everything that we miss by using our model, including, (1) misspecifications (the true relationship might not be linear), (2) omitted variables (other variables might drive sales), and (3) measurement error (our measurement of the variables might be imperfect).

Once we have used our training data to produce estimates for the model coefficients, we can predict future sales on the basis of a particular value of advertising expenditures by computing:

\[\begin{equation} \hat{Sales}=\hat{\beta_0}+\hat{\beta_1}*adspend \tag{6.7} \end{equation}\]

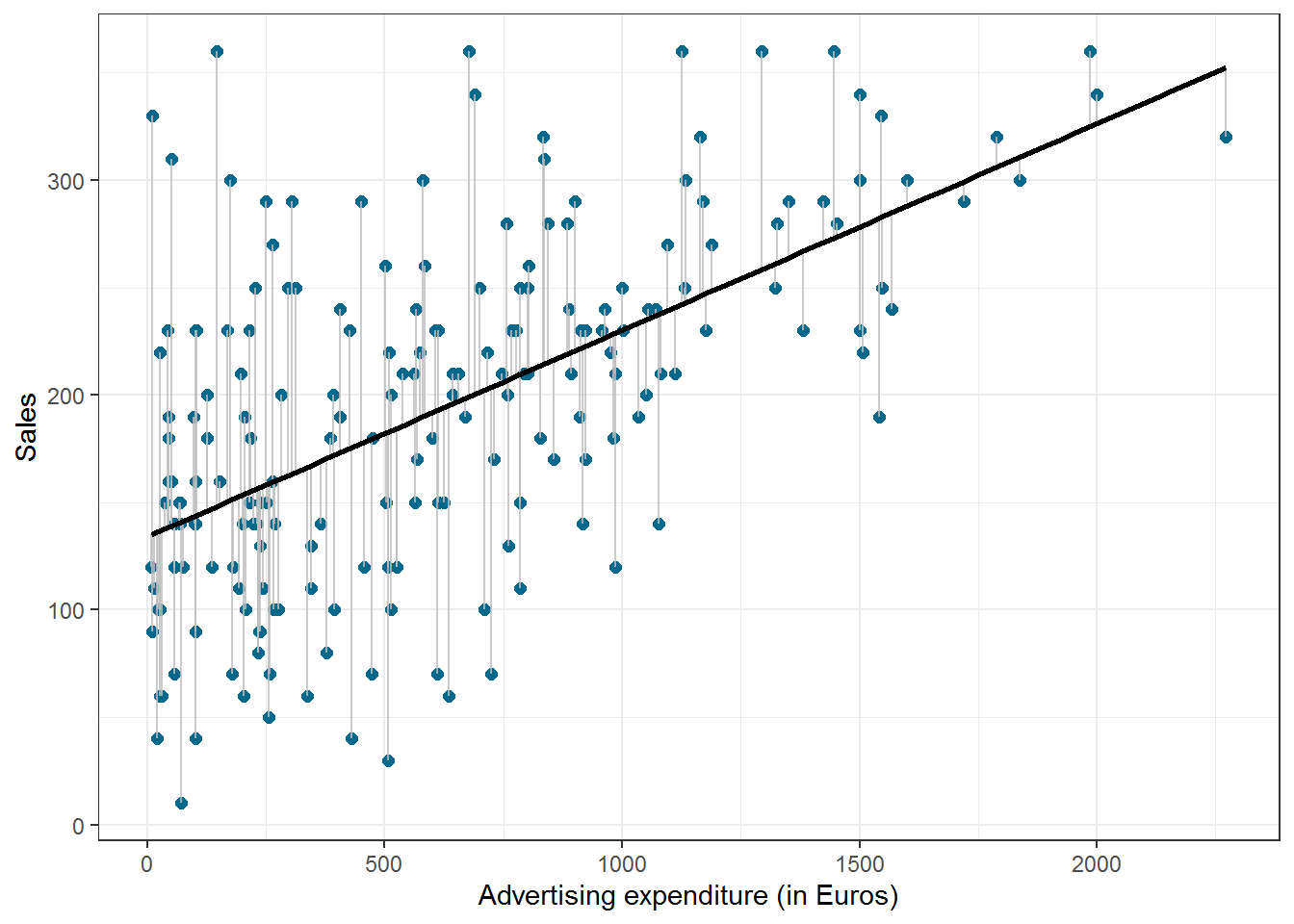

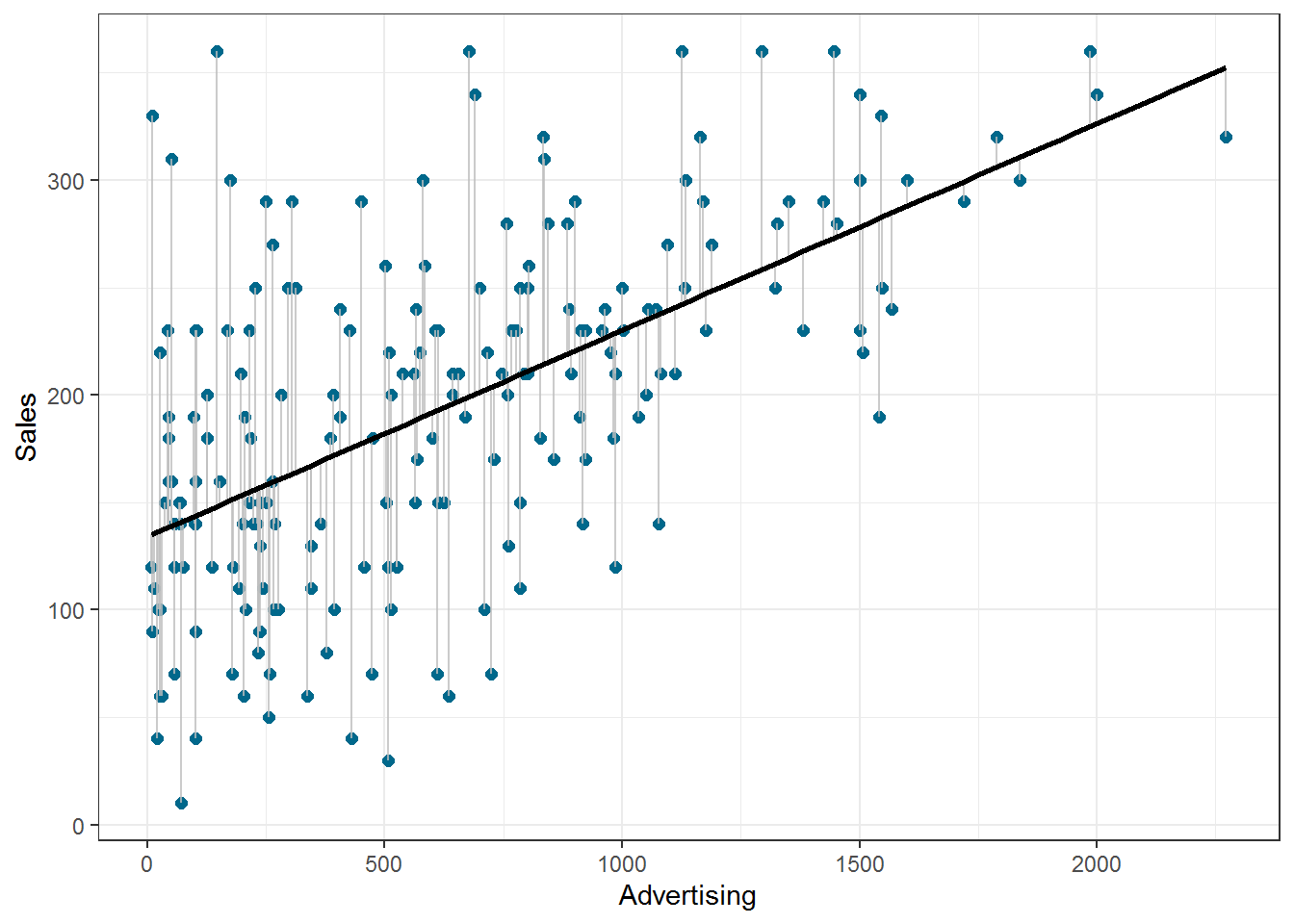

We use the hat symbol, ^, to denote the estimated value for an unknown parameter or coefficient, or to denote the predicted value of the response (sales). In practice, β0 and β1 are unknown and must be estimated from the data to make predictions. In the case of our advertising example, the data set consists of the advertising budget and product sales of 200 music songs (n = 200). Our goal is to obtain coefficient estimates such that the linear model fits the available data well. In other words, we fit a line through the scatterplot of observations and try to find the line that best describes the data. The following graph shows the scatterplot for our data, where the black line shows the regression line. The grey vertical lines shows the difference between the predicted values (the regression line) and the observed values. This difference is referred to as the residuals (“e”).

Figure 1.14: Ordinary least squares (OLS)

The estimation of the regression function is based on the idea of the method of least squares (OLS = ordinary least squares). The first step is to calculate the residuals by subtracting the observed values from the predicted values.

\(e_i = Y_i-(\beta_0+\beta_1X_i)\)

This difference is then minimized by minimizing the sum of the squared residuals:

\[\begin{equation} \sum_{i=1}^{N} e_i^2= \sum_{i=1}^{N} [Y_i-(\beta_0+\beta_1X_i)]^2\rightarrow min! \tag{6.8} \end{equation}\]

ei: Residuals (i = 1,2,…,N)

Yi: Values of the dependent variable (i = 1,2,…,N)

β0: Intercept

β1: Regression coefficient / slope parameters

Xni: Values of the nth independent variables and the ith observation

N: Number of observations

This is also referred to as the residual sum of squares (RSS), which you may still remember from the previous chapter on ANOVA. Now we need to choose the values for β0 and β1 that minimize RSS. So how can we derive these values for the regression coefficient? The equation for β1 is given by:

\[\begin{equation} \hat{\beta_1}=\frac{COV_{XY}}{s_x^2} \tag{6.9} \end{equation}\]

The exact mathematical derivation of this formula is beyond the scope of this script, but the intuition is to calculate the first derivative of the squared residuals with respect to β1 and set it to zero, thereby finding the β1 that minimizes the term. Using the above formula, you can easily compute β1 using the following code:

cov_y_x <- cov(regression$adspend, regression$sales)

cov_y_x## [1] 22672.016var_x <- var(regression$adspend)

var_x## [1] 235860.98beta_1 <- cov_y_x/var_x

beta_1## [1] 0.096124486The interpretation of β1 is as follows:

For every extra Euro spent on advertising, sales can be expected to increase by 0.096 units. Or, in other words, if we increase our marketing budget by 1,000 Euros, sales can be expected to increase by 96 units.

Using the estimated coefficient for β1, it is easy to compute β0 (the intercept) as follows:

\[\begin{equation} \hat{\beta_0}=\overline{Y}-\hat{\beta_1}\overline{X} \tag{6.10} \end{equation}\]

The R code for this is:

beta_0 <- mean(regression$sales) - beta_1 * mean(regression$adspend)

beta_0## [1] 134.13994The interpretation of β0 is as follows:

If we spend no money on advertising, we would expect to sell 134.14 units.

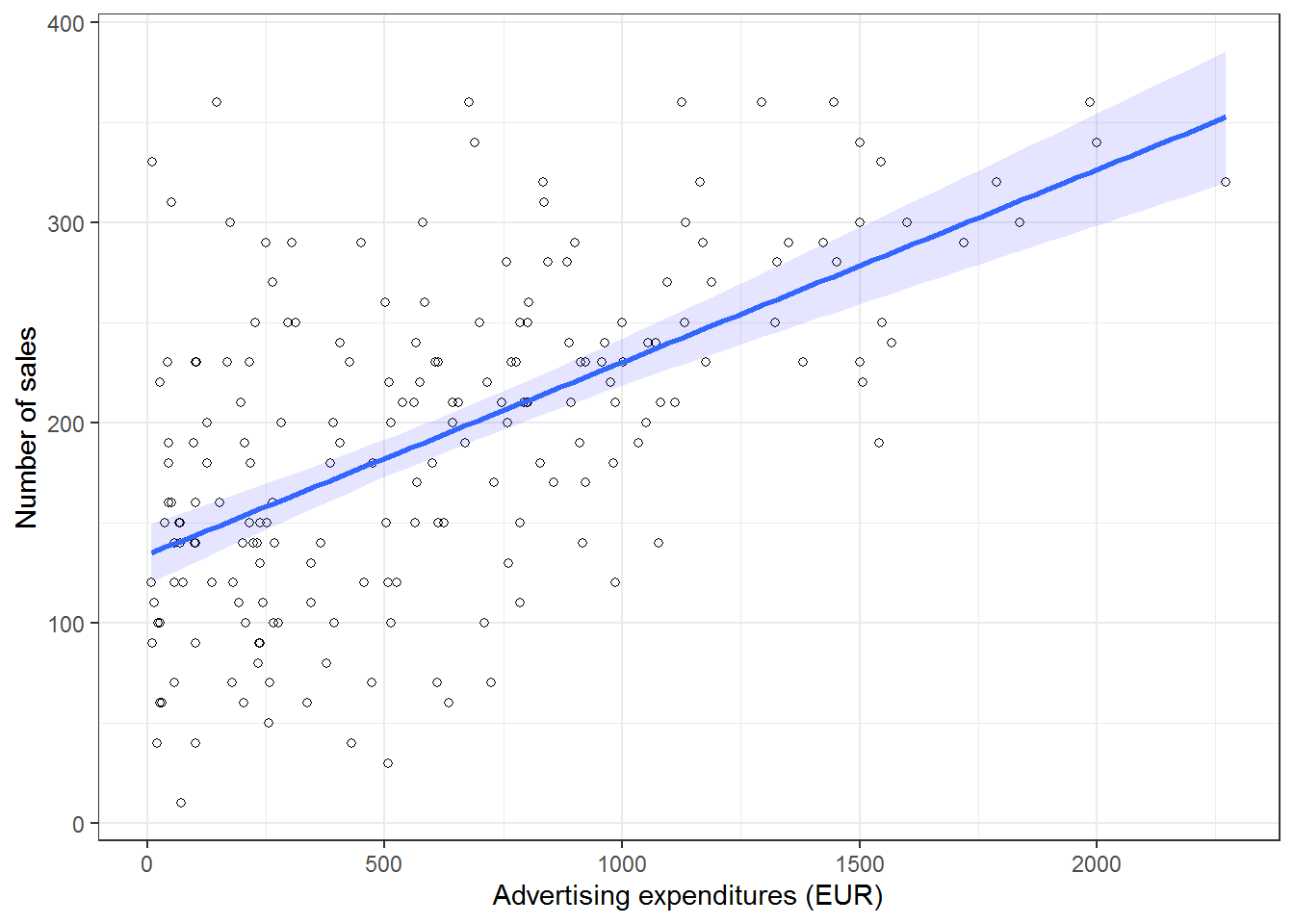

You may also verify this based on a scatterplot of the data. The following plot shows the scatterplot including the regression line, which is estimated using OLS.

ggplot(regression, mapping = aes(adspend, sales)) +

geom_point(shape = 1) + geom_smooth(method = "lm",

fill = "blue", alpha = 0.1) + labs(x = "Advertising expenditures (EUR)",

y = "Number of sales") + theme_bw()

Figure 1.17: Scatterplot

You can see that the regression line intersects with the y-axis at 134.14, which corresponds to the expected sales level when advertising expenditure (on the x-axis) is zero (i.e., the intercept β0). The slope coefficient (β1) tells you by how much sales (on the y-axis) would increase if advertising expenditures (on the x-axis) are increased by one unit.

6.1.2.1.2 Significance testing

In a next step, we assess if the effect of advertising on sales is statistically significant. This means that we test the null hypothesis H0: “There is no relationship between advertising and sales” versus the alternative hypothesis H1: “The is some relationship between advertising and sales”. Or, to state this formally:

\[H_0:\beta_1=0\] \[H_1:\beta_1\ne0\]

How can we test if the effect is statistically significant? Recall the generalized equation to derive a test statistic:

\[\begin{equation} test\ statistic = \frac{effect}{error} \tag{6.11} \end{equation}\]

The effect is given by the β1 coefficient in this case. To compute the test statistic, we need to come up with a measure of uncertainty around this estimate (the error). This is because we use information from a sample to estimate the least squares line to make inferences regarding the regression line in the entire population. Since we only have access to one sample, the regression line will be slightly different every time we take a different sample from the population. This is sampling variation and it is perfectly normal! It just means that we need to take into account the uncertainty around the estimate, which is achieved by the standard error. Thus, the test statistic for our hypothesis is given by:

\[\begin{equation} t = \frac{\hat{\beta_1}}{SE(\hat{\beta_1})} \tag{6.12} \end{equation}\]

After calculating the test statistic, we compare its value to the values that we would expect to find if there was no effect based on the t-distribution. In a regression context, the degrees of freedom are given by N - p - 1 where N is the sample size and p is the number of predictors. In our case, we have 200 observations and one predictor. Thus, the degrees of freedom is 200 - 1 - 1 = 198. In the regression output below, R provides the exact probability of observing a t value of this magnitude (or larger) if the null hypothesis was true. This probability - as we already saw in chapter 6 - is the p-value. A small p-value indicates that it is unlikely to observe such a substantial association between the predictor and the outcome variable due to chance in the absence of any real association between the predictor and the outcome.

To estimate the regression model in R, you can use the lm() function. Within the function, you first specify the dependent variable (“sales”) and independent variable (“adspend”) separated by a ~ (tilde). As mentioned previously, this is known as formula notation in R. The data = regression argument specifies that the variables come from the data frame named “regression”. Strictly speaking, you use the lm() function to create an object called “simple_regression,” which holds the regression output. You can then view the results using the summary() function:

simple_regression <- lm(sales ~ adspend, data = regression) #estimate linear model

summary(simple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend, data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -152.9493 -43.7961 -0.3933 37.0404 211.8658

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 134.1399378 7.5365747 17.7985 < 0.00000000000000022 ***

## adspend 0.0961245 0.0096324 9.9793 < 0.00000000000000022 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 65.991 on 198 degrees of freedom

## Multiple R-squared: 0.33465, Adjusted R-squared: 0.33129

## F-statistic: 99.587 on 1 and 198 DF, p-value: < 0.000000000000000222Note that the estimated coefficients for β0 (134.14) and β1 (0.096) correspond to the results of our manual computation above. The associated t-values and p-values are given in the output. The t-values are larger than the critical t-values for the 95% confidence level, since the associated p-values are smaller than 0.05. In case of the coefficient for β1, this means that the probability of an association between the advertising and sales of the observed magnitude (or larger) is smaller than 0.05, if the value of β1 was, in fact, 0. This finding leads us to reject the null hypothesis of no association between advertising and sales.

The coefficients associated with the respective variables represent point estimates. To obtain a better understanding of the range of values that the coefficients could take, it is helpful to compute confidence intervals. A 95% confidence interval is defined as a range of values such that with a 95% probability, the range will contain the true unknown value of the parameter. For example, for β1, the confidence interval can be computed as.

\[\begin{equation} CI = \hat{\beta_1}\pm(t_{1-\frac{\alpha}{2}}*SE(\beta_1)) \tag{6.13} \end{equation}\]

It is easy to compute confidence intervals in R using the confint() function. You just have to provide the name of you estimated model as an argument:

confint(simple_regression)## 2.5 % 97.5 %

## (Intercept) 119.277680821 149.00219480

## adspend 0.077129291 0.11511968For our model, the 95% confidence interval for β0 is [119.28,149], and the 95% confidence interval for β1 is [0.08,0.12]. Thus, we can conclude that when we do not spend any money on advertising, sales will be somewhere between 119 and 149 units on average. In addition, for each increase in advertising expenditures by one Euro, there will be an average increase in sales of between 0.08 and 0.12. If you revisit the graphic depiction of the regression model above, the uncertainty regarding the intercept and slope parameters can be seen in the confidence bounds (blue area) around the regression line.

6.1.2.1.3 Assessing model fit

Once we have rejected the null hypothesis in favor of the alternative hypothesis, the next step is to investigate how well the model represents (“fits”) the data. How can we assess the model fit?

- First, we calculate the fit of the most basic model (i.e., the mean)

- Then, we calculate the fit of the best model (i.e., the regression model)

- A good model should fit the data significantly better than the basic model

- R2: Represents the percentage of the variation in the outcome that can be explained by the model

- The F-ratio measures how much the model has improved the prediction of the outcome compared to the level of inaccuracy in the model

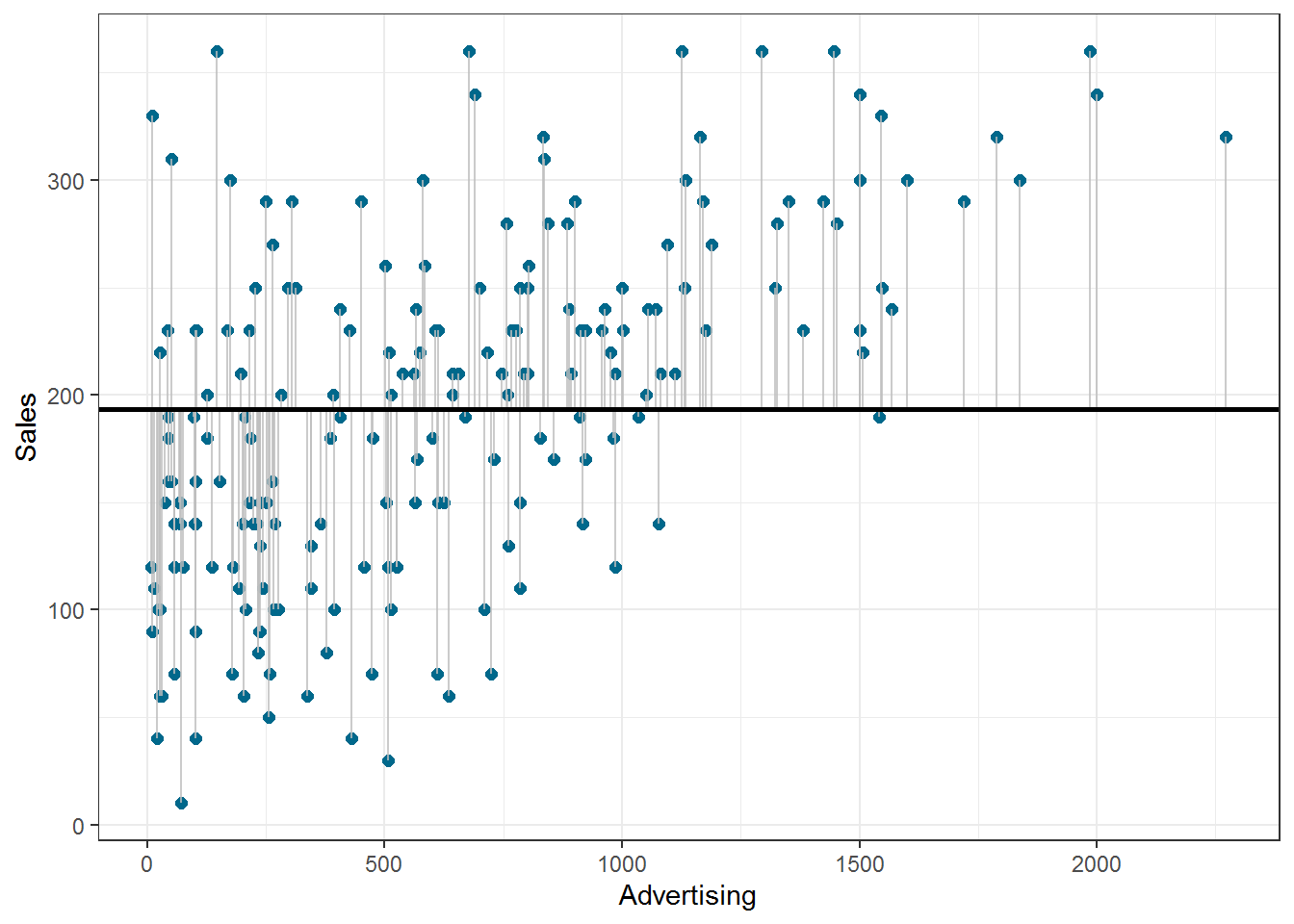

Similar to ANOVA, the calculation of model fit statistics relies on estimating the different sum of squares values. SST is the difference between the observed data and the mean value of Y (aka. total variation). In the absence of any other information, the mean value of Y (\(\overline{Y}\)) represents the best guess on where a particular observation \(Y_{i}\) at a given level of advertising will fall:

\[\begin{equation} SS_T= \sum_{i=1}^{N} (Y_i-\overline{Y})^2 \tag{6.14} \end{equation}\]

The following graph shows the total sum of squares:

Figure 1.20: Total sum of squares

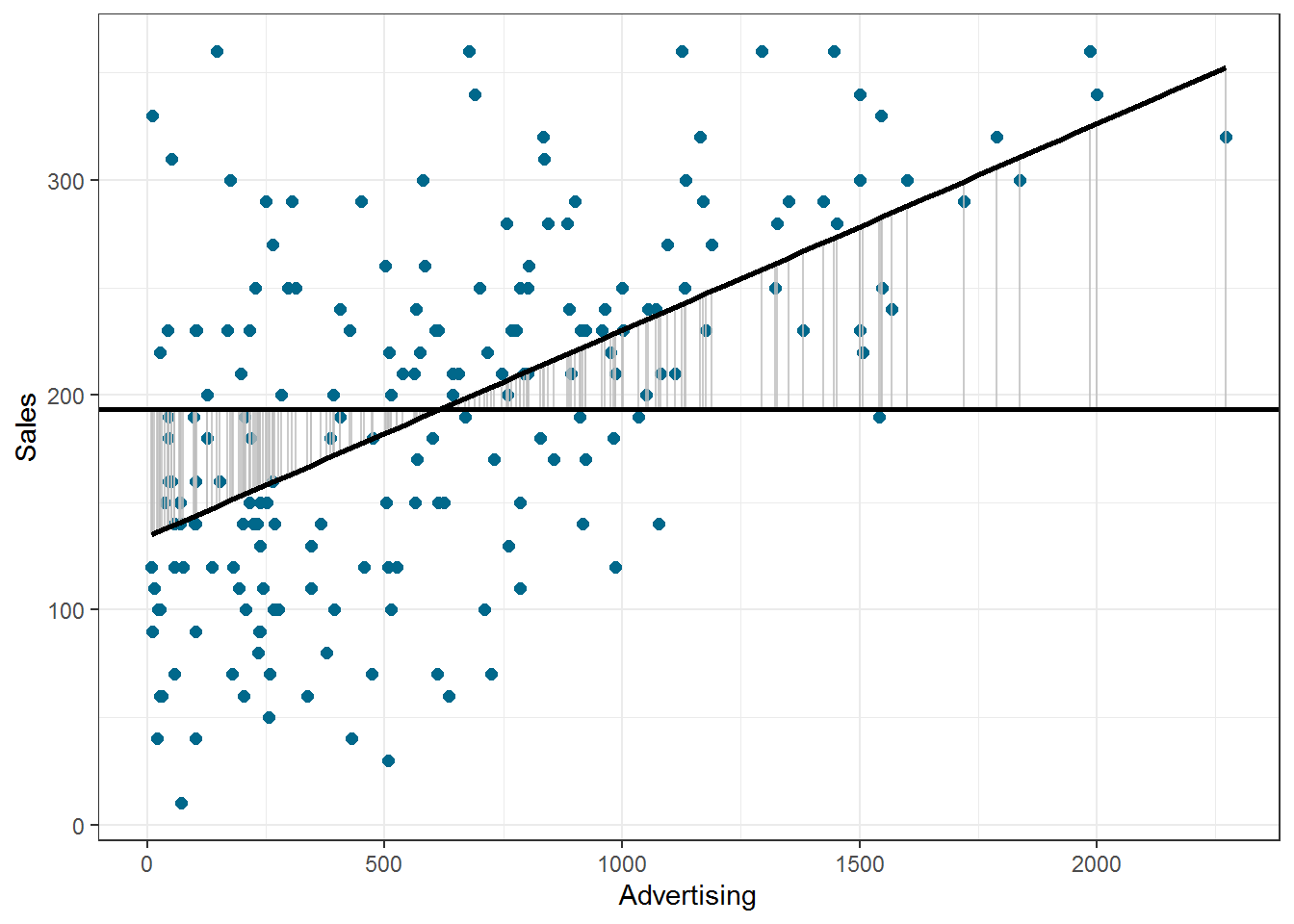

Based on our linear model, the best guess about the sales level at a given level of advertising is the predicted value \(\hat{Y}_i\). The model sum of squares (SSM) therefore has the mathematical representation:

\[\begin{equation} SS_M= \sum_{i=1}^{N} (\hat{Y}_i-\overline{Y})^2 \tag{6.15} \end{equation}\]

The model sum of squares represents the improvement in prediction resulting from using the regression model rather than the mean of the data. The following graph shows the model sum of squares for our example:

Figure 1.21: Ordinary least squares (OLS)

The residual sum of squares (SSR) is the difference between the observed data points (\(Y_{i}\)) and the predicted values along the regression line (\(\hat{Y}_{i}\)), i.e., the variation not explained by the model.

\[\begin{equation} SS_R= \sum_{i=1}^{N} ({Y}_{i}-\hat{Y}_{i})^2 \tag{6.16} \end{equation}\]

The following graph shows the residual sum of squares for our example:

Figure 1.22: Ordinary least squares (OLS)

Based on these statistics, we can determine have well the model fits the data as we will see next.

R-squared

The R2 statistic represents the proportion of variance that is explained by the model and is computed as:

\[\begin{equation} R^2= \frac{SS_M}{SS_T} \tag{6.16} \end{equation}\]

It takes values between 0 (very bad fit) and 1 (very good fit). Note that when the goal of your model is to predict future outcomes, a “too good” model fit can pose severe challenges. The reason is that the model might fit your specific sample so well, that it will only predict well within the sample but not generalize to other samples. This is called overfitting and it shows that there is a trade-off between model fit and out-of-sample predictive ability of the model, if the goal is to predict beyond the sample. We will come back to this point later in this chapter.

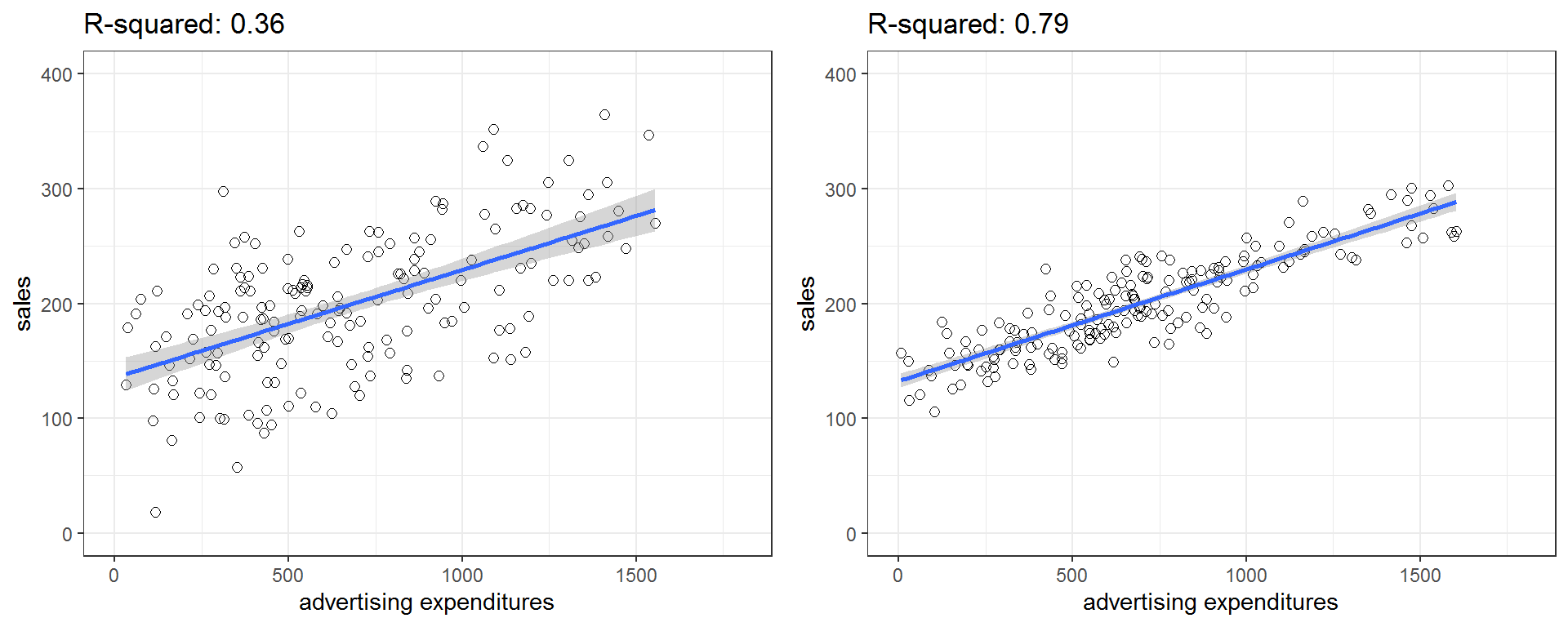

You can get a first impression of the fit of the model by inspecting the scatter plot as can be seen in the plot below. If the observations are highly dispersed around the regression line (left plot), the fit will be lower compared to a data set where the values are less dispersed (right plot).

Figure 1.23: Good vs. bad model fit

The R2 statistic is reported in the regression output (see above). However, you could also extract the relevant sum of squares statistics from the regression object using the anova() function to compute it manually:

anova(simple_regression) #anova results## Analysis of Variance Table

##

## Response: sales

## Df Sum Sq Mean Sq F value Pr(>F)

## adspend 1 433688 433688 99.6 <0.0000000000000002 ***

## Residuals 198 862264 4355

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Now we can compute R2 in the same way that we have computed Eta2 in the last section:

r2 <- anova(simple_regression)$"Sum Sq"[1]/(anova(simple_regression)$"Sum Sq"[1] +

anova(simple_regression)$"Sum Sq"[2]) #compute R2

r2## [1] 0.33Adjusted R-squared

Due to the way the R2 statistic is calculated, it will never decrease if a new explanatory variable is introduced into the model. This means that every new independent variable either doesn’t change the R2 or increases it, even if there is no real relationship between the new variable and the dependent variable. Hence, one could be tempted to just add as many variables as possible to increase the R2 and thus obtain a “better” model. However, this actually only leads to more noise and therefore a worse model.

To account for this, there exists a test statistic closely related to the R2, the adjusted R2. It can be calculated as follows:

\[\begin{equation} \overline{R^2} = 1 - (1 - R^2)\frac{n-1}{n - k - 1} \tag{6.17} \end{equation}\]

where n is the total number of observations and k is the total number of explanatory variables. The adjusted R2 is equal to or less than the regular R2 and can be negative. It will only increase if the added variable adds more explanatory power than one would expect by pure chance. Essentially, it contains a “penalty” for including unnecessary variables and therefore favors more parsimonious models. As such, it is a measure of suitability, good for comparing different models and is very useful in the model selection stage of a project. In R, the standard lm() function automatically also reports the adjusted R2 as you can see above.

F-test

Similar to the ANOVA in chapter 6, another significance test is the F-test, which tests the null hypothesis:

\[H_0:R^2=0\]

Or, to state it slightly differently:

\[H_0:\beta_1=\beta_2=\beta_3=\beta_k=0\]

This means that, similar to the ANOVA, we test whether any of the included independent variables has a significant effect on the dependent variable. So far, we have only included one independent variable, but we will extend the set of predictor variables below.

The F-test statistic is calculated as follows:

\[\begin{equation} F=\frac{\frac{SS_M}{k}}{\frac{SS_R}{(n-k-1)}}=\frac{MS_M}{MS_R} \tag{6.16} \end{equation}\]

which has a F distribution with k number of predictors and n degrees of freedom. In other words, you divide the systematic (“explained”) variation due to the predictor variables by the unsystematic (“unexplained”) variation.

The result of the F-test is provided in the regression output. However, you might manually compute the F-test using the ANOVA results from the model:

anova(simple_regression) #anova results## Analysis of Variance Table

##

## Response: sales

## Df Sum Sq Mean Sq F value Pr(>F)

## adspend 1 433688 433688 99.6 <0.0000000000000002 ***

## Residuals 198 862264 4355

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1f_calc <- anova(simple_regression)$"Mean Sq"[1]/anova(simple_regression)$"Mean Sq"[2] #compute F

f_calc## [1] 100f_crit <- qf(0.95, df1 = 1, df2 = 100) #critical value

f_crit## [1] 3.9f_calc > f_crit #test if calculated test statistic is larger than critical value## [1] TRUE6.1.2.1.4 Using the model

After fitting the model, we can use the estimated coefficients to predict sales for different values of advertising. Suppose you want to predict sales for a new product, and the company plans to spend 800 Euros on advertising. How much will it sell? You can easily compute this either by hand:

\[\hat{sales}=134.134 + 0.09612*800=211\]

… or by extracting the estimated coefficients from the model summary:

summary(simple_regression)$coefficients[1,1] + # the intercept

summary(simple_regression)$coefficients[2,1]*800 # the slope * 800## [1] 211The predicted value of the dependent variable is 211 units, i.e., the product will (on average) sell 211 units.

6.1.2.2 Multiple linear regression

Multiple linear regression is a statistical technique that simultaneously tests the relationships between two or more independent variables and an interval-scaled dependent variable. The general form of the equation is given by:

\[\begin{equation} Y=(\beta_0+\beta_1*X_1+\beta_2*X_2+\beta_n*X_n)+\epsilon \tag{6.5} \end{equation}\]

Again, we aim to find the linear combination of predictors that correlate maximally with the outcome variable. Note that if you change the composition of predictors, the partial regression coefficient of an independent variable will be different from that of the bivariate regression coefficient. This is because the regressors are usually correlated, and any variation in Y that was shared by X1 and X2 was attributed to X1. The interpretation of the partial regression coefficients is the expected change in Y when X is changed by one unit and all other predictors are held constant.

Let’s extend the previous example. Say, in addition to the influence of advertising, you are interested in estimating the influence of radio airplay on the number of album downloads. The corresponding equation would then be given by:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\beta_2*airplay+\epsilon \tag{6.6} \end{equation}\]

The words “adspend” and “airplay” represent data that we have observed on advertising expenditures and number of radio plays, and β1 and β2 represent the unknown relationship between sales and advertising expenditures and radio airplay, respectively. The corresponding coefficients tell you by how much sales will increase for an additional Euro spent on advertising (when radio airplay is held constant) and by how much sales will increase for an additional radio play (when advertising expenditures are held constant). Thus, we can make predictions about album sales based not only on advertising spending, but also on radio airplay.

With several predictors, the partitioning of sum of squares is the same as in the bivariate model, except that the model is no longer a 2-D straight line. With two predictors, the regression line becomes a 3-D regression plane. In our example:

Figure 4.1: Regression plane

Like in the bivariate case, the plane is fitted to the data with the aim to predict the observed data as good as possible. The deviation of the observations from the plane represent the residuals (the error we make in predicting the observed data from the model). Note that this is conceptually the same as in the bivariate case, except that the computation is more complex (we won’t go into details here). The model is fairly easy to plot using a 3-D scatterplot, because we only have two predictors. While multiple regression models that have more than two predictors are not as easy to visualize, you may apply the same principles when interpreting the model outcome:

- Total sum of squares (SST) is still the difference between the observed data and the mean value of Y (total variation)

- Residual sum of squares (SSR) is still the difference between the observed data and the values predicted by the model (unexplained variation)

- Model sum of squares (SSM) is still the difference between the values predicted by the model and the mean value of Y (explained variation)

- R measures the multiple correlation between the predictors and the outcome

- R2 is the amount of variation in the outcome variable explained by the model

Estimating multiple regression models is straightforward using the lm() function. You just need to separate the individual predictors on the right hand side of the equation using the + symbol. For example, the model:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\beta_2*airplay+\beta_3*starpower+\epsilon \tag{6.6} \end{equation}\]

could be estimated as follows:

multiple_regression <- lm(sales ~ adspend + airplay +

starpower, data = regression) #estimate linear model

summary(multiple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower, data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -121.32 -28.34 -0.45 28.97 144.13

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -26.61296 17.35000 -1.53 0.13

## adspend 0.08488 0.00692 12.26 < 0.0000000000000002 ***

## airplay 3.36743 0.27777 12.12 < 0.0000000000000002 ***

## starpower 11.08634 2.43785 4.55 0.0000095 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 47 on 196 degrees of freedom

## Multiple R-squared: 0.665, Adjusted R-squared: 0.66

## F-statistic: 129 on 3 and 196 DF, p-value: <0.0000000000000002The interpretation of the coefficients is as follows:

- adspend (β1): when advertising expenditures increase by 1 Euro, sales will increase by 0.085 units

- airplay (β2): when radio airplay increases by 1 play per week, sales will increase by 3.367 units

- starpower (β3): when the number of previous albums increases by 1, sales will increase by 11.086 units

The associated t-values and p-values are also given in the output. You can see that the p-values are smaller than 0.05 for all three coefficients. Hence, all effects are “significant”. This means that if the null hypothesis was true (i.e., there was no effect between the variables and sales), the probability of observing associations of the estimated magnitudes (or larger) is very small (e.g., smaller than 0.05).

Again, to get a better feeling for the range of values that the coefficients could take, it is helpful to compute confidence intervals.

confint(multiple_regression)## 2.5 % 97.5 %

## (Intercept) -60.830 7.604

## adspend 0.071 0.099

## airplay 2.820 3.915

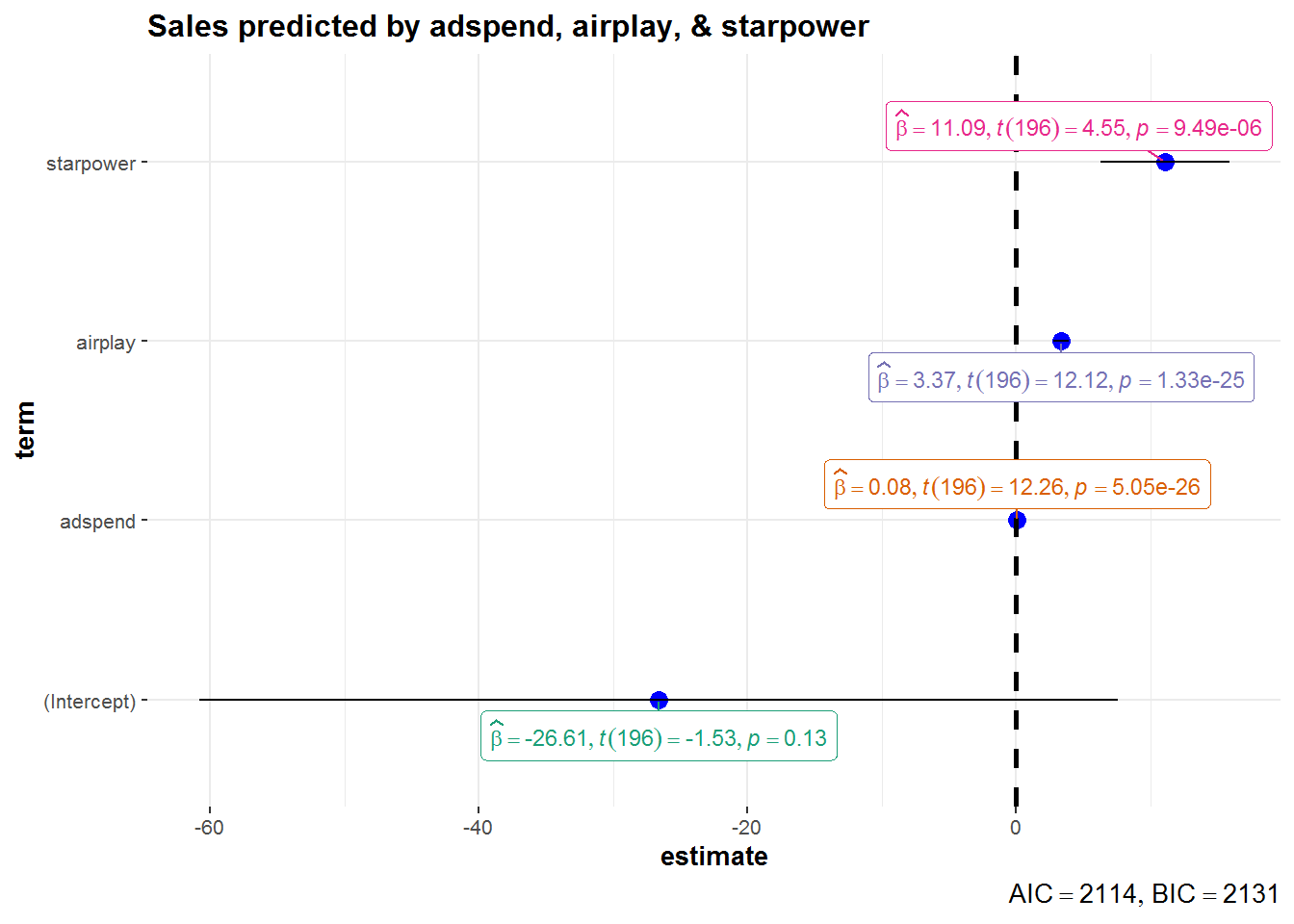

## starpower 6.279 15.894What does this tell you? Recall that a 95% confidence interval is defined as a range of values such that with a 95% probability, the range will contain the true unknown value of the parameter. For example, for β3, the confidence interval is [6.2785522,15.8941182]. Thus, although we have computed a point estimate of 11.086 for the effect of starpower on sales based on our sample, the effect might actually just as well take any other value within this range, considering the sample size and the variability in our data. You could also visualize the output from your regression model including the confidence intervals using the ggstatsplot package as follows:

library(ggstatsplot)

ggcoefstats(x = multiple_regression, title = "Sales predicted by adspend, airplay, & starpower")

Figure 4.3: Confidence intervals for regression model

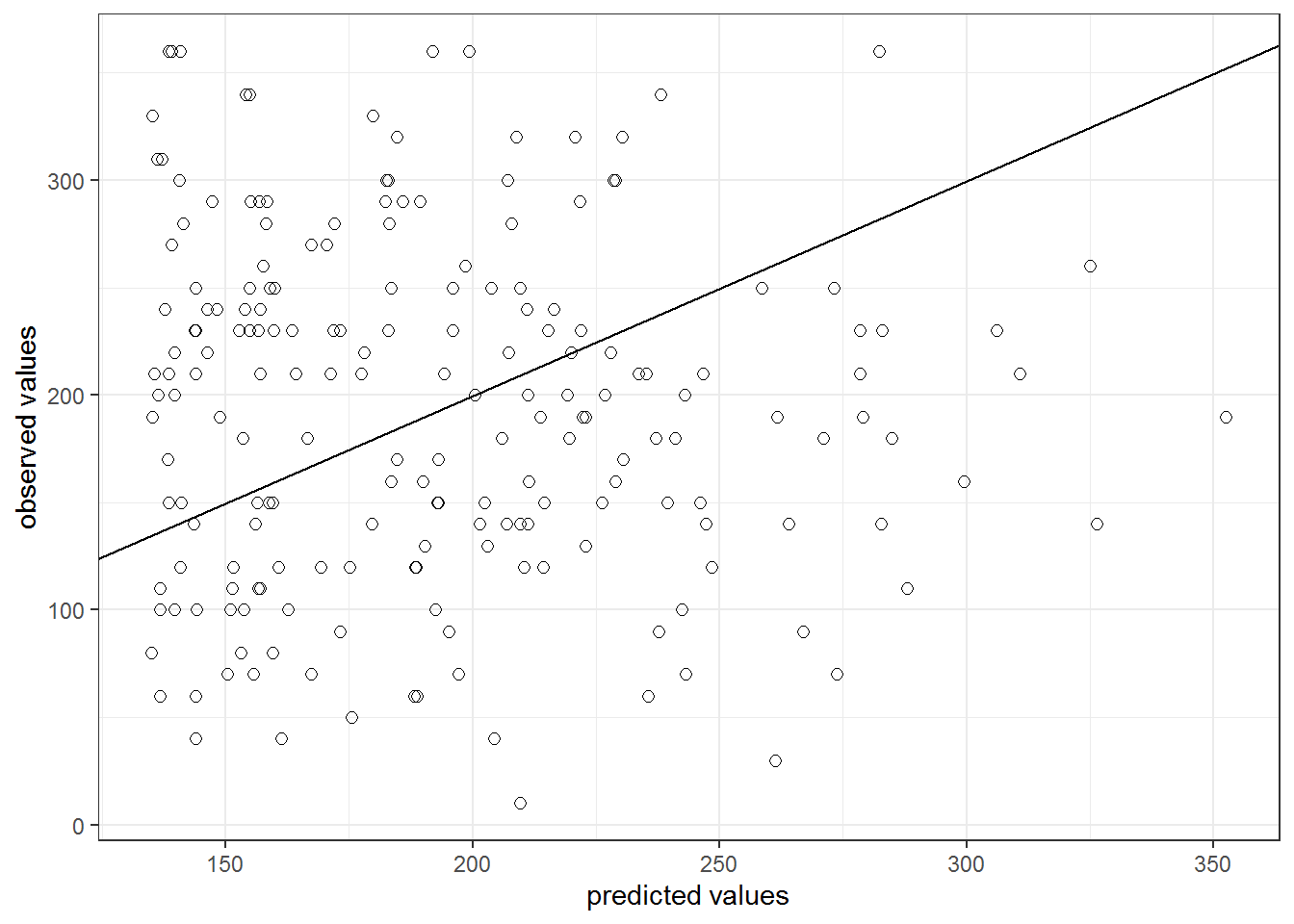

The output also tells us that 66.4667687% of the variation can be explained by our model. You may also visually inspect the fit of the model by plotting the predicted values against the observed values. We can extract the predicted values using the predict() function. So let’s create a new variable yhat, which contains those predicted values.

regression$yhat <- predict(simple_regression)We can now use this variable to plot the predicted values against the observed values. In the following plot, the model fit would be perfect if all points would fall on the diagonal line. The larger the distance between the points and the line, the worse the model fit. In other words, if all points would fall exactly on the diagonal line, the model would perfectly predict the observed values.

ggplot(regression,aes(yhat,sales)) +

geom_point(size=2,shape=1) + #Use hollow circles

scale_x_continuous(name="predicted values") +

scale_y_continuous(name="observed values") +

geom_abline(intercept = 0, slope = 1) +

theme_bw()

Figure 6.1: Model fit

Partial plots

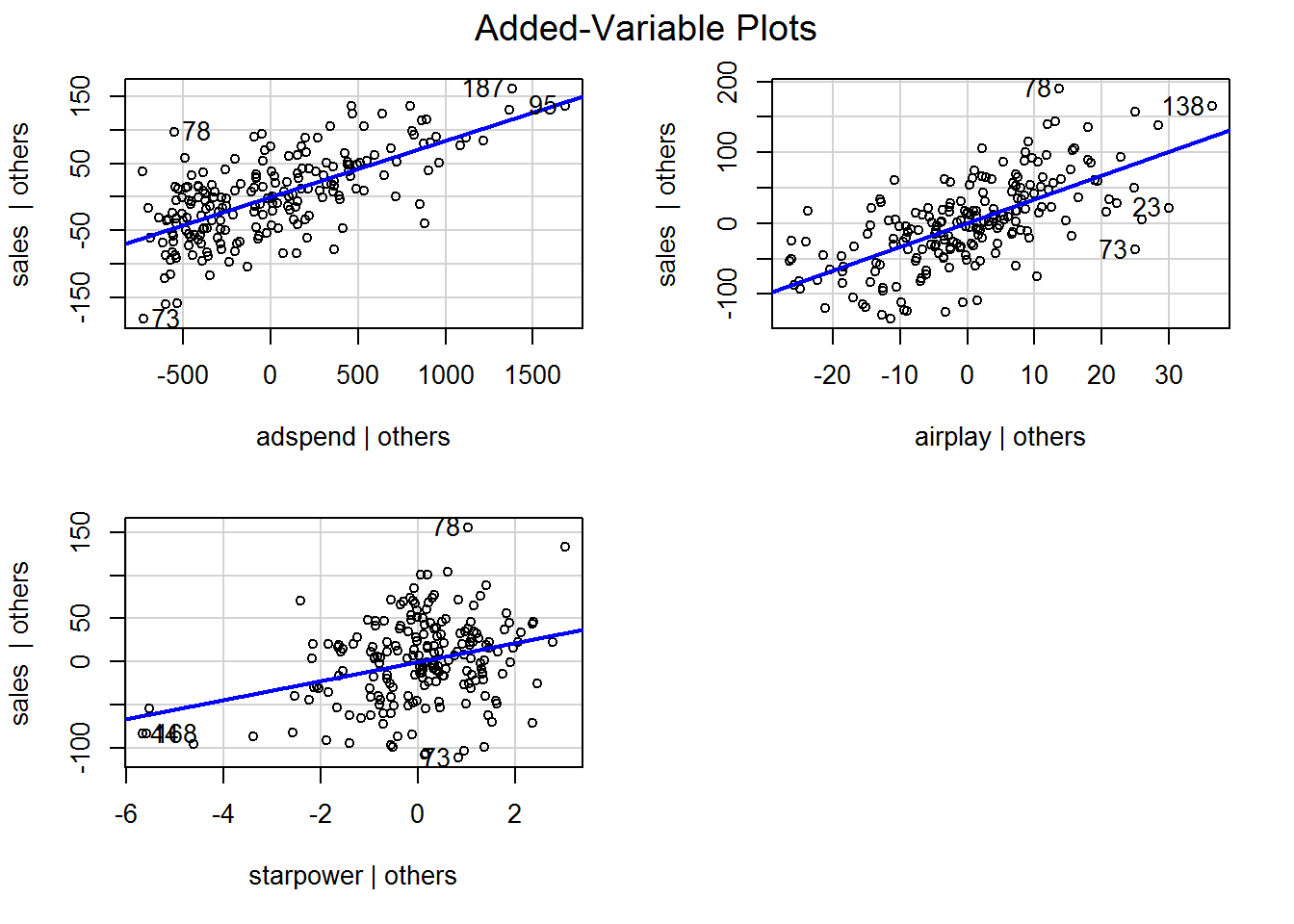

In the context of a simple linear regression (i.e., with a single independent variable), a scatter plot of the dependent variable against the independent variable provides a good indication of the nature of the relationship. If there is more than one independent variable, however, things become more complicated. The reason is that although the scatter plot still show the relationship between the two variables, it does not take into account the effect of the other independent variables in the model. Partial regression plot show the effect of adding another variable to a model that already controls for the remaining variables in the model. In other words, it is a scatterplot of the residuals of the outcome variable and each predictor when both variables are regressed separately on the remaining predictors. As an example, consider the effect of advertising expenditures on sales. In this case, the partial plot would show the effect of adding advertising expenditures as an explanatory variable while controlling for the variation that is explained by airplay and starpower in both variables (sales and advertising). Think of it as the purified relationship between advertising and sales that remains after controlling for other factors. The partial plots can easily be created using the avPlots() function from the car package:

library(car)

avPlots(multiple_regression)

Figure 6.2: Partial plots

Using the model

After fitting the model, we can use the estimated coefficients to predict sales for different values of advertising, airplay, and starpower. Suppose you would like to predict sales for a new music album with advertising expenditures of 800, airplay of 30 and starpower of 5. How much will it sell?

\[\hat{sales}=−26.61 + 0.084 * 800 + 3.367*30 + 11.08 ∗ 5= 197.74\]

… or by extracting the estimated coefficients:

summary(multiple_regression)$coefficients[1, 1] + summary(multiple_regression)$coefficients[2,

1] * 800 + summary(multiple_regression)$coefficients[3,

1] * 30 + summary(multiple_regression)$coefficients[4,

1] * 5## [1] 198The predicted value of the dependent variable is 198 units, i.e., the product will sell 198 units.

Comparing effects

Using the output from the regression model above, it is difficult to compare the effects of the independent variables because they are all measured on different scales (Euros, radio plays, releases). Standardized regression coefficients can be used to judge the relative importance of the predictor variables. Standardization is achieved by multiplying the unstandardized coefficient by the ratio of the standard deviations of the independent and dependent variables:

\[\begin{equation} B_{k}=\beta_{k} * \frac{s_{x_k}}{s_y} \tag{6.18} \end{equation}\]

Hence, the standardized coefficient will tell you by how many standard deviations the outcome will change as a result of a one standard deviation change in the predictor variable. Standardized coefficients can be easily computed using the lm.beta() function from the lm.beta package.

library(lm.beta)

lm.beta(multiple_regression)##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower, data = regression)

##

## Standardized Coefficients::

## (Intercept) adspend airplay starpower

## NA 0.51 0.51 0.19The results show that for adspend and airplay, a change by one standard deviation will result in a 0.51 standard deviation change in sales, whereas for starpower, a one standard deviation change will only lead to a 0.19 standard deviation change in sales. Hence, while the effects of adspend and airplay are comparable in magnitude, the effect of starpower is less strong.

6.1.3 Categorical predictors

6.1.3.1 Two categories

Suppose, you wish to investigate the effect of the variable “country” on sales, which is a categorical variable that can only take two levels (i.e., 0 = local artist, 1 = international artist). Categorical variables with two levels are also called binary predictors. It is straightforward to include these variables in your model as “dummy” variables. Dummy variables are factor variables that can only take two values. For our “country” variable, we can create a new predictor variable that takes the form:

\[\begin{equation} x_4 = \begin{cases} 1 & \quad \text{if } i \text{th artist is international}\\ 0 & \quad \text{if } i \text{th artist is local} \end{cases} \tag{6.19} \end{equation}\]

This new variable is then added to our regression equation from before, so that the equation becomes

\[\begin{align} Sales =\beta_0 &+\beta_1*adspend\\ &+\beta_2*airplay\\ &+\beta_3*starpower\\ &+\beta_4*international+\epsilon \end{align}\]

where “international” represents the new dummy variable and \(\beta_4\) is the coefficient associated with this variable. Estimating the model is straightforward - you just need to include the variable as an additional predictor variable. Note that the variable needs to be specified as a factor variable before including it in your model. If you haven’t converted it to a factor variable before, you could also use the wrapper function as.factor() within the equation.

multiple_regression_bin <- lm(sales ~ adspend + airplay +

starpower + country, data = regression) #estimate linear model

summary(multiple_regression_bin) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower + country,

## data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -109.20 -24.30 -1.82 29.19 156.31

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -16.40060 16.39540 -1.00 0.32

## adspend 0.08146 0.00653 12.48 < 0.0000000000000002 ***

## airplay 3.03766 0.26809 11.33 < 0.0000000000000002 ***

## starpower 10.08100 2.29546 4.39 0.00001843 ***

## countryinternational 45.67274 8.69117 5.26 0.00000039 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 44 on 195 degrees of freedom

## Multiple R-squared: 0.706, Adjusted R-squared: 0.7

## F-statistic: 117 on 4 and 195 DF, p-value: <0.0000000000000002You can see that we now have an additional coefficient in the regression output, which tells us the effect of the binary predictor. The dummy variable can generally be interpreted as the average difference in the dependent variable between the two groups (similar to a t-test), conditional on the other variables you have included in your model. In this case, the coefficient tells you the difference in sales between international and local artists, and whether this difference is significant. Specifically, it means that international artists on average sell 45.67 units more than local artists, and this difference is significant (i.e., p < 0.05).

6.1.3.2 More than two categories

Predictors with more than two categories, like our “genre”” variable, can also be included in your model. However, in this case one dummy variable cannot represent all possible values, since there are three genres (i.e., 1 = Rock, 2 = Pop, 3 = Electronic). Thus, we need to create additional dummy variables. For example, for our “genre” variable, we create two dummy variables as follows:

\[\begin{equation} x_5 = \begin{cases} 1 & \quad \text{if } i \text{th product is from Pop genre}\\ 0 & \quad \text{if } i \text{th product is from Rock genre} \end{cases} \tag{6.20} \end{equation}\]

\[\begin{equation} x_6 = \begin{cases} 1 & \quad \text{if } i \text{th product is from Electronic genre}\\ 0 & \quad \text{if } i \text{th product is from Rock genre} \end{cases} \tag{6.21} \end{equation}\]

We would then add these variables as additional predictors in the regression equation and obtain the following model

\[\begin{align} Sales =\beta_0 &+\beta_1*adspend\\ &+\beta_2*airplay\\ &+\beta_3*starpower\\ &+\beta_4*international\\ &+\beta_5*Pop\\ &+\beta_6*Electronic+\epsilon \end{align}\]

where “Pop” and “Rock” represent our new dummy variables, and \(\beta_5\) and \(\beta_6\) represent the associated regression coefficients.

The interpretation of the coefficients is as follows: \(\beta_5\) is the difference in average sales between the genres “Rock” and “Pop”, while \(\beta_6\) is the difference in average sales between the genres “Rock” and “Electro”. Note that the level for which no dummy variable is created is also referred to as the baseline. In our case, “Rock” would be the baseline genre. This means that there will always be one fewer dummy variable than the number of levels.

You don’t have to create the dummy variables manually as R will do this automatically when you add the variable to your equation:

multiple_regression <- lm(sales ~ adspend + airplay +

starpower + country + genre, data = regression) #estimate linear model

summary(multiple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower + country +

## genre, data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -116.18 -26.54 0.05 27.98 154.56

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -30.67901 16.59989 -1.85 0.06611 .

## adspend 0.07233 0.00657 11.00 < 0.0000000000000002 ***

## airplay 2.71418 0.26824 10.12 < 0.0000000000000002 ***

## starpower 10.49628 2.19380 4.78 0.0000034 ***

## countryinternational 40.87988 8.40868 4.86 0.0000024 ***

## genrepop 47.69640 10.48717 4.55 0.0000095 ***

## genreelectronic 27.62034 8.17223 3.38 0.00088 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 42 on 193 degrees of freedom

## Multiple R-squared: 0.735, Adjusted R-squared: 0.727

## F-statistic: 89.2 on 6 and 193 DF, p-value: <0.0000000000000002How can we interpret the coefficients? It is estimated based on our model that products from the “Pop” genre will on average sell 47.69 units more than products from the “Rock” genre, and that products from the “Electronic” genre will sell on average 27.62 units more than the products from the “Rock” genre. The p-value of both variables is smaller than 0.05, suggesting that there is statistical evidence for a real difference in sales between the genres.

The level of the baseline category is arbitrary. As you have seen, R simply selects the first level as the baseline. If you would like to use a different baseline category, you can use the relevel() function and set the reference category using the ref argument. The following would estimate the same model using the second category as the baseline:

multiple_regression <- lm(sales ~ adspend + airplay +

starpower + country + relevel(genre, ref = 2),

data = regression) #estimate linear model

summary(multiple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower + country +

## relevel(genre, ref = 2), data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -116.18 -26.54 0.05 27.98 154.56

##

## Coefficients:

## Estimate Std. Error t value

## (Intercept) 17.01739 18.19704 0.94

## adspend 0.07233 0.00657 11.00

## airplay 2.71418 0.26824 10.12

## starpower 10.49628 2.19380 4.78

## countryinternational 40.87988 8.40868 4.86

## relevel(genre, ref = 2)rock -47.69640 10.48717 -4.55

## relevel(genre, ref = 2)electronic -20.07606 7.98747 -2.51

## Pr(>|t|)

## (Intercept) 0.351

## adspend < 0.0000000000000002 ***

## airplay < 0.0000000000000002 ***

## starpower 0.0000034 ***

## countryinternational 0.0000024 ***

## relevel(genre, ref = 2)rock 0.0000095 ***

## relevel(genre, ref = 2)electronic 0.013 *

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 42 on 193 degrees of freedom

## Multiple R-squared: 0.735, Adjusted R-squared: 0.727

## F-statistic: 89.2 on 6 and 193 DF, p-value: <0.0000000000000002Note that while your choice of the baseline category impacts the coefficients and the significance level, the prediction for each group will be the same regardless of this choice.

6.2 Logistic regression

6.2.1 Motivation and intuition

In the last section we saw how to predict continuous outcomes (sales, height, etc.) via linear regression models. Another interesting case is that of binary outcomes, i.e. when the variable we want to model can only take two values (yes or no, group 1 or group 2, dead or alive, etc.). To this end we would like to estimate how our predictor variables change the probability of a value being 0 or 1. In this case we can technically still use a linear model (e.g. OLS). However, its predictions will most likely not be particularly useful. A more useful method is the logistic regression. In particular we are going to have a look at the logit model. In the following dataset we are trying to predict whether a song will be a top-10 hit on a popular music streaming platform. In a first step we are going to use only the danceability index as a predictor. Later we are going to add more independent variables.

library(ggplot2)

library(gridExtra)

chart_data <- read.delim2("https://raw.githubusercontent.com/IMSMWU/MRDA2018/master/data/chart_data_logistic.dat",

header = T, sep = "\t", stringsAsFactors = F, dec = ".")

# Create a new dummy variable 'top10', which is 1

# if a song made it to the top10 and 0 else:

chart_data$top10 <- ifelse(chart_data$rank < 11, 1,

0)

# Inspect data

head(chart_data)str(chart_data)## 'data.frame': 422 obs. of 27 variables:

## $ artistName : chr "dj mustard" "bing crosby" "post malone" "chris brown" ...

## $ trackID : chr "01gNiOqg8u7vT90uVgOVmz" "01h424WG38dgY34vkI3Yd0" "02opp1cycqiFNDpLd2o1J3" "02yRHV9Cgk8CUS2fx9lKVC" ...

## $ trackName : chr "Whole Lotta Lovin'" "White Christmas" "Big Lie" "Anyway" ...

## $ rank : int 120 70 129 130 182 163 12 86 67 77 ...

## $ streams : int 917710 1865526 1480436 894216 642784 809256 3490456 1737890 1914768 1056689 ...

## $ frequency : int 3 9 1 1 1 2 2 12 17 11 ...

## $ danceability : num 0.438 0.225 0.325 0.469 0.286 0.447 0.337 0.595 0.472 0.32 ...

## $ energy : num 0.399 0.248 0.689 0.664 0.907 0.795 0.615 0.662 0.746 0.752 ...

## $ key : int 4 9 6 7 8 8 9 11 6 6 ...

## $ loudness : num -8.75 -15.87 -4.95 -7.16 -4.74 ...

## $ speechiness : num 0.0623 0.0337 0.243 0.121 0.113 0.0443 0.0937 0.0362 0.119 0.056 ...

## $ acousticness : num 0.154 0.912 0.197 0.0566 0.0144 0.211 0.0426 0.0178 0.072 0.289 ...

## $ instrumentalness: num 0.00000845 0.000143 0 0.00000158 0 0.00169 0.0000167 0 0 0.000101 ...

## $ liveness : num 0.0646 0.404 0.0722 0.482 0.268 0.0725 0.193 0.0804 0.116 0.102 ...

## $ valence : num 0.382 0.185 0.225 0.267 0.271 0.504 0.0729 0.415 0.442 0.398 ...

## $ tempo : num 160.2 96 77.9 124.7 75.6 ...

## $ duration_ms : int 299160 183613 207680 211413 266640 397093 199973 218447 196040 263893 ...

## $ time_signature : int 5 4 4 4 4 4 4 4 4 4 ...

## $ isrc : chr "QMJMT1500808" "USMC14750470" "USUM71614468" "USRC11502943" ...

## $ spotifyArtistID : chr "0YinUQ50QDB7ZxSCLyQ40k" "6ZjFtWeHP9XN7FeKSUe80S" "246dkjvS1zLTtiykXe5h60" "7bXgB6jMjp9ATFy66eO08Z" ...

## $ releaseDate : chr "08.01.2016" "27.08.2007" "09.12.2016" "11.12.2015" ...

## $ daysSinceRelease: int 450 1000 114 478 527 429 506 132 291 556 ...

## $ spotifyFollowers: int 139718 123135 629600 4077185 2221348 9687258 8713999 39723 4422933 3462797 ...

## $ mbid : chr "0612bcce-e351-40be-b3d7-2bb5e1c23479" "2437980f-513a-44fc-80f1-b90d9d7fcf8f" "b1e26560-60e5-4236-bbdb-9aa5a8d5ee19" "c234fa42-e6a6-443e-937e-2f4b073538a3" ...

## $ artistCountry : chr "US" "US" "0" "US" ...

## $ indicator : int 1 1 1 1 1 1 1 1 1 1 ...

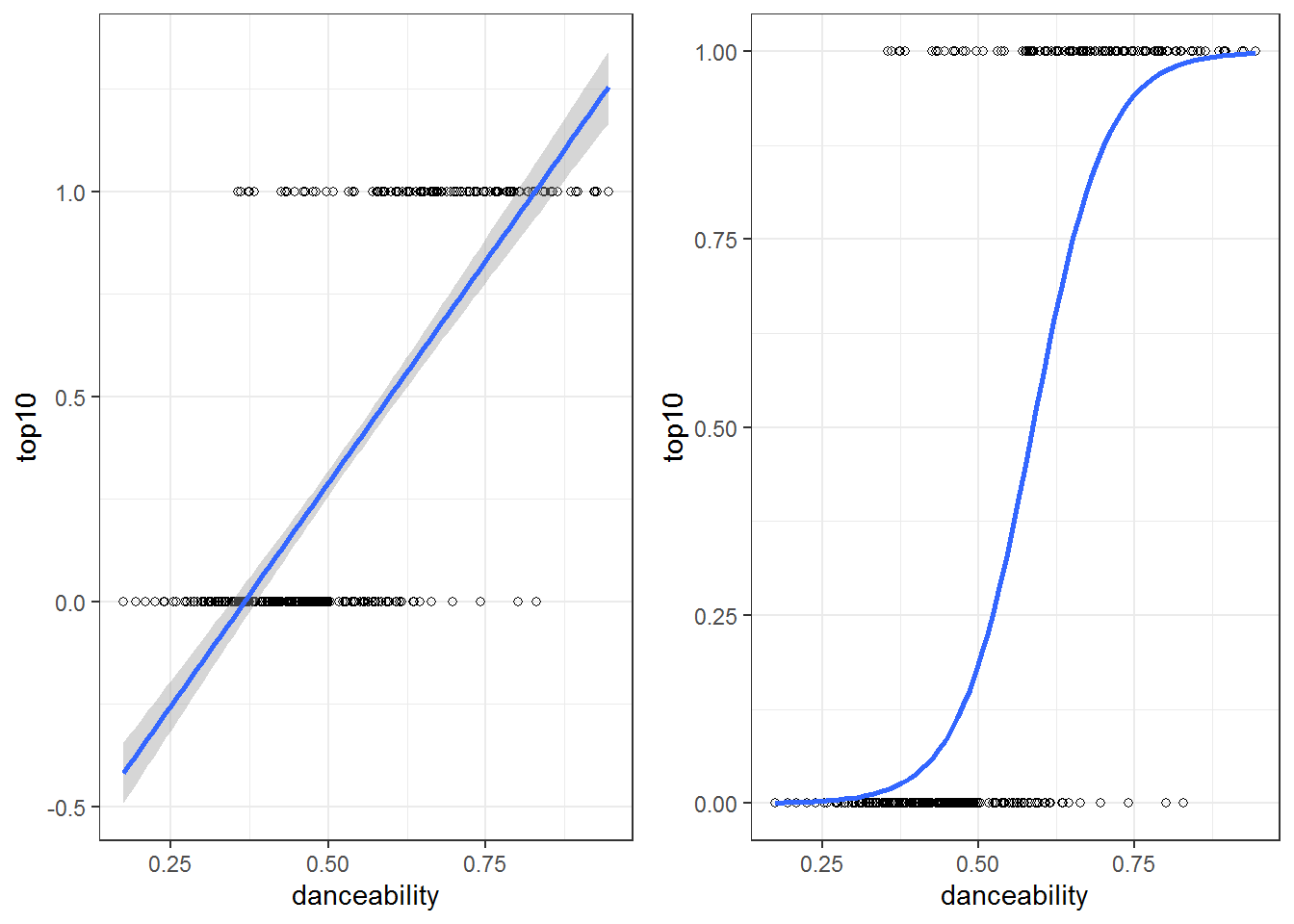

## $ top10 : num 0 0 0 0 0 0 0 0 0 0 ...Below are two attempts to model the data. The left assumes a linear probability model (calculated with the same methods that we used in the last chapter), while the right model is a logistic regression model. As you can see, the linear probability model produces probabilities that are above 1 and below 0, which are not valid probabilities, while the logistic model stays between 0 and 1. Notice that songs with a higher danceability index (on the right of the x-axis) seem to cluster more at \(1\) and those with a lower more at \(0\) so we expect a positive influence of danceability on the probability of a song to become a top-10 hit.

Figure 6.3: The same binary data explained by two models; A linear probability model (on the left) and a logistic regression model (on the right)

A key insight at this point is that the connection between \(\mathbf{X}\) and \(Y\) is non-linear in the logistic regression model. As we can see in the plot, the probability of success is most strongly affected by danceability around values of \(0.5\), while higher and lower values have a smaller marginal effect. This obviously also has consequences for the interpretation of the coefficients later on.

6.2.2 Technical details of the model

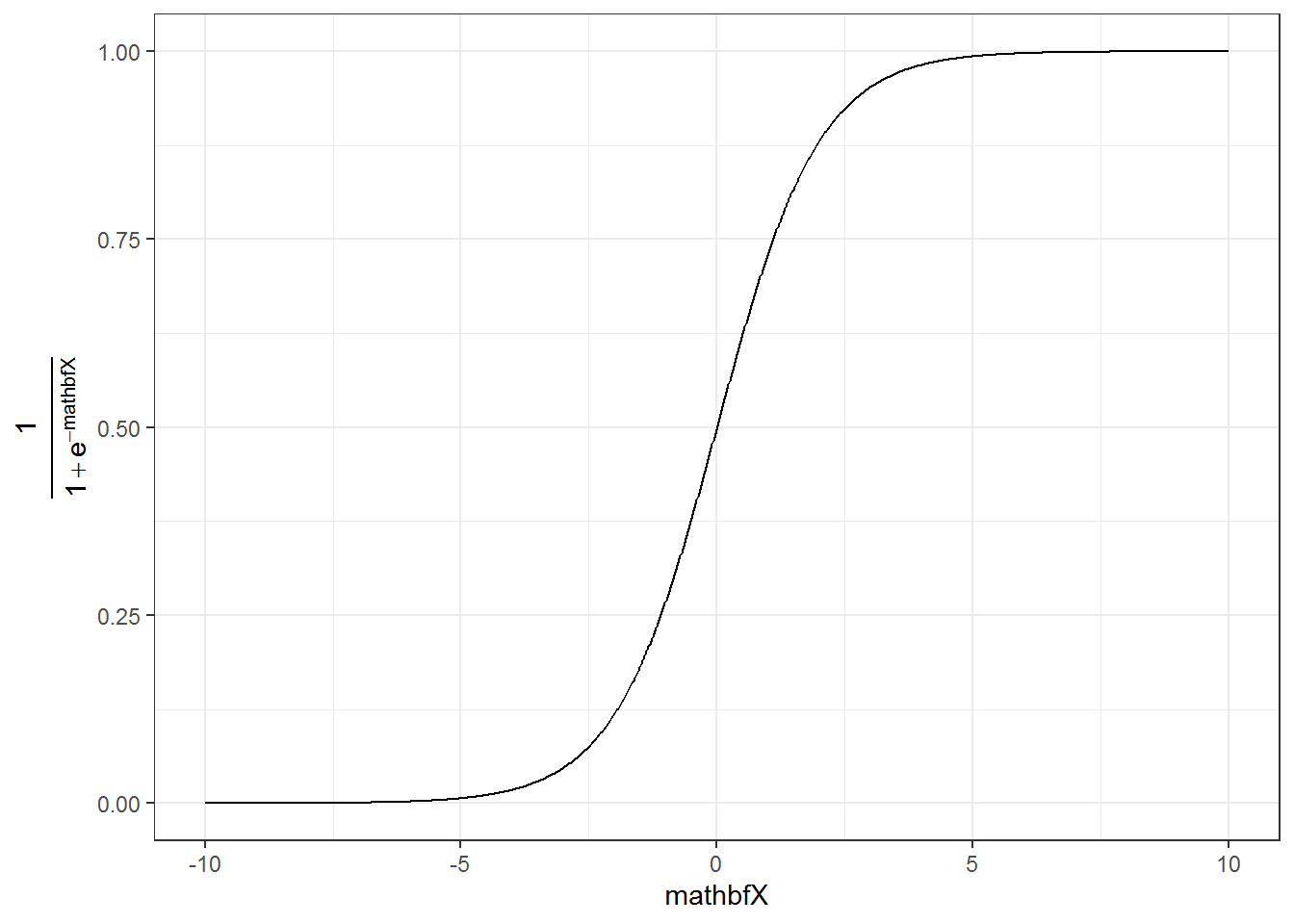

As the name suggests, the logistic function is an important component of the logistic regression model. It has the following form:

\[ f(\mathbf{X}) = \frac{1}{1 + e^{-\mathbf{X}}} \] This function transforms all real numbers into the range between 0 and 1. We need this to model probabilities, as probabilities can only be between 0 and 1.

##

## Attaching package: 'latex2exp'## The following object is masked from 'package:plotly':

##

## TeX

The logistic function on its own is not very useful yet, as we want to be able to determine how predictors influence the probability of a value to be equal to 1. To this end we replace the \(\mathbf{X}\) in the function above with our familiar linear specification, i.e.

\[ \mathbf{X} = \beta_0 + \beta_1 * x_{1,i} + \beta_2 * x_{2,i} + ... +\beta_m * x_{m,i}\\ f(\mathbf{X}) = P(y_i = 1) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 * x_{1,i} + \beta_2 * x_{2,i} + ... +\beta_m * x_{m,i})}} \]

In our case we only have \(\beta_0\) and \(\beta_1\), the coefficient associated with danceability.

In general we now have a mathematical relationship between our predictor variables \((x_1, ..., x_m)\) and the probability of \(y_i\) being equal to one. The last step is to estimate the parameters of this model \((\beta_0, \beta_1, ..., \beta_m)\) to determine the magnitude of the effects.

6.2.3 Estimation in R

We are now going to show how to perform logistic regression in R. Instead of lm() we now use glm(Y~X, family=binomial(link = 'logit')) to use the logit model. We can still use the summary() command to inspect the output of the model.

# Run the glm

logit_model <- glm(top10 ~ danceability, family = binomial(link = "logit"),

data = chart_data)

# Inspect model summary

summary(logit_model)##

## Call:

## glm(formula = top10 ~ danceability, family = binomial(link = "logit"),

## data = chart_data)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.885 -0.501 -0.238 0.293 2.820

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -10.041 0.896 -11.2 <0.0000000000000002 ***

## danceability 17.094 1.602 10.7 <0.0000000000000002 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 539.05 on 421 degrees of freedom

## Residual deviance: 258.49 on 420 degrees of freedom

## AIC: 262.5

##

## Number of Fisher Scoring iterations: 6Noticeably this output does not include an \(R^2\) value to asses model fit. Multiple “Pseudo \(R^2\)s”, similar to the one used in OLS, have been developed. There are packages that return the \(R^2\) given a logit model (see rcompanion or pscl). The calculation by hand is also fairly simple. We define the function logisticPseudoR2s() that takes a logit model as an input and returns three popular pseudo \(R^2\) values.

logisticPseudoR2s <- function(LogModel) {

dev <- LogModel$deviance

nullDev <- LogModel$null.deviance

modelN <- length(LogModel$fitted.values)

R.l <- 1 - dev/nullDev

R.cs <- 1 - exp(-(nullDev - dev)/modelN)

R.n <- R.cs/(1 - (exp(-(nullDev/modelN))))

cat("Pseudo R^2 for logistic regression\n")

cat("Hosmer and Lemeshow R^2 ", round(R.l, 3),

"\n")

cat("Cox and Snell R^2 ", round(R.cs, 3),

"\n")

cat("Nagelkerke R^2 ", round(R.n, 3),

"\n")

}

# Inspect Pseudo R2s

logisticPseudoR2s(logit_model)## Pseudo R^2 for logistic regression

## Hosmer and Lemeshow R^2 0.52

## Cox and Snell R^2 0.49

## Nagelkerke R^2 0.67The coefficients of the model give the change in the log odds of the dependent variable due to a unit change in the regressor. This makes the exact interpretation of the coefficients difficult, but we can still interpret the signs and the p-values which will tell us if a variable has a significant positive or negative impact on the probability of the dependent variable being \(1\). In order to get the odds ratios we can simply take the exponent of the coefficients.

exp(coef(logit_model))## (Intercept) danceability

## 0.000044 26532731.711423Notice that the coefficient is extremely large. That is (partly) due to the fact that the danceability variable is constrained to values between \(0\) and \(1\) and the coefficients are for a unit change. We can make the “unit-change” interpretation more meaningful by multiplying the danceability index by \(100\). This linear transformation does not affect the model fit or the p-values.

# Re-scale independet variable

chart_data$danceability_100 <- chart_data$danceability *

100

# Run the regression model

logit_model <- glm(top10 ~ danceability_100, family = binomial(link = "logit"),

data = chart_data)

# Inspect model summary

summary(logit_model)##

## Call:

## glm(formula = top10 ~ danceability_100, family = binomial(link = "logit"),

## data = chart_data)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.885 -0.501 -0.238 0.293 2.820

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -10.041 0.896 -11.2 <0.0000000000000002 ***

## danceability_100 0.171 0.016 10.7 <0.0000000000000002 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 539.05 on 421 degrees of freedom

## Residual deviance: 258.49 on 420 degrees of freedom

## AIC: 262.5

##

## Number of Fisher Scoring iterations: 6# Inspect Pseudo R2s

logisticPseudoR2s(logit_model)## Pseudo R^2 for logistic regression

## Hosmer and Lemeshow R^2 0.52

## Cox and Snell R^2 0.49

## Nagelkerke R^2 0.67# Convert coefficients to odds ratios

exp(coef(logit_model))## (Intercept) danceability_100

## 0.000044 1.186418We observe that danceability positively affects the likelihood of becoming at top-10 hit. To get the confidence intervals for the coefficients we can use the same function as with OLS

confint(logit_model)## 2.5 % 97.5 %

## (Intercept) -11.92 -8.4

## danceability_100 0.14 0.2In order to get a rough idea about the magnitude of the effects we can calculate the partial effects at the mean of the data (that is the effect for the average observation). Alternatively, we can calculate the mean of the effects (that is the average of the individual effects). Both can be done with the logitmfx(...) function from the mfx package. If we set logitmfx(logit_model, data = my_data, atmean = FALSE) we calculate the latter. Setting atmean = TRUE will calculate the former. However, in general we are most interested in the sign and significance of the coefficient.

library(mfx)

# Average partial effect

logitmfx(logit_model, data = chart_data, atmean = FALSE)## Call:

## logitmfx(formula = logit_model, data = chart_data, atmean = FALSE)

##

## Marginal Effects:

## dF/dx Std. Err. z P>|z|

## danceability_100 0.01573 0.00298 5.29 0.00000013 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1This now gives the average partial effects in percentage points. An additional point on the danceability scale (from \(1\) to \(100\)), on average, makes it \(1.57%\) more likely for a song to become at top-10 hit.

To get the effect of an additional point at a specific value, we can calculate the odds ratio by predicting the probability at a value and at the value \(+1\). For example if we are interested in how much more likely a song with 51 compared to 50 danceability is to become a hit we can simply calculate the following

# Probability of a top 10 hit with a danceability

# of 50

prob_50 <- exp(-(-summary(logit_model)$coefficients[1,

1] - summary(logit_model)$coefficients[2, 1] *

50))

prob_50## [1] 0.22# Probability of a top 10 hit with a danceability

# of 51

prob_51 <- exp(-(-summary(logit_model)$coefficients[1,

1] - summary(logit_model)$coefficients[2, 1] *

51))

prob_51## [1] 0.27# Odds ratio

prob_51/prob_50## [1] 1.2So the odds are 20% higher at 51 than at 50.

6.2.3.1 Logistic model with multiple predictors

Of course we can also use multiple predictors in logistic regression as shown in the formula above. We might want to add spotify followers (in million) and weeks since the release of the song.

chart_data$spotify_followers_m <- chart_data$spotifyFollowers/1000000

chart_data$weeks_since_release <- chart_data$daysSinceRelease/7Again, the familiar formula interface can be used with the glm() function. All the model summaries shown above still work with multiple predictors.

multiple_logit_model <- glm(top10 ~ danceability_100 +

spotify_followers_m + weeks_since_release, family = binomial(link = "logit"),

data = chart_data)

summary(multiple_logit_model)##

## Call:

## glm(formula = top10 ~ danceability_100 + spotify_followers_m +

## weeks_since_release, family = binomial(link = "logit"), data = chart_data)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.886 -0.439 -0.208 0.231 2.801

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -9.60376 0.99048 -9.70 < 0.0000000000000002 ***

## danceability_100 0.16624 0.01636 10.16 < 0.0000000000000002 ***

## spotify_followers_m 0.19772 0.06003 3.29 0.00099 ***

## weeks_since_release -0.01298 0.00496 -2.62 0.00883 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 534.91 on 416 degrees of freedom

## Residual deviance: 239.15 on 413 degrees of freedom

## (5 observations deleted due to missingness)

## AIC: 247.1

##

## Number of Fisher Scoring iterations: 6logisticPseudoR2s(multiple_logit_model)## Pseudo R^2 for logistic regression

## Hosmer and Lemeshow R^2 0.55

## Cox and Snell R^2 0.51

## Nagelkerke R^2 0.7exp(coef(multiple_logit_model))## (Intercept) danceability_100 spotify_followers_m weeks_since_release

## 0.000067 1.180851 1.218617 0.987108confint(multiple_logit_model)## Waiting for profiling to be done...## 2.5 % 97.5 %

## (Intercept) -11.680 -7.7821

## danceability_100 0.136 0.2006

## spotify_followers_m 0.081 0.3171

## weeks_since_release -0.023 -0.00366.2.3.2 Model selection

The question remains, whether a variable should be added to the model. We will present two methods for model selection for logistic regression. The first is based on the Akaike Information Criterium (AIC). It is reported with the summary output for logit models. The value of the AIC is relative, meaning that it has no interpretation by itself. However, it can be used to compare and select models. The model with the lowest AIC value is the one that should be chosen. Note that the AIC does not indicate how well the model fits the data, but is merely used to compare models.

For example, consider the following model, where we exclude the followers covariate. Seeing as it was able to contribute significantly to the explanatory power of the model, the AIC increases, indicating that the model including followers is better suited to explain the data. We always want the lowest possible AIC.

multiple_logit_model2 <- glm(top10 ~ danceability_100 +

weeks_since_release, family = binomial(link = "logit"),

data = chart_data)

summary(multiple_logit_model2)##

## Call:

## glm(formula = top10 ~ danceability_100 + weeks_since_release,

## family = binomial(link = "logit"), data = chart_data)

##

## Deviance Residuals:

## Min 1Q Median 3Q Max

## -2.958 -0.472 -0.219 0.256 2.876

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -8.98023 0.93065 -9.65 <0.0000000000000002 ***

## danceability_100 0.16650 0.01611 10.34 <0.0000000000000002 ***

## weeks_since_release -0.01281 0.00484 -2.65 0.0081 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 534.91 on 416 degrees of freedom

## Residual deviance: 250.12 on 414 degrees of freedom

## (5 observations deleted due to missingness)

## AIC: 256.1

##

## Number of Fisher Scoring iterations: 6As a second measure for variable selection, you can use the pseudo \(R^2\)s as shown above. The fit is distinctly worse according to all three values presented here, when excluding the Spotify followers.

logisticPseudoR2s(multiple_logit_model2)## Pseudo R^2 for logistic regression

## Hosmer and Lemeshow R^2 0.53

## Cox and Snell R^2 0.49

## Nagelkerke R^2 0.686.2.3.3 Predictions

We can predict the probability given an observation using the predict(my_logit, newdata = ..., type = "response") function. Replace ... with the observed values for which you would like to predict the outcome variable.

# Prediction for one observation

predict(multiple_logit_model, newdata = data.frame(danceability_100 = 50,

spotify_followers_m = 10, weeks_since_release = 1),

type = "response")## 1

## 0.66The prediction indicates that a song with danceability of \(50\) from an artist with \(10M\) Spotify followers has a \(66%\) chance of being in the top-10, 1 week after its release.

6.2.3.4 Perfect Prediction Logit

Perfect prediction occurs whenever a linear function of \(X\) can perfectly separate the \(1\)s from the \(0\)s in the dependent variable. This is problematic when estimating a logit model as it will result in biased estimators (also check to p-values in the example!). R will return the following message if this occurs:

glm.fit: fitted probabilities numerically 0 or 1 occurred

Given this error, one should not use the output of the glm(...) function for the analysis. There are various ways to deal with this problem, one of which is to use Firth’s bias-reduced penalized-likelihood logistic regression with the logistf(Y~X) function in the logistf package.

6.2.3.4.1 Example

In this example data \(Y = 0\) if \(x_1 <0\) and \(Y=1\) if \(x_1>0\) and we thus have perfect prediction. As we can see the output of the regular logit model is not interpretable. The standard errors are huge compared to the coefficients and thus the p-values are \(1\) despite \(x_1\) being a predictor of \(Y\). Thus, we turn to the penalized-likelihood version. This model correctly indicates that \(x_1\) is in fact a predictor for \(Y\) as the coefficient is significant.

Y <- c(0, 0, 0, 0, 1, 1, 1, 1)

X <- cbind(c(-1, -2, -3, -3, 5, 6, 10, 11), c(3, 2,

-1, -1, 2, 4, 1, 0))

# Perfect prediction with regular logit

summary(glm(Y ~ X, family = binomial(link = "logit")))##

## Call:

## glm(formula = Y ~ X, family = binomial(link = "logit"))

##

## Deviance Residuals:

## 1 2 3 4 5

## -0.000010220 -0.000001230 -0.000003368 -0.000003368 0.000010589

## 6 7 8

## 0.000006079 0.000000021 0.000000021

##

## Coefficients:

## Estimate Std. Error z value Pr(>|z|)

## (Intercept) -6.94 113859.81 0 1

## X1 7.36 15925.25 0 1

## X2 -3.12 43853.49 0 1

##

## (Dispersion parameter for binomial family taken to be 1)

##

## Null deviance: 11.09035488895912 on 7 degrees of freedom

## Residual deviance: 0.00000000027772 on 5 degrees of freedom

## AIC: 6

##

## Number of Fisher Scoring iterations: 24library(logistf)

# Perfect prediction with penalized-likelihood

# logit

summary(logistf(Y ~ X))## logistf(formula = Y ~ X)

##

## Model fitted by Penalized ML

## Coefficients:

## coef se(coef) lower 0.95 upper 0.95 Chisq p method

## (Intercept) -0.989 1.21 -10.217 1.9 0.59 0.442 2

## X1 0.332 0.18 0.042 1.5 5.32 0.021 2

## X2 0.083 0.51 -2.179 3.4 0.02 0.888 2

##

## Method: 1-Wald, 2-Profile penalized log-likelihood, 3-None

##

## Likelihood ratio test=5.8 on 2 df, p=0.055, n=8

## Wald test = 3.7 on 2 df, p = 0.15Learning check

(LC6.1) What is a correlation coefficient?

- It describes the difference in means of two variables

- It describes the causal relation between two variables

- It is the standardized covariance

- It describes the degree to which the variation in one variable is related to the variation in another variable

- None of the above

(LC6.2) Which line through a scatterplot produces the best fit in a linear regression model?

- The line associated with the steepest slope parameter

- The line that minimizes the sum of the squared deviations of the predicted values (regression line) from the observed values

- The line that minimizes the sum of the squared residuals

- The line that maximizes the sum of the squared residuals

- None of the above

(LC6.3) Which of the following statements about the adjusted R-squared is TRUE?

- It is always larger than the regular \(R^{2}\)

- It increases with every additional variable

- It increases only with additional variables that add more explanatory power than pure chance

- It contains a “penalty” for including unnecessary variables

- None of the above

(LC6.4) When do you use a logistic regression model?

- When the dependent variable is continuous

- When the independent and dependent variables are binary

- When the dependent variable is binary

- None of the above

(LC6.5) What is the correct way to implement a linear regression model in R? (x = independent variable, y = dependent variable)?

lm(y~x, data=data)lm(x~y + error, data=data)lm(x~y, data=data)lm(y~x + error, data=data)- None of the above

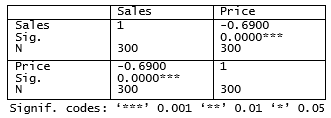

(LC6.6) Consider the output from a bivariate correlation below

- …lower prices cause higher sales.

- …lower prices are associated with higher sales and vice versa.

- None of the above

(LC6.7) When interpreting the statistical significance of a regression coefficient, the p-value…

(LC6.7) When interpreting the statistical significance of a regression coefficient, the p-value…