7 Regression

7.1 Correlation

Before we start with regression analysis, we will review the basic concept of correlation first. Correlation helps us to determine the degree to which the variation in one variable, X, is related to the variation in another variable, Y.

7.1.1 Correlation coefficient

The correlation coefficient summarizes the strength of the linear relationship between two metric (interval or ratio scaled) variables. Let’s consider a simple example. Say you conduct a survey to investigate the relationship between the attitude towards a city and the duration of residency. The “Attitude” variable can take values between 1 (very unfavorable) and 12 (very favorable), and the “duration of residency” is measured in years. Let’s further assume for this example that the attitude measurement represents an interval scale (although it is usually not realistic to assume that the scale points on an itemized rating scale have the same distance). To keep it simple, let’s further assume that you only asked 12 people. We can create a short data set like this:

library(psych)

attitude <- c(6,9,8,3,10,4,5,2,11,9,10,2)

duration <- c(10,12,12,4,12,6,8,2,18,9,17,2)

att_data <- data.frame(attitude, duration)

att_data <- att_data[order(-attitude), ]

att_data$respodentID <- c(1:12)

str(att_data)## 'data.frame': 12 obs. of 3 variables:

## $ attitude : num 11 10 10 9 9 8 6 5 4 3 ...

## $ duration : num 18 12 17 12 9 12 10 8 6 4 ...

## $ respodentID: int 1 2 3 4 5 6 7 8 9 10 ...## vars n mean sd median trimmed mad min max range skew kurtosis

## attitude 1 12 6.58 3.32 7.0 6.6 4.45 2 11 9 -0.14 -1.74

## duration 2 12 9.33 5.26 9.5 9.2 4.45 2 18 16 0.10 -1.27

## se

## attitude 0.96

## duration 1.52## attitude duration respodentID

## 9 11 18 1

## 5 10 12 2

## 11 10 17 3

## 2 9 12 4

## 10 9 9 5

## 3 8 12 6

## 1 6 10 7

## 7 5 8 8

## 6 4 6 9

## 4 3 4 10

## 8 2 2 11

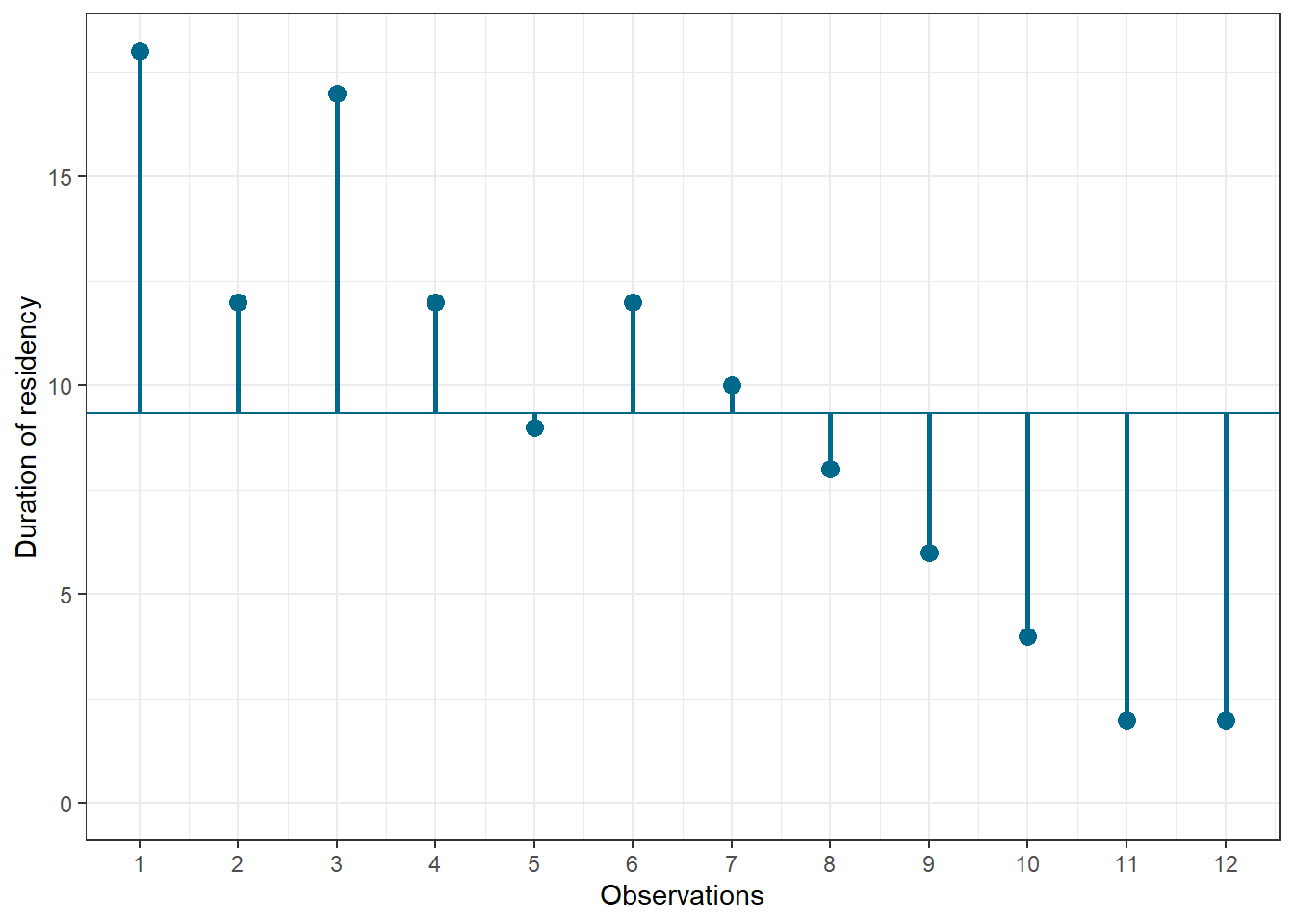

## 12 2 2 12Let’s look at the data first. The following graph shows the individual data points for the “duration of residency”” variable, where the y-axis shows the duration of residency in years and the x-axis shows the respondent ID. The blue horizontal line represents the mean of the variable (9.33) and the vertical lines show the distance of the individual data points from the mean.

Figure 1.3: Scores for duration of residency variable

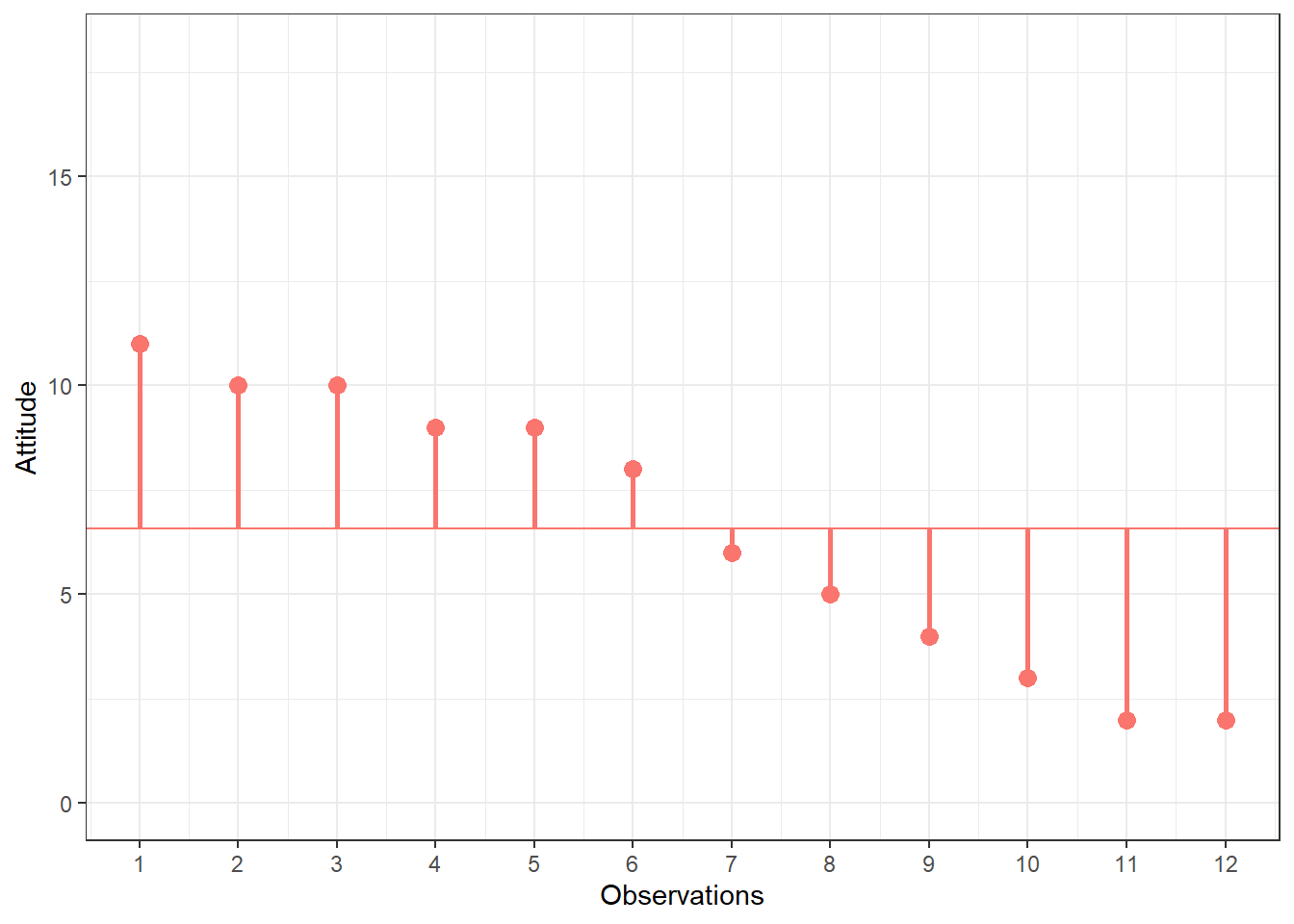

You can see that there are some respondents that have been living in the city longer than average and some respondents that have been living in the city shorter than average. Let’s do the same for the second variable (“Attitude”). Again, the y-axis shows the observed scores for this variable and the x-axis shows the respondent ID.

Figure 1.4: Scores for attitude variable

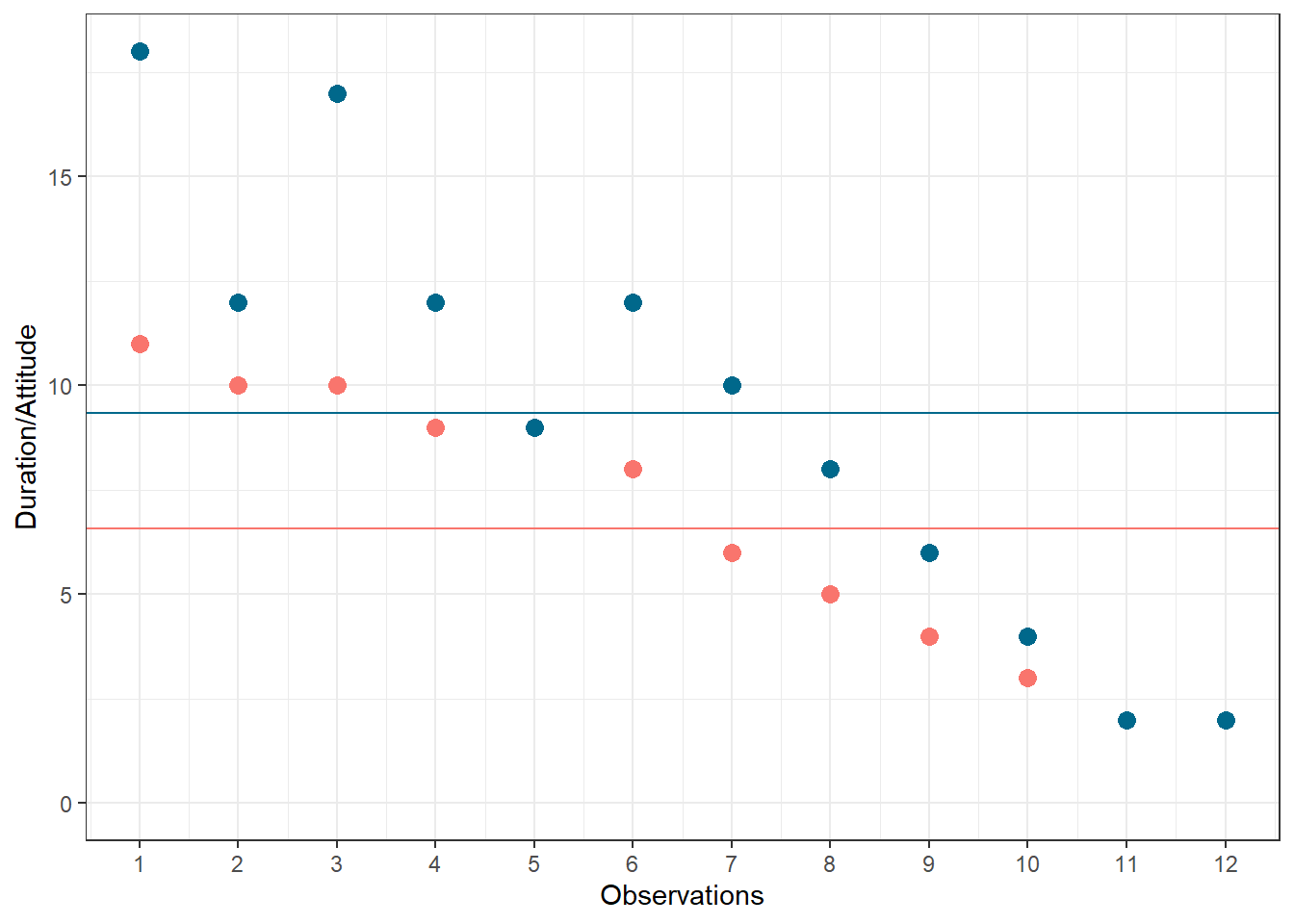

Again, we can see that some respondents have an above average attitude towards the city (more favorable) and some respondents have a below average attitude towards the city. Let’s combine both variables in one graph now to see if there is some co-movement:

Figure 5.1: Scores for attitude and duration of residency variables

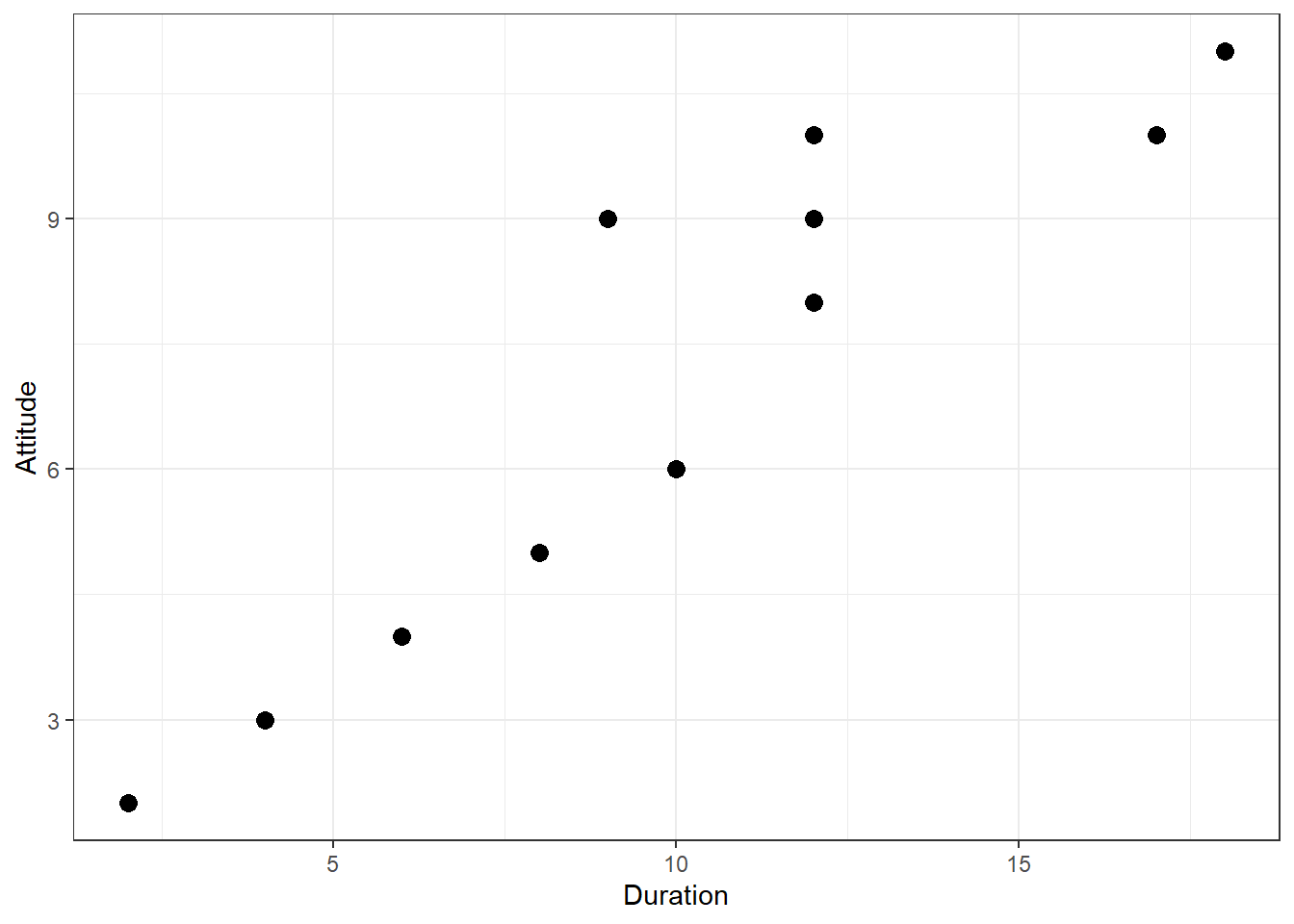

We can see that there is indeed some co-movement here. The variables covary because respondents who have an above (below) average attitude towards the city also appear to have been living in the city for an above (below) average amount of time and vice versa. Correlation helps us to quantify this relationship. Before you proceed to compute the correlation coefficient, you should first look at the data. We usually use a scatterplot to visualize the relationship between two metric variables:

Figure 1.5: Scatterplot for durationand attitute variables

How can we compute the correlation coefficient? Remember that the variance measures the average deviation from the mean of a variable:

\[\begin{equation} \begin{split} s_x^2&=\frac{\sum_{i=1}^{N} (X_i-\overline{X})^2}{N-1} \\ &= \frac{\sum_{i=1}^{N} (X_i-\overline{X})*(X_i-\overline{X})}{N-1} \end{split} \tag{7.1} \end{equation}\]

When we consider two variables, we multiply the deviation for one variable by the respective deviation for the second variable:

\((X_i-\overline{X})*(Y_i-\overline{Y})\)

This is called the cross-product deviation. Then we sum the cross-product deviations:

\(\sum_{i=1}^{N}(X_i-\overline{X})*(Y_i-\overline{Y})\)

… and compute the average of the sum of all cross-product deviations to get the covariance:

\[\begin{equation} Cov(x, y) =\frac{\sum_{i=1}^{N}(X_i-\overline{X})*(Y_i-\overline{Y})}{N-1} \tag{7.2} \end{equation}\]

You can easily compute the covariance manually as follows

x <- att_data$duration

x_bar <- mean(att_data$duration)

y <- att_data$attitude

y_bar <- mean(att_data$attitude)

N <- nrow(att_data)

cov <- (sum((x - x_bar)*(y - y_bar))) / (N - 1)

cov## [1] 16.333333Or you simply use the built-in cov() function:

## [1] 16.333333A positive covariance indicates that as one variable deviates from the mean, the other variable deviates in the same direction. A negative covariance indicates that as one variable deviates from the mean (e.g., increases), the other variable deviates in the opposite direction (e.g., decreases).

However, the size of the covariance depends on the scale of measurement. Larger scale units will lead to larger covariance. To overcome the problem of dependence on measurement scale, we need to convert the covariance to a standard set of units through standardization by dividing the covariance by the standard deviation (similar to how we compute z-scores).

With two variables, there are two standard deviations. We simply multiply the two standard deviations. We then divide the covariance by the product of the two standard deviations to get the standardized covariance, which is known as a correlation coefficient r:

\[\begin{equation} r=\frac{Cov_{xy}}{s_x*s_y} \tag{7.3} \end{equation}\]

This is known as the product moment correlation (r) and it is straight-forward to compute:

## [1] 0.93607782Or you could just use the cor() function:

## attitude duration

## attitude 1.00000000 0.93607782

## duration 0.93607782 1.00000000The properties of the correlation coefficient (‘r’) are:

- ranges from -1 to + 1

- +1 indicates perfect linear relationship

- -1 indicates perfect negative relationship

- 0 indicates no linear relationship

- ± .1 represents small effect

- ± .3 represents medium effect

- ± .5 represents large effect

7.1.2 Significance testing

How can we determine if our two variables are significantly related? To test this, we denote the population moment correlation ρ. Then we test the null of no relationship between variables:

\[H_0:\rho=0\] \[H_1:\rho\ne0\]

The test statistic is:

\[\begin{equation} t=\frac{r*\sqrt{N-2}}{\sqrt{1-r^2}} \tag{7.4} \end{equation}\]

It has a t distribution with n - 2 degrees of freedom. Then, we follow the usual procedure of calculating the test statistic and comparing the test statistic to the critical value of the underlying probability distribution. If the calculated test statistic is larger than the critical value, the null hypothesis of no relationship between X and Y is rejected.

## [1] 8.4144314## [1] 2.2281389## [1] 0.0000075451612Or you can simply use the cor.test() function, which also produces the 95% confidence interval:

cor.test(att_data$attitude, att_data$duration, alternative = "two.sided", method = "pearson", conf.level = 0.95)##

## Pearson's product-moment correlation

##

## data: att_data$attitude and att_data$duration

## t = 8.41443, df = 10, p-value = 0.0000075452

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.78260411 0.98228152

## sample estimates:

## cor

## 0.93607782To determine the linear relationship between variables, the data only needs to be measured using interval scales. If you want to test the significance of the association, the sampling distribution needs to be normally distributed (we usually assume this when our data are normally distributed or when N is large). If parametric assumptions are violated, you should use non-parametric tests:

- Spearman’s correlation coefficient: requires ordinal data and ranks the data before applying Pearson’s equation.

- Kendall’s tau: use when N is small or the number of tied ranks is large.

cor.test(att_data$attitude, att_data$duration, alternative = "two.sided", method = "spearman", conf.level = 0.95)##

## Spearman's rank correlation rho

##

## data: att_data$attitude and att_data$duration

## S = 14.1969, p-value = 0.0000021833

## alternative hypothesis: true rho is not equal to 0

## sample estimates:

## rho

## 0.95036059cor.test(att_data$attitude, att_data$duration, alternative = "two.sided", method = "kendall", conf.level = 0.95)##

## Kendall's rank correlation tau

##

## data: att_data$attitude and att_data$duration

## z = 3.90948, p-value = 0.000092496

## alternative hypothesis: true tau is not equal to 0

## sample estimates:

## tau

## 0.89602867Report the results:

A Pearson product-moment correlation coefficient was computed to assess the relationship between the duration of residence in a city and the attitude toward the city. There was a positive correlation between the two variables, r = 0.936, n = 12, p < 0.05. A scatterplot summarizes the results (Figure XY).

A note on the interpretation of correlation coefficients:

As we have already seen in chapter 1, correlation coefficients give no indication of the direction of causality. In our example, we can conclude that the attitude toward the city is more positive as the years of residence increases. However, we cannot say that the years of residence cause the attitudes to be more positive. There are two main reasons for caution when interpreting correlations:

- Third-variable problem: there may be other unobserved factors that affect both the ‘attitude towards a city’ and the ‘duration of residency’ variables

- Direction of causality: Correlations say nothing about which variable causes the other to change (reverse causality: attitudes may just as well cause the years of residence variable).

7.2 Regression

Correlations measure relationships between variables (i.e., how much two variables covary). Using regression analysis we can predict the outcome of a dependent variable (Y) from one or more independent variables (X). For example, we could be interested in how many products will we will sell if we increase the advertising expenditures by 1000 Euros? In regression analysis, we fit a model to our data and use it to predict the values of the dependent variable from one predictor variable (bivariate regression) or several predictor variables (multiple regression). The following table shows a comparison of correlation and regression analysis:

| Correlation | Regression | |

|---|---|---|

| Estimated coefficient | Coefficient of correlation (bounded between -1 and +1) | Regression coefficient (not bounded a priori) |

| Interpretation | Linear association between two variables; Association is bidirectional | (Linear) relation between one or more independent variables and dependent variable; Relation is directional |

| Role of theory | Theory neither required nor testable | Theory required and testable |

7.2.1 Simple linear regression

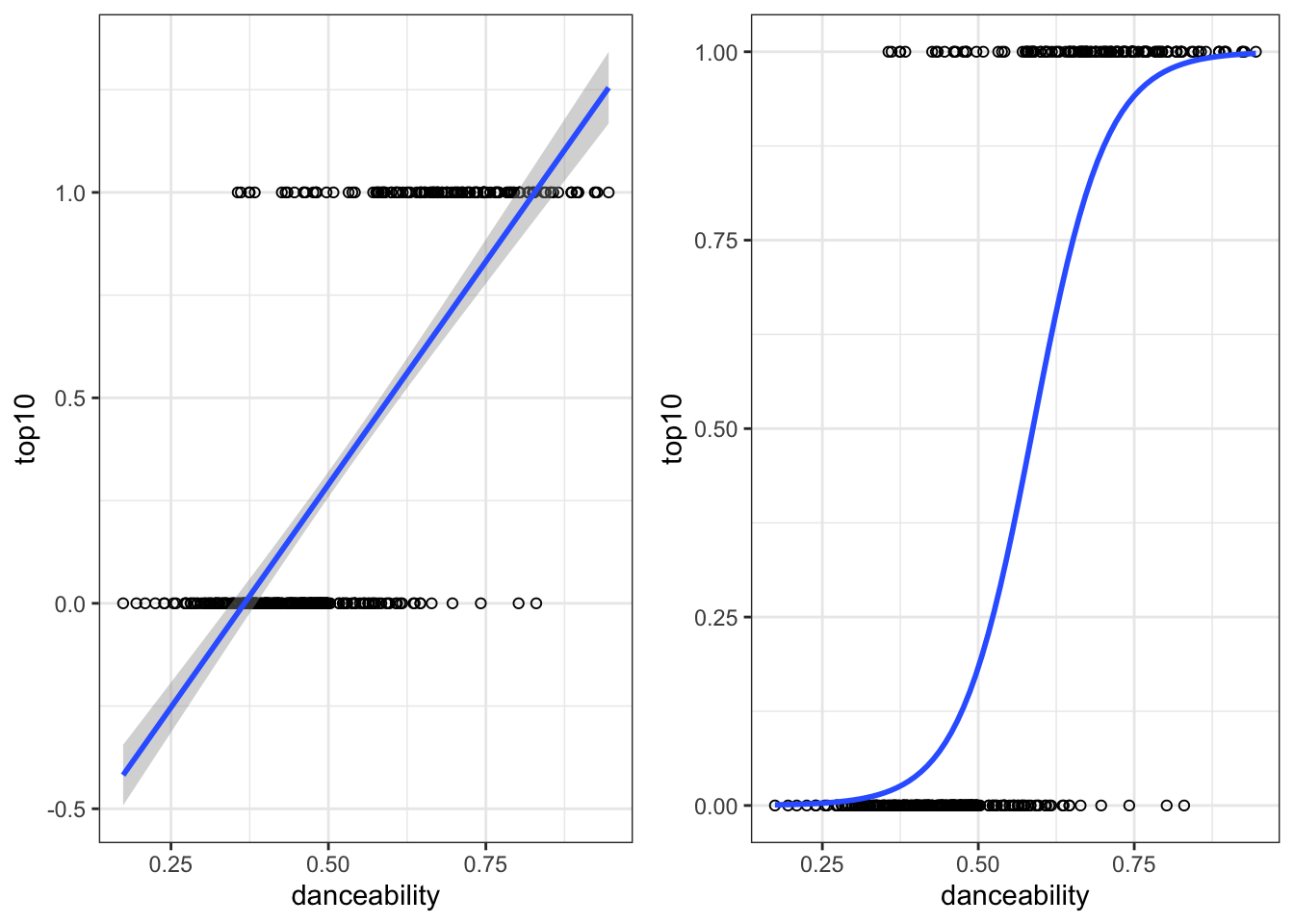

In simple linear regression, we assess the relationship between one dependent (regressand) and one independent (regressor) variable. The goal is to fit a line through a scatterplot of observations in order to find the line that best describes the data (scatterplot).

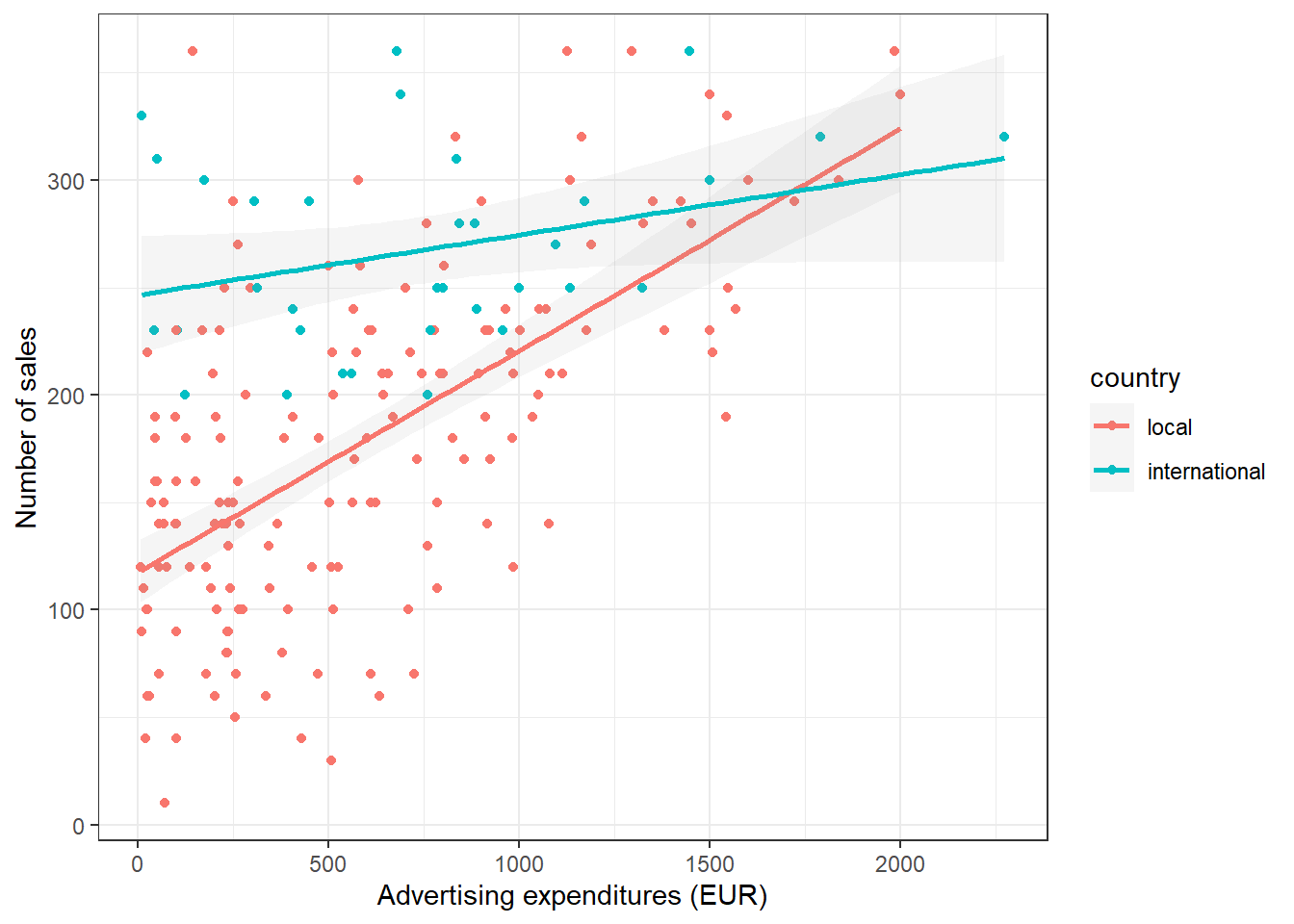

Suppose you are a marketing research analyst at a music label and your task is to suggest, on the basis of historical data, a marketing plan for the next year that will maximize product sales. The data set that is available to you includes information on the sales of music downloads (units), advertising expenditures (in thousands of Euros), the number of radio plays an artist received per week (airplay), the number of previous releases of an artist (starpower), repertoire origin (country; 0 = local, 1 = international), and genre (1 = rock, 2 = pop, 3 = electronic). Let’s load and inspect the data first:

regression <- read.table("https://raw.githubusercontent.com/IMSMWU/Teaching/master/MRDA2017/music_sales_regression.dat",

sep = "\t",

header = TRUE) #read in data

regression$country <- factor(regression$country, levels = c(0:1), labels = c("local", "international")) #convert grouping variable to factor

regression$genre <- factor(regression$genre, levels = c(1:3), labels = c("rock", "pop","electronic")) #convert grouping variable to factor

head(regression)## vars n mean sd median trimmed mad min max range

## sales 1 200 193.20 80.70 200.00 192.69 88.96 10.0 360.00 350.00

## adspend 2 200 614.41 485.66 531.92 560.81 489.09 9.1 2271.86 2262.76

## airplay 3 200 27.50 12.27 28.00 27.46 11.86 0.0 63.00 63.00

## starpower 4 200 6.77 1.40 7.00 6.88 1.48 1.0 10.00 9.00

## genre* 5 200 2.40 0.79 3.00 2.50 0.00 1.0 3.00 2.00

## country* 6 200 1.17 0.38 1.00 1.09 0.00 1.0 2.00 1.00

## skew kurtosis se

## sales 0.04 -0.72 5.71

## adspend 0.84 0.17 34.34

## airplay 0.06 -0.09 0.87

## starpower -1.27 3.56 0.10

## genre* -0.83 -0.91 0.06

## country* 1.74 1.05 0.03As stated above, regression analysis may be used to relate a quantitative response (“dependent variable”) to one or more predictor variables (“independent variables”). In a simple linear regression, we have one dependent and one independent variable and we regress the dependent variable on the independent variable.

Here are a few important questions that we might seek to address based on the data:

- Is there a relationship between advertising budget and sales?

- How strong is the relationship between advertising budget and sales?

- Which other variables contribute to sales?

- How accurately can we estimate the effect of each variable on sales?

- How accurately can we predict future sales?

- Is the relationship linear?

- Is there synergy among the advertising activities?

We may use linear regression to answer these questions. We will see later that the interpretation of the results strongly depends on the goal of the analysis - whether you would like to simply predict an outcome variable or you would like to explain the causal effect of the independent variable on the dependent variable (see chapter 1). Let’s start with the first question and investigate the relationship between advertising and sales.

7.2.1.1 Estimating the coefficients

A simple linear regression model only has one predictor and can be written as:

\[\begin{equation} Y=\beta_0+\beta_1X+\epsilon \tag{7.5} \end{equation}\]

In our specific context, let’s consider only the influence of advertising on sales for now:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\epsilon \tag{7.6} \end{equation}\]

The word “adspend” represents data on advertising expenditures that we have observed and β1 (the “slope”“) represents the unknown relationship between advertising expenditures and sales. It tells you by how much sales will increase for an additional Euro spent on advertising. β0 (the”intercept”) is the number of sales we would expect if no money is spent on advertising. Together, β0 and β1 represent the model coefficients or parameters. The error term (ε) captures everything that we miss by using our model, including, (1) misspecifications (the true relationship might not be linear), (2) omitted variables (other variables might drive sales), and (3) measurement error (our measurement of the variables might be imperfect).

Once we have used our training data to produce estimates for the model coefficients, we can predict future sales on the basis of a particular value of advertising expenditures by computing:

\[\begin{equation} \hat{Sales}=\hat{\beta_0}+\hat{\beta_1}*adspend \tag{7.7} \end{equation}\]

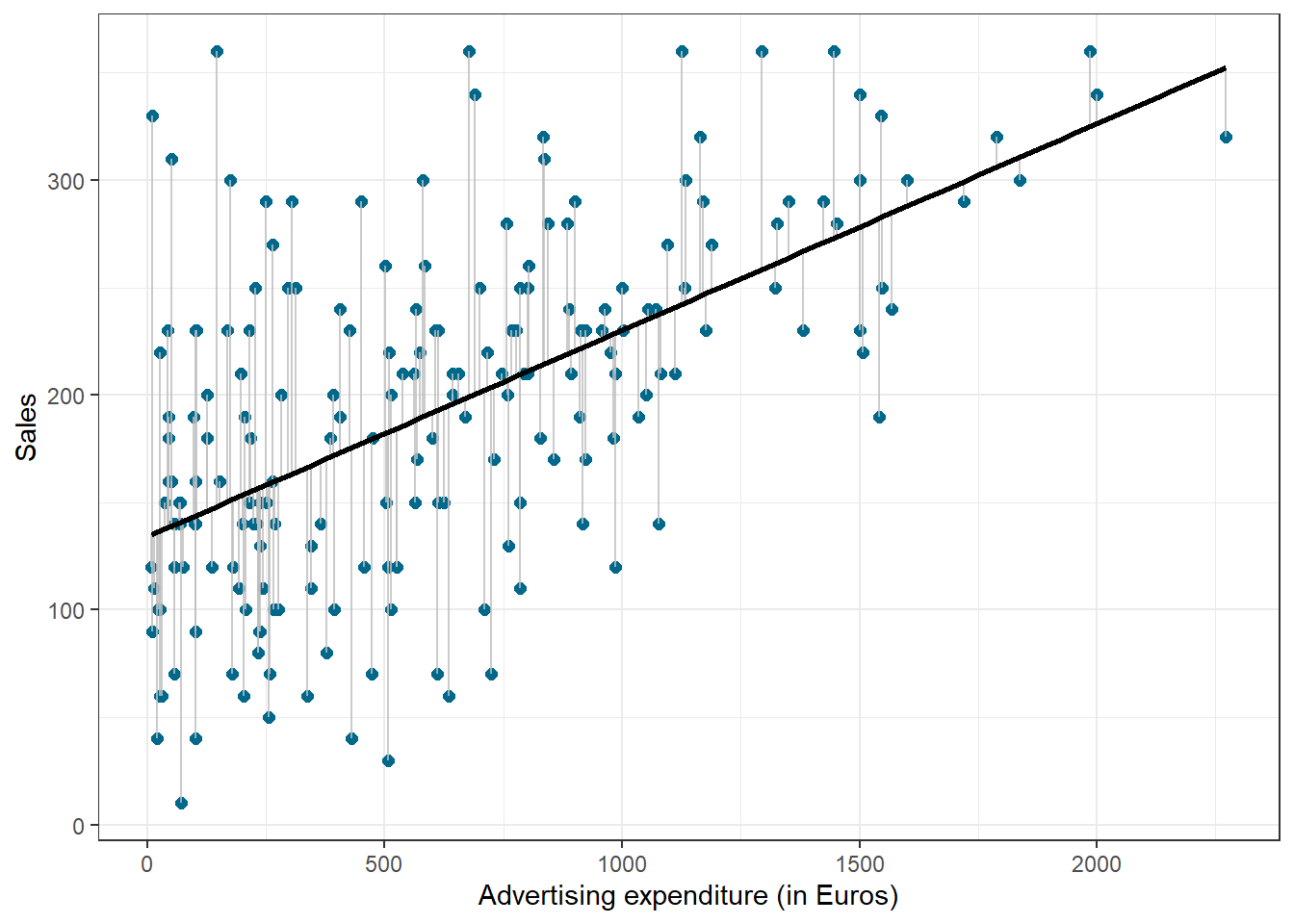

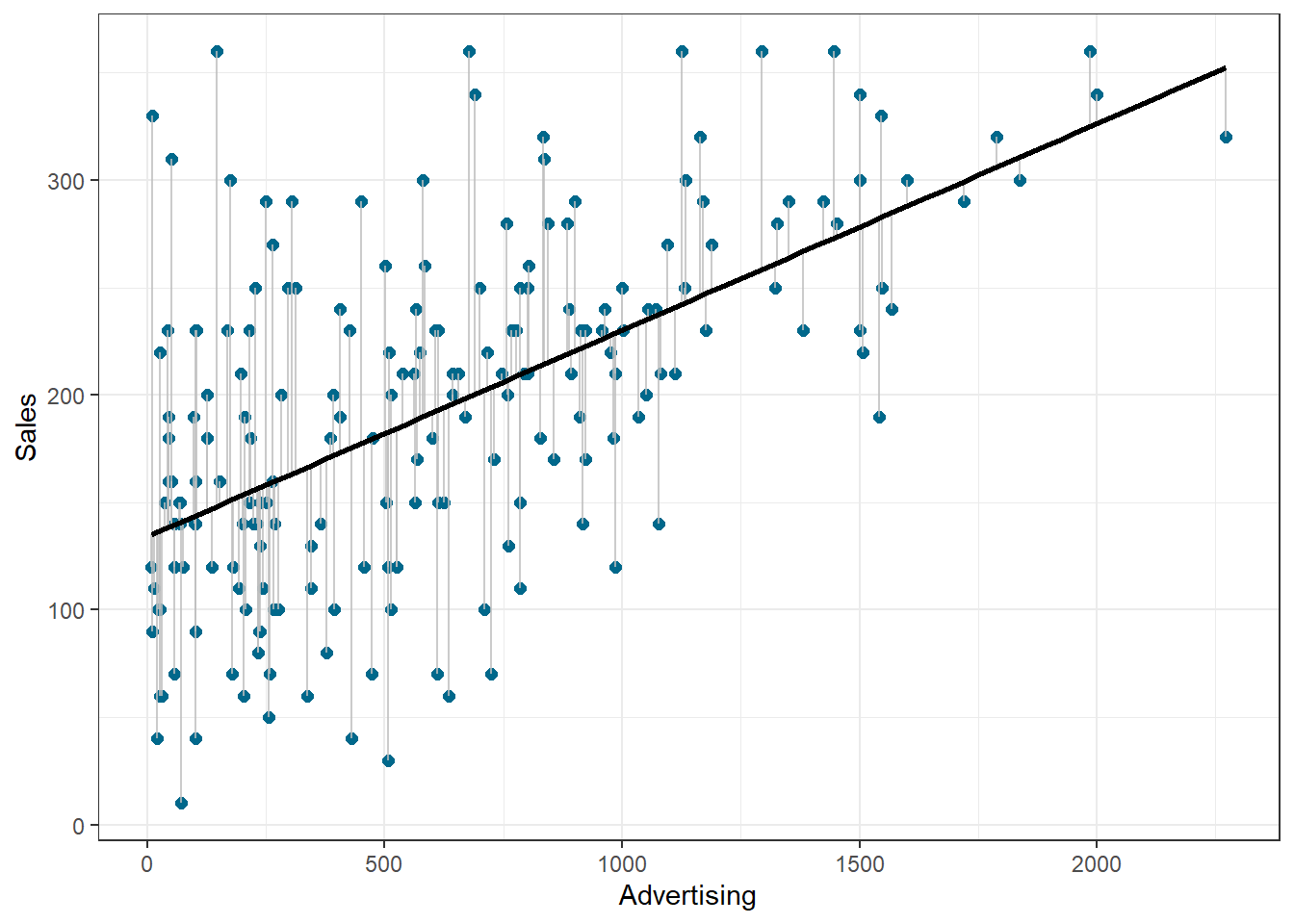

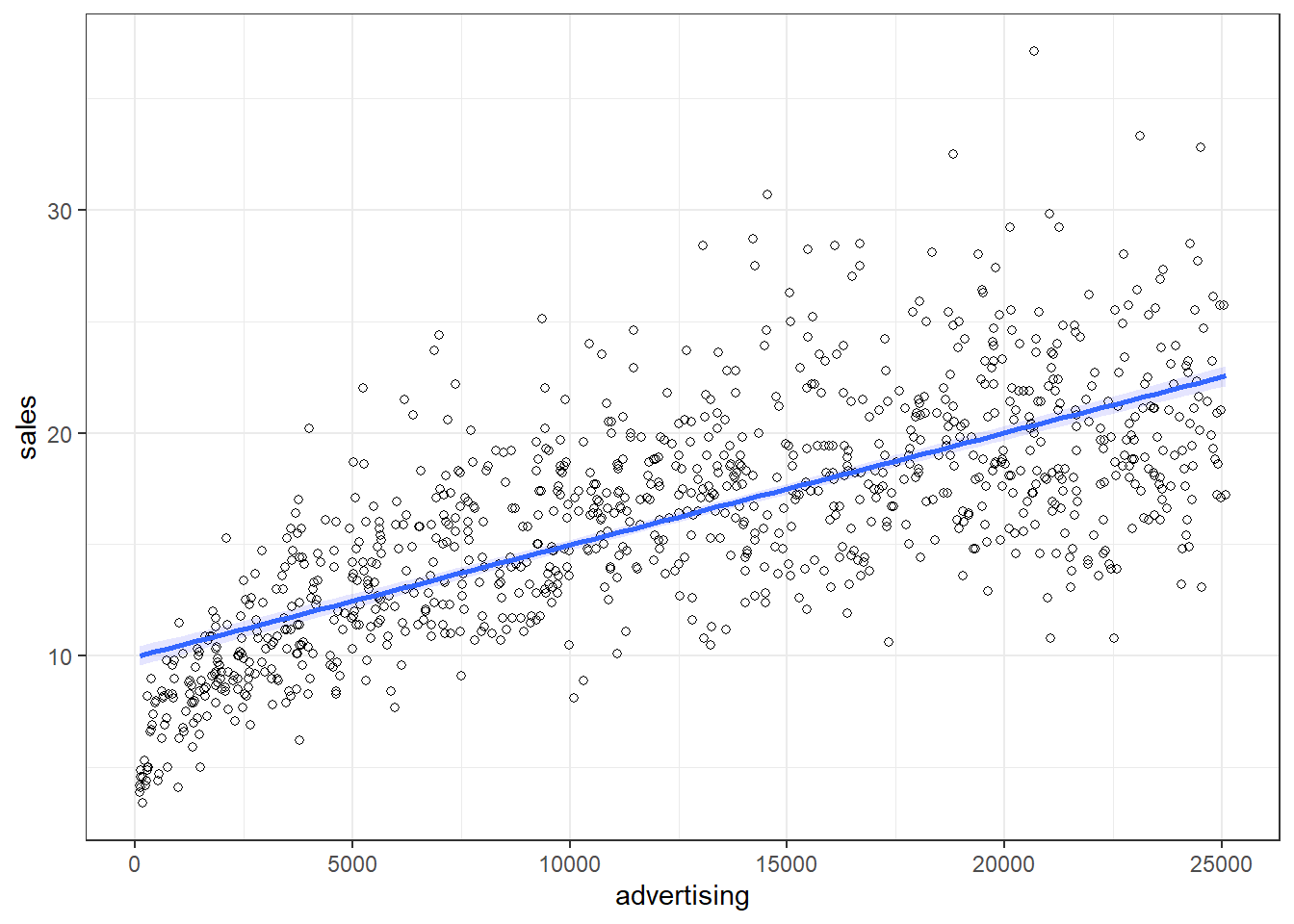

We use the hat symbol, ^, to denote the estimated value for an unknown parameter or coefficient, or to denote the predicted value of the response (sales). In practice, β0 and β1 are unknown and must be estimated from the data to make predictions. In the case of our advertising example, the data set consists of the advertising budget and product sales of 200 music songs (n = 200). Our goal is to obtain coefficient estimates such that the linear model fits the available data well. In other words, we fit a line through the scatterplot of observations and try to find the line that best describes the data. The following graph shows the scatterplot for our data, where the black line shows the regression line. The grey vertical lines shows the difference between the predicted values (the regression line) and the observed values. This difference is referred to as the residuals (“e”).

Figure 1.14: Ordinary least squares (OLS)

The estimation of the regression function is based on the idea of the method of least squares (OLS = ordinary least squares). The first step is to calculate the residuals by subtracting the observed values from the predicted values.

\(e_i = Y_i-(\beta_0+\beta_1X_i)\)

This difference is then minimized by minimizing the sum of the squared residuals:

\[\begin{equation} \sum_{i=1}^{N} e_i^2= \sum_{i=1}^{N} [Y_i-(\beta_0+\beta_1X_i)]^2\rightarrow min! \tag{7.8} \end{equation}\]

ei: Residuals (i = 1, 2, …, N)

Yi: Values of the dependent variable (i = 1, 2, …, N)

β0: Intercept

β1: Regression coefficient / slope parameters

Xni: Values of the nth independent variables and the ith observation

N: Number of observations

This is also referred to as the residual sum of squares (RSS), which you may still remember from the previous chapter on ANOVA. Now we need to choose the values for β0 and β1 that minimize RSS. So how can we derive these values for the regression coefficient? The equation for β1 is given by:

\[\begin{equation} \hat{\beta_1}=\frac{COV_{XY}}{s_x^2} \tag{7.9} \end{equation}\]

The exact mathematical derivation of this formula is beyond the scope of this script, but the intuition is to calculate the first derivative of the squared residuals with respect to β1 and set it to zero, thereby finding the β1 that minimizes the term. Using the above formula, you can easily compute β1 using the following code:

## [1] 22672.016## [1] 235860.98## [1] 0.096124486The interpretation of β1 is as follows:

For every extra Euro spent on advertising, sales can be expected to increase by 0.096 units. Or, in other words, if we increase our marketing budget by 1,000 Euros, sales can be expected to increase by 96 units.

Using the estimated coefficient for β1, it is easy to compute β0 (the intercept) as follows:

\[\begin{equation} \hat{\beta_0}=\overline{Y}-\hat{\beta_1}\overline{X} \tag{7.10} \end{equation}\]

The R code for this is:

## [1] 134.13994The interpretation of β0 is as follows:

If we spend no money on advertising, we would expect to sell 134.14 (134) units.

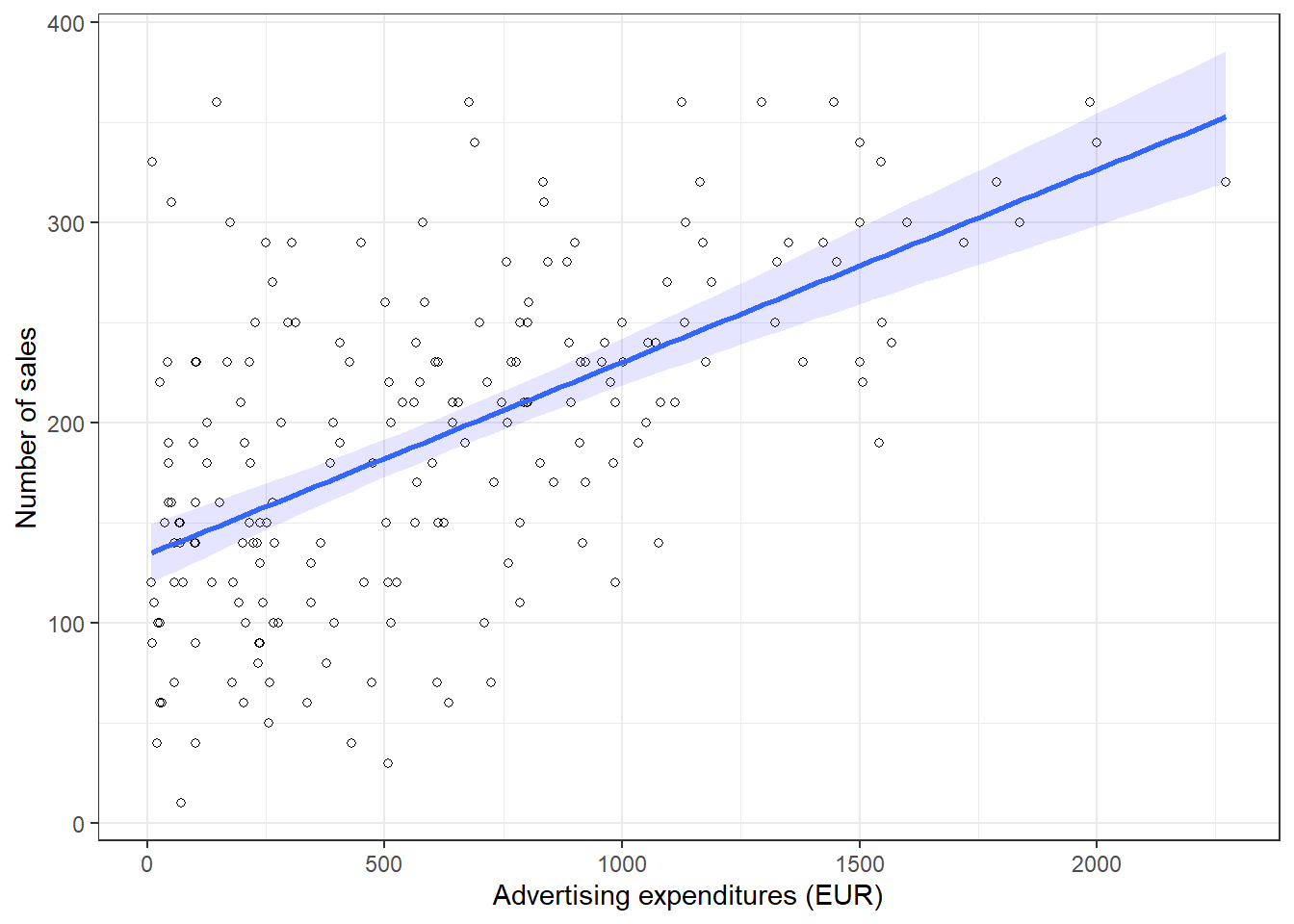

You may also verify this based on a scatterplot of the data. The following plot shows the scatterplot including the regression line, which is estimated using OLS.

ggplot(regression, mapping = aes(adspend, sales)) +

geom_point(shape = 1) +

geom_smooth(method = "lm", fill = "blue", alpha = 0.1) +

labs(x = "Advertising expenditures (EUR)", y = "Number of sales") +

theme_bw()

Figure 1.17: Scatterplot

You can see that the regression line intersects with the y-axis at 134.14, which corresponds to the expected sales level when advertising expenditure (on the x-axis) is zero (i.e., the intercept β0). The slope coefficient (β1) tells you by how much sales (on the y-axis) would increase if advertising expenditures (on the x-axis) are increased by one unit.

7.2.1.2 Significance testing

In a next step, we assess if the effect of advertising on sales is statistically significant. This means that we test the null hypothesis H0: “There is no relationship between advertising and sales” versus the alternative hypothesis H1: “The is some relationship between advertising and sales”. Or, to state this formally:

\[H_0:\beta_1=0\] \[H_1:\beta_1\ne0\]

How can we test if the effect is statistically significant? Recall the generalized equation to derive a test statistic:

\[\begin{equation} test\ statistic = \frac{effect}{error} \tag{7.11} \end{equation}\]

The effect is given by the β1 coefficient in this case. To compute the test statistic, we need to come up with a measure of uncertainty around this estimate (the error). This is because we use information from a sample to estimate the least squares line to make inferences regarding the regression line in the entire population. Since we only have access to one sample, the regression line will be slightly different every time we take a different sample from the population. This is sampling variation and it is perfectly normal! It just means that we need to take into account the uncertainty around the estimate, which is achieved by the standard error. Thus, the test statistic for our hypothesis is given by:

\[\begin{equation} t = \frac{\hat{\beta_1}}{SE(\hat{\beta_1})} \tag{7.12} \end{equation}\]

After calculating the test statistic, we compare its value to the values that we would expect to find if there was no effect based on the t-distribution. In a regression context, the degrees of freedom are given by N - p - 1 where N is the sample size and p is the number of predictors. In our case, we have 200 observations and one predictor. Thus, the degrees of freedom is 200 - 1 - 1 = 198. In the regression output below, R provides the exact probability of observing a t value of this magnitude (or larger) if the null hypothesis was true. This probability - as we already saw in chapter 6 - is the p-value. A small p-value indicates that it is unlikely to observe such a substantial association between the predictor and the outcome variable due to chance in the absence of any real association between the predictor and the outcome.

To estimate the regression model in R, you can use the lm() function. Within the function, you first specify the dependent variable (“sales”) and independent variable (“adspend”) separated by a ~ (tilde). As mentioned previously, this is known as formula notation in R. The data = regression argument specifies that the variables come from the data frame named “regression”. Strictly speaking, you use the lm() function to create an object called “simple_regression,” which holds the regression output. You can then view the results using the summary() function:

simple_regression <- lm(sales ~ adspend, data = regression) #estimate linear model

summary(simple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend, data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -152.9493 -43.7961 -0.3933 37.0404 211.8658

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 134.1399378 7.5365747 17.7985 < 0.00000000000000022 ***

## adspend 0.0961245 0.0096324 9.9793 < 0.00000000000000022 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 65.991 on 198 degrees of freedom

## Multiple R-squared: 0.33465, Adjusted R-squared: 0.33129

## F-statistic: 99.587 on 1 and 198 DF, p-value: < 0.000000000000000222Note that the estimated coefficients for β0 (134.14) and β1 (0.096) correspond to the results of our manual computation above. The associated t-values and p-values are given in the output. The t-values are larger than the critical t-values for the 95% confidence level, since the associated p-values are smaller than 0.05. In case of the coefficient for β1, this means that the probability of an association between the advertising and sales of the observed magnitude (or larger) is smaller than 0.05, if the value of β1 was, in fact, 0. This finding leads us to reject the null hypothesis of no association between advertising and sales.

The coefficients associated with the respective variables represent point estimates. To obtain a better understanding of the range of values that the coefficients could take, it is helpful to compute confidence intervals. A 95% confidence interval is defined as a range of values such that with a 95% probability, the range will contain the true unknown value of the parameter. For example, for β1, the confidence interval can be computed as.

\[\begin{equation} CI = \hat{\beta_1}\pm(t_{1-\frac{\alpha}{2}}*SE(\beta_1)) \tag{7.13} \end{equation}\]

It is easy to compute confidence intervals in R using the confint() function. You just have to provide the name of you estimated model as an argument:

## 2.5 % 97.5 %

## (Intercept) 119.277680821 149.00219480

## adspend 0.077129291 0.11511968For our model, the 95% confidence interval for β0 is [119.28,149], and the 95% confidence interval for β1 is [0.08,0.12]. Thus, we can conclude that when we do not spend any money on advertising, sales will be somewhere between 119 and 149 units on average. In addition, for each increase in advertising expenditures by one Euro, there will be an average increase in sales of between 0.08 and 0.12. If you revisit the graphic depiction of the regression model above, the uncertainty regarding the intercept and slope parameters can be seen in the confidence bounds (blue area) around the regression line.

7.2.1.3 Assessing model fit

Once we have rejected the null hypothesis in favor of the alternative hypothesis, the next step is to investigate how well the model represents (“fits”) the data. How can we assess the model fit?

- First, we calculate the fit of the most basic model (i.e., the mean)

- Then, we calculate the fit of the best model (i.e., the regression model)

- A good model should fit the data significantly better than the basic model

- R2: Represents the percentage of the variation in the outcome that can be explained by the model

- The F-ratio measures how much the model has improved the prediction of the outcome compared to the level of inaccuracy in the model

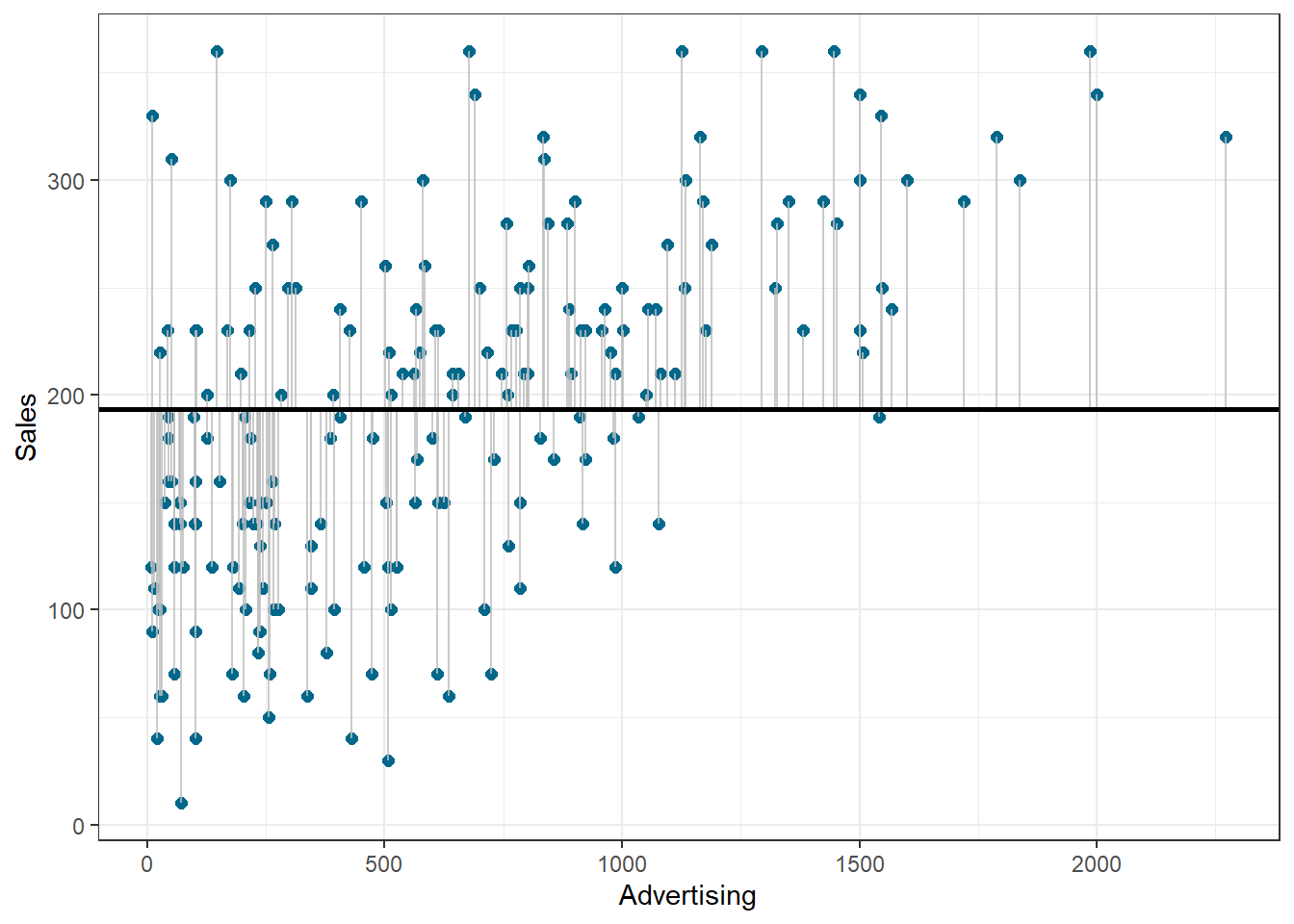

Similar to ANOVA, the calculation of model fit statistics relies on estimating the different sum of squares values. SST is the difference between the observed data and the mean value of Y (aka. total variation). In the absence of any other information, the mean value of Y (\(\overline{Y}\)) represents the best guess on where a particular observation \(Y_{i}\) at a given level of advertising will fall:

\[\begin{equation} SS_T= \sum_{i=1}^{N} (Y_i-\overline{Y})^2 \tag{7.14} \end{equation}\]

The following graph shows the total sum of squares:

Figure 1.20: Total sum of squares

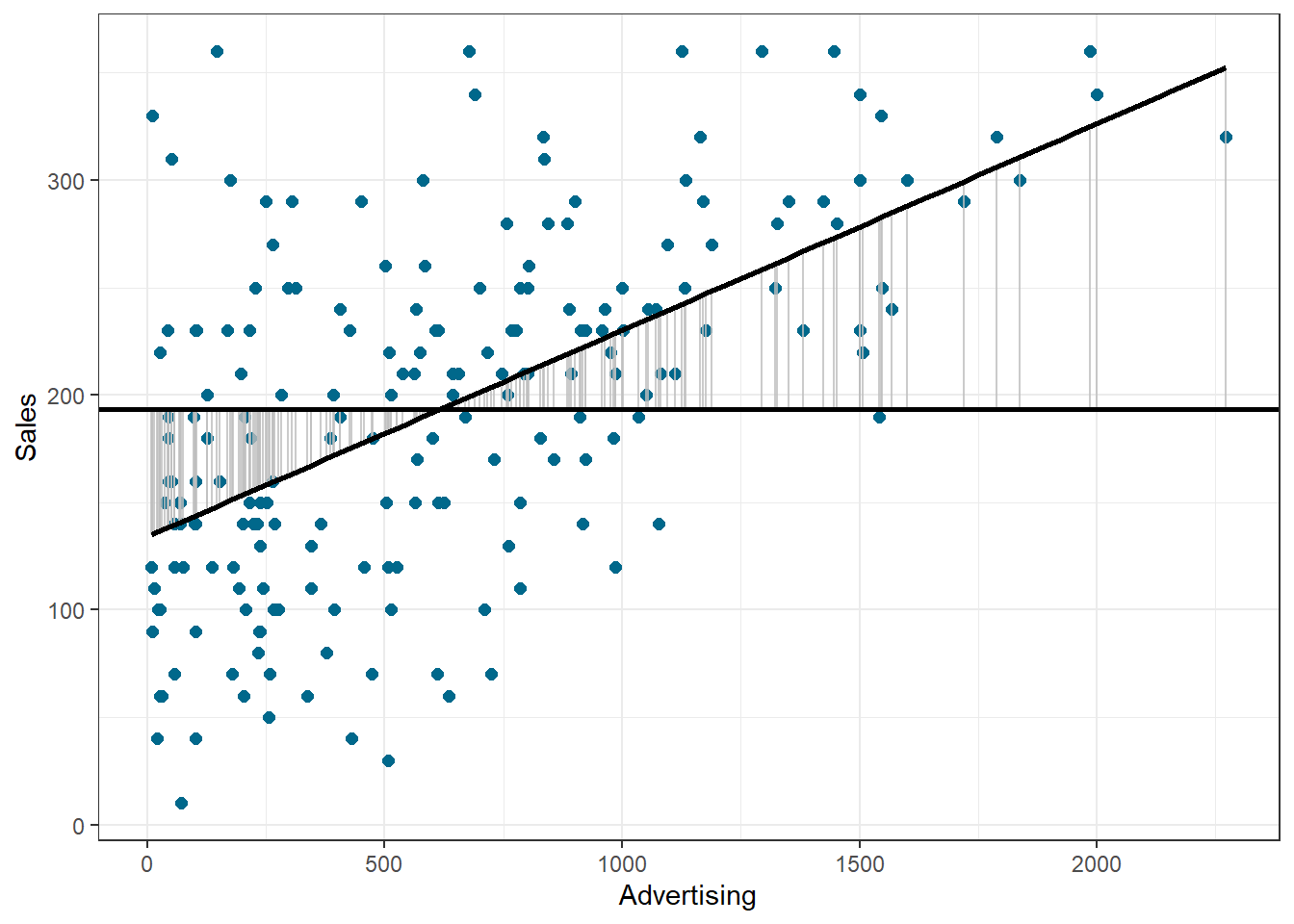

Based on our linear model, the best guess about the sales level at a given level of advertising is the predicted value \(\hat{Y}_i\). The model sum of squares (SSM) therefore has the mathematical representation:

\[\begin{equation} SS_M= \sum_{i=1}^{N} (\hat{Y}_i-\overline{Y})^2 \tag{7.15} \end{equation}\]

The model sum of squares represents the improvement in prediction resulting from using the regression model rather than the mean of the data. The following graph shows the model sum of squares for our example:

Figure 1.21: Ordinary least squares (OLS)

The residual sum of squares (SSR) is the difference between the observed data points (\(Y_{i}\)) and the predicted values along the regression line (\(\hat{Y}_{i}\)), i.e., the variation not explained by the model.

\[\begin{equation} SS_R= \sum_{i=1}^{N} ({Y}_{i}-\hat{Y}_{i})^2 \tag{7.16} \end{equation}\]

The following graph shows the residual sum of squares for our example:

Figure 1.22: Ordinary least squares (OLS)

Based on these statistics, we can determine have well the model fits the data as we will see next.

R-squared

The R2 statistic represents the proportion of variance that is explained by the model and is computed as:

\[\begin{equation} R^2= \frac{SS_M}{SS_T} \tag{7.16} \end{equation}\]

It takes values between 0 (very bad fit) and 1 (very good fit). Note that when the goal of your model is to predict future outcomes, a “too good” model fit can pose severe challenges. The reason is that the model might fit your specific sample so well, that it will only predict well within the sample but not generalize to other samples. This is called overfitting and it shows that there is a trade-off between model fit and out-of-sample predictive ability of the model, if the goal is to predict beyond the sample. We will come back to this point later in this chapter.

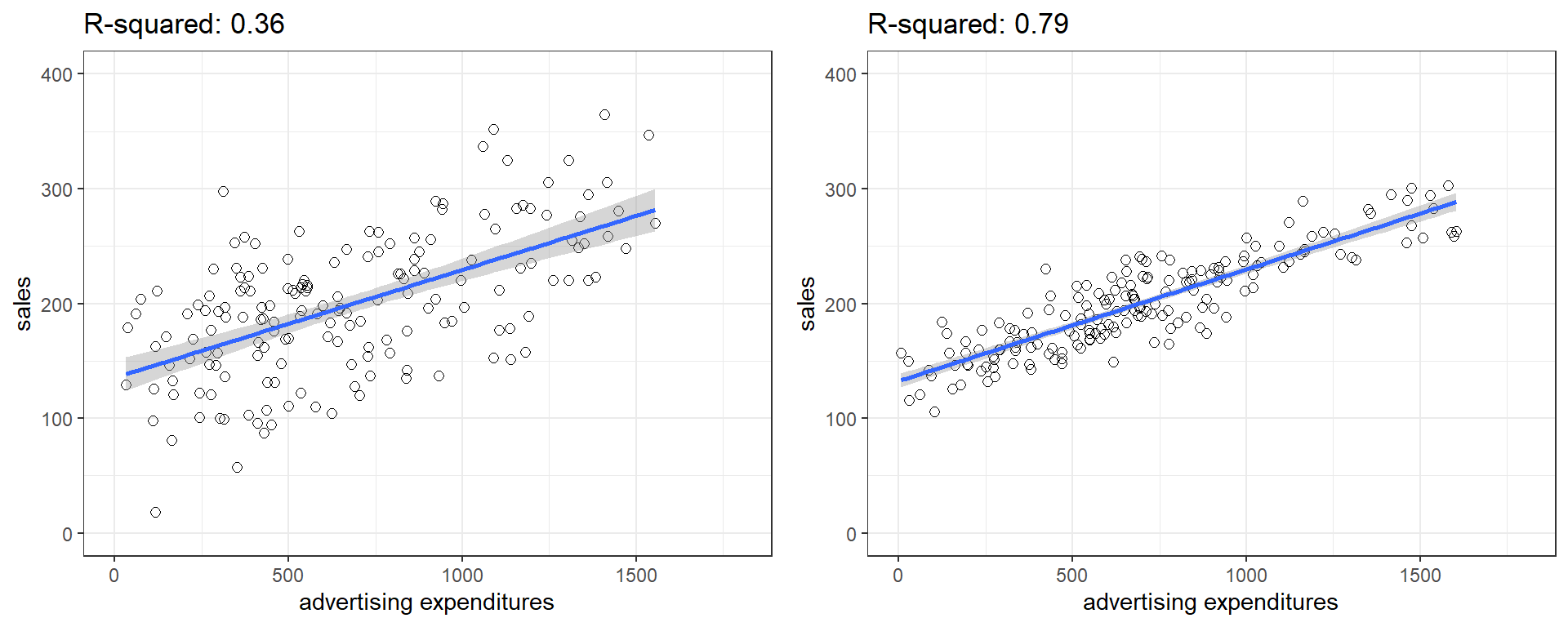

You can get a first impression of the fit of the model by inspecting the scatter plot as can be seen in the plot below. If the observations are highly dispersed around the regression line (left plot), the fit will be lower compared to a data set where the values are less dispersed (right plot).

Figure 1.23: Good vs. bad model fit

The R2 statistic is reported in the regression output (see above). However, you could also extract the relevant sum of squares statistics from the regression object using the anova() function to compute it manually:

## Analysis of Variance Table

##

## Response: sales

## Df Sum Sq Mean Sq F value Pr(>F)

## adspend 1 433688 433688 99.6 <0.0000000000000002 ***

## Residuals 198 862264 4355

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Now we can compute R2 in the same way that we have computed Eta2 in the last section:

r2 <- anova(simple_regression)$'Sum Sq'[1]/(anova(simple_regression)$'Sum Sq'[1] + anova(simple_regression)$'Sum Sq'[2]) #compute R2

r2## [1] 0.33Adjusted R-squared

Due to the way the R2 statistic is calculated, it will never decrease if a new explanatory variable is introduced into the model. This means that every new independent variable either doesn’t change the R2 or increases it, even if there is no real relationship between the new variable and the dependent variable. Hence, one could be tempted to just add as many variables as possible to increase the R2 and thus obtain a “better” model. However, this actually only leads to more noise and therefore a worse model.

To account for this, there exists a test statistic closely related to the R2, the adjusted R2. It can be calculated as follows:

\[\begin{equation} \overline{R^2} = 1 - (1 - R^2)\frac{n-1}{n - k - 1} \tag{7.17} \end{equation}\]

where n is the total number of observations and k is the total number of explanatory variables. The adjusted R2 is equal to or less than the regular R2 and can be negative. It will only increase if the added variable adds more explanatory power than one would expect by pure chance. Essentially, it contains a “penalty” for including unnecessary variables and therefore favors more parsimonious models. As such, it is a measure of suitability, good for comparing different models and is very useful in the model selection stage of a project. In R, the standard lm() function automatically also reports the adjusted R2 as you can see above.

F-test

Similar to the ANOVA in chapter 6, another significance test is the F-test, which tests the null hypothesis:

\[H_0:R^2=0\]

Or, to state it slightly differently:

\[H_0:\beta_1=\beta_2=\beta_3=\beta_k=0\]

This means that, similar to the ANOVA, we test whether any of the included independent variables has a significant effect on the dependent variable. So far, we have only included one independent variable, but we will extend the set of predictor variables below.

The F-test statistic is calculated as follows:

\[\begin{equation} F=\frac{\frac{SS_M}{k}}{\frac{SS_R}{(n-k-1)}}=\frac{MS_M}{MS_R} \tag{7.16} \end{equation}\]

which has a F distribution with k (number of predictors) and (n — k — 1) degrees of freedom. In other words, you divide the systematic (“explained”) variation due to the predictor variables by the unsystematic (“unexplained”) variation.

The result of the F-test is provided in the regression output. However, you might manually compute the F-test using the ANOVA results from the model:

## Analysis of Variance Table

##

## Response: sales

## Df Sum Sq Mean Sq F value Pr(>F)

## adspend 1 433688 433688 99.6 <0.0000000000000002 ***

## Residuals 198 862264 4355

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1f_calc <- anova(simple_regression)$'Mean Sq'[1]/anova(simple_regression)$'Mean Sq'[2] #compute F

f_calc## [1] 100## [1] 3.9## [1] TRUE7.2.1.4 Using the model

After fitting the model, we can use the estimated coefficients to predict sales for different values of advertising. Suppose you want to predict sales for a new product, and the company plans to spend 800 Euros on advertising. How much will it sell? You can easily compute this either by hand:

\[\hat{sales}=134.134 + 0.09612*800=211\]

… or by extracting the estimated coefficients from the model summary:

summary(simple_regression)$coefficients[1,1] + # the intercept

summary(simple_regression)$coefficients[2,1]*800 # the slope * 800## [1] 211The predicted value of the dependent variable is 211 units, i.e., the product will (on average) sell 211 units.

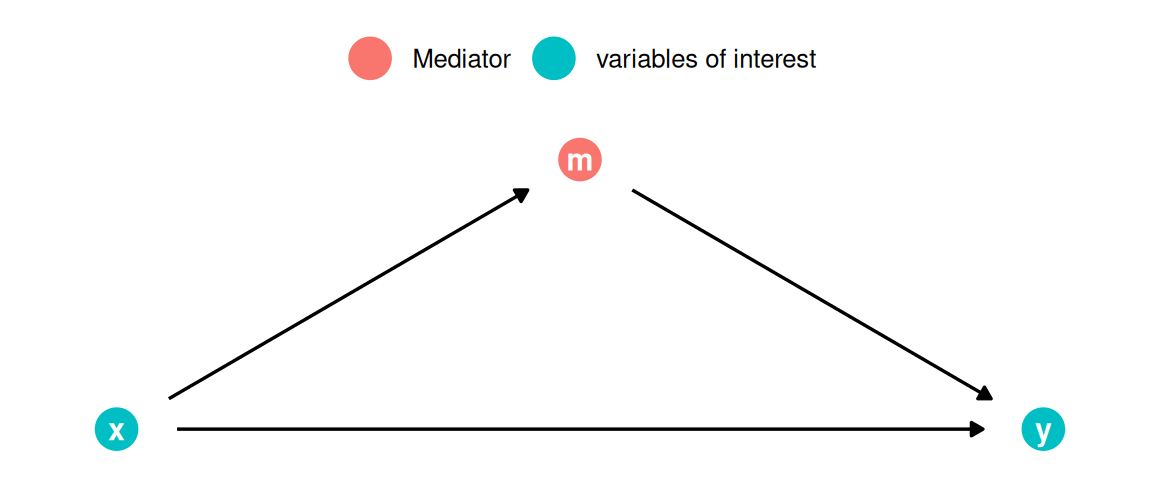

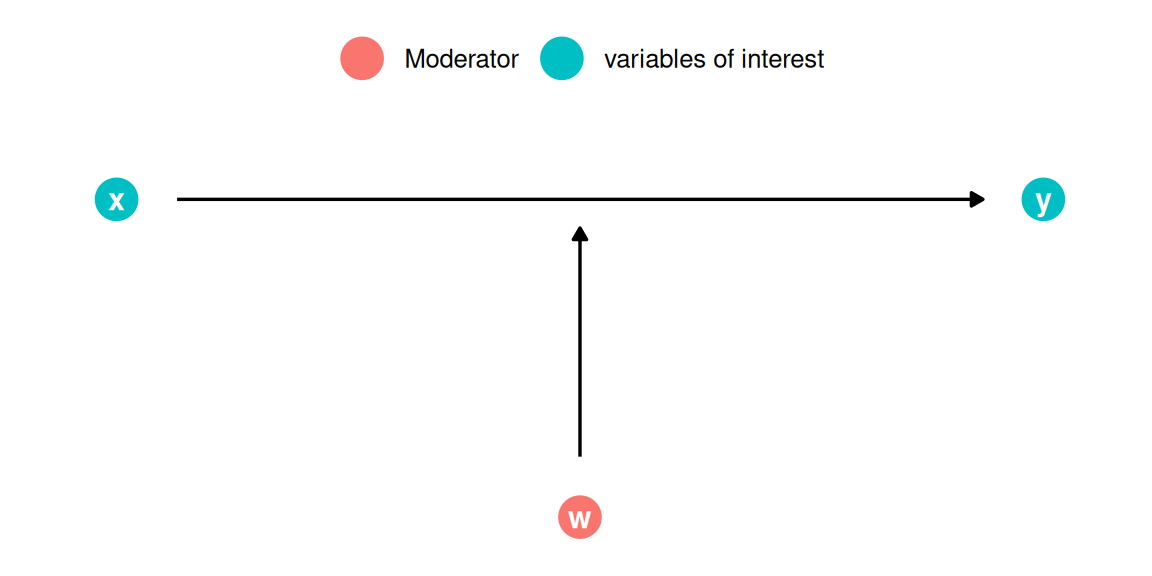

7.2.2 Multiple linear regression

Multiple linear regression is a statistical technique that simultaneously tests the relationships between two or more independent variables and an interval-scaled dependent variable. The general form of the equation is given by:

\[\begin{equation} Y=(\beta_0+\beta_1*X_1+\beta_2*X_2+\beta_n*X_n)+\epsilon \tag{7.5} \end{equation}\]

Again, we aim to find the linear combination of predictors that correlate maximally with the outcome variable. Note that if you change the composition of predictors, the partial regression coefficient of an independent variable will be different from that of the bivariate regression coefficient. This is because the regressors are usually correlated, and any variation in Y that was shared by X1 and X2 was attributed to X1. The interpretation of the partial regression coefficients is the expected change in Y when X is changed by one unit and all other predictors are held constant.

Let’s extend the previous example. Say, in addition to the influence of advertising, you are interested in estimating the influence of radio airplay on the number of album downloads. The corresponding equation would then be given by:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\beta_2*airplay+\epsilon \tag{7.6} \end{equation}\]

The words “adspend” and “airplay” represent data that we have observed on advertising expenditures and number of radio plays, and β1 and β2 represent the unknown relationship between sales and advertising expenditures and radio airplay, respectively. The corresponding coefficients tell you by how much sales will increase for an additional Euro spent on advertising (when radio airplay is held constant) and by how much sales will increase for an additional radio play (when advertising expenditures are held constant). Thus, we can make predictions about album sales based not only on advertising spending, but also on radio airplay.

With several predictors, the partitioning of sum of squares is the same as in the bivariate model, except that the model is no longer a 2-D straight line. With two predictors, the regression line becomes a 3-D regression plane. In our example:

Figure 4.1: Regression plane

Like in the bivariate case, the plane is fitted to the data with the aim to predict the observed data as good as possible. The deviation of the observations from the plane represent the residuals (the error we make in predicting the observed data from the model). Note that this is conceptually the same as in the bivariate case, except that the computation is more complex (we won’t go into details here). The model is fairly easy to plot using a 3-D scatterplot, because we only have two predictors. While multiple regression models that have more than two predictors are not as easy to visualize, you may apply the same principles when interpreting the model outcome:

- Total sum of squares (SST) is still the difference between the observed data and the mean value of Y (total variation)

- Residual sum of squares (SSR) is still the difference between the observed data and the values predicted by the model (unexplained variation)

- Model sum of squares (SSM) is still the difference between the values predicted by the model and the mean value of Y (explained variation)

- R measures the multiple correlation between the predictors and the outcome

- R2 is the amount of variation in the outcome variable explained by the model

Estimating multiple regression models is straightforward using the lm() function. You just need to separate the individual predictors on the right hand side of the equation using the + symbol. For example, the model:

\[\begin{equation} Sales=\beta_0+\beta_1*adspend+\beta_2*airplay+\beta_3*starpower+\epsilon \tag{7.6} \end{equation}\]

could be estimated as follows:

multiple_regression <- lm(sales ~ adspend + airplay + starpower, data = regression) #estimate linear model

summary(multiple_regression) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower, data = regression)

##

## Residuals:

## Min 1Q Median 3Q Max

## -121.32 -28.34 -0.45 28.97 144.13

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -26.61296 17.35000 -1.53 0.13

## adspend 0.08488 0.00692 12.26 < 0.0000000000000002 ***

## airplay 3.36743 0.27777 12.12 < 0.0000000000000002 ***

## starpower 11.08634 2.43785 4.55 0.0000095 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 47 on 196 degrees of freedom

## Multiple R-squared: 0.665, Adjusted R-squared: 0.66

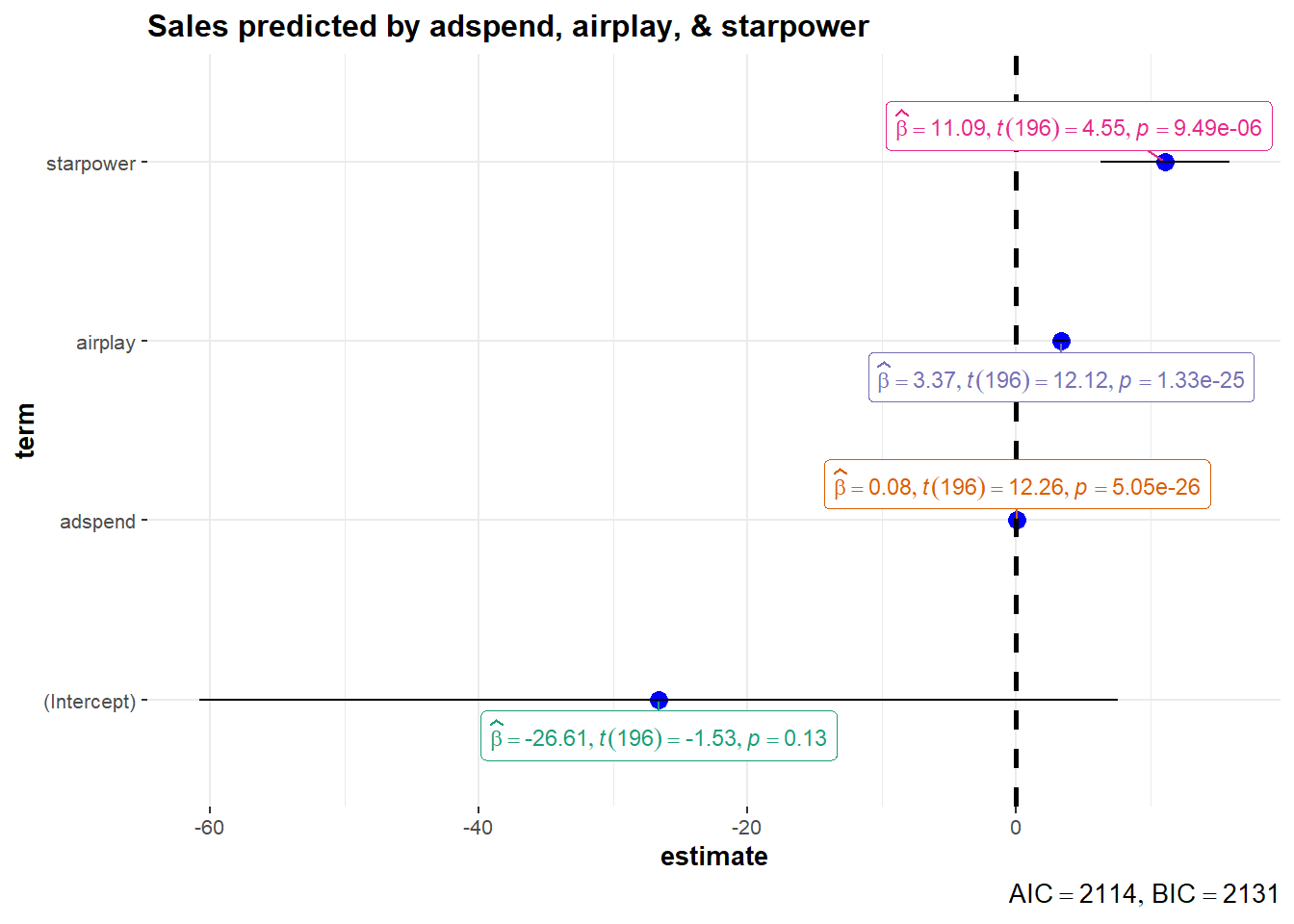

## F-statistic: 129 on 3 and 196 DF, p-value: <0.0000000000000002The interpretation of the coefficients is as follows:

- adspend (β1): when advertising expenditures increase by 1 Euro, sales will increase by 0.085 units

- airplay (β2): when radio airplay increases by 1 play per week, sales will increase by 3.367 units

- starpower (β3): when the number of previous albums increases by 1, sales will increase by 11.086 units

The associated t-values and p-values are also given in the output. You can see that the p-values are smaller than 0.05 for all three coefficients. Hence, all effects are “significant”. This means that if the null hypothesis was true (i.e., there was no effect between the variables and sales), the probability of observing associations of the estimated magnitudes (or larger) is very small (e.g., smaller than 0.05).

Again, to get a better feeling for the range of values that the coefficients could take, it is helpful to compute confidence intervals.

## 2.5 % 97.5 %

## (Intercept) -60.830 7.604

## adspend 0.071 0.099

## airplay 2.820 3.915

## starpower 6.279 15.894What does this tell you? Recall that a 95% confidence interval is defined as a range of values such that with a 95% probability, the range will contain the true unknown value of the parameter. For example, for β3, the confidence interval is [6.2785522,15.8941182]. Thus, although we have computed a point estimate of 11.086 for the effect of starpower on sales based on our sample, the effect might actually just as well take any other value within this range, considering the sample size and the variability in our data. You could also visualize the output from your regression model including the confidence intervals using the ggstatsplot package as follows:

library(ggstatsplot)

ggcoefstats(x = multiple_regression,

title = "Sales predicted by adspend, airplay, & starpower")

Figure 4.3: Confidence intervals for regression model

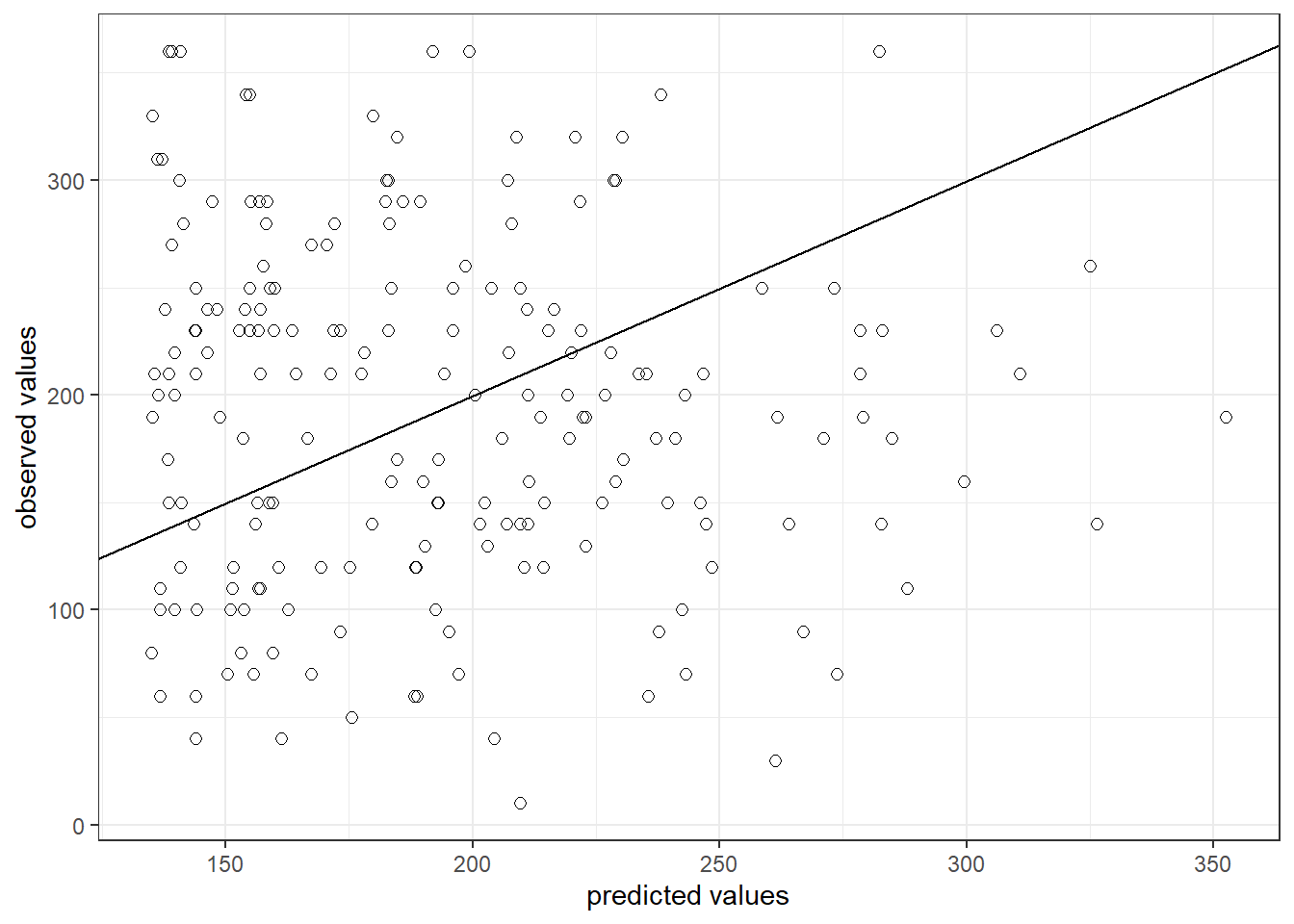

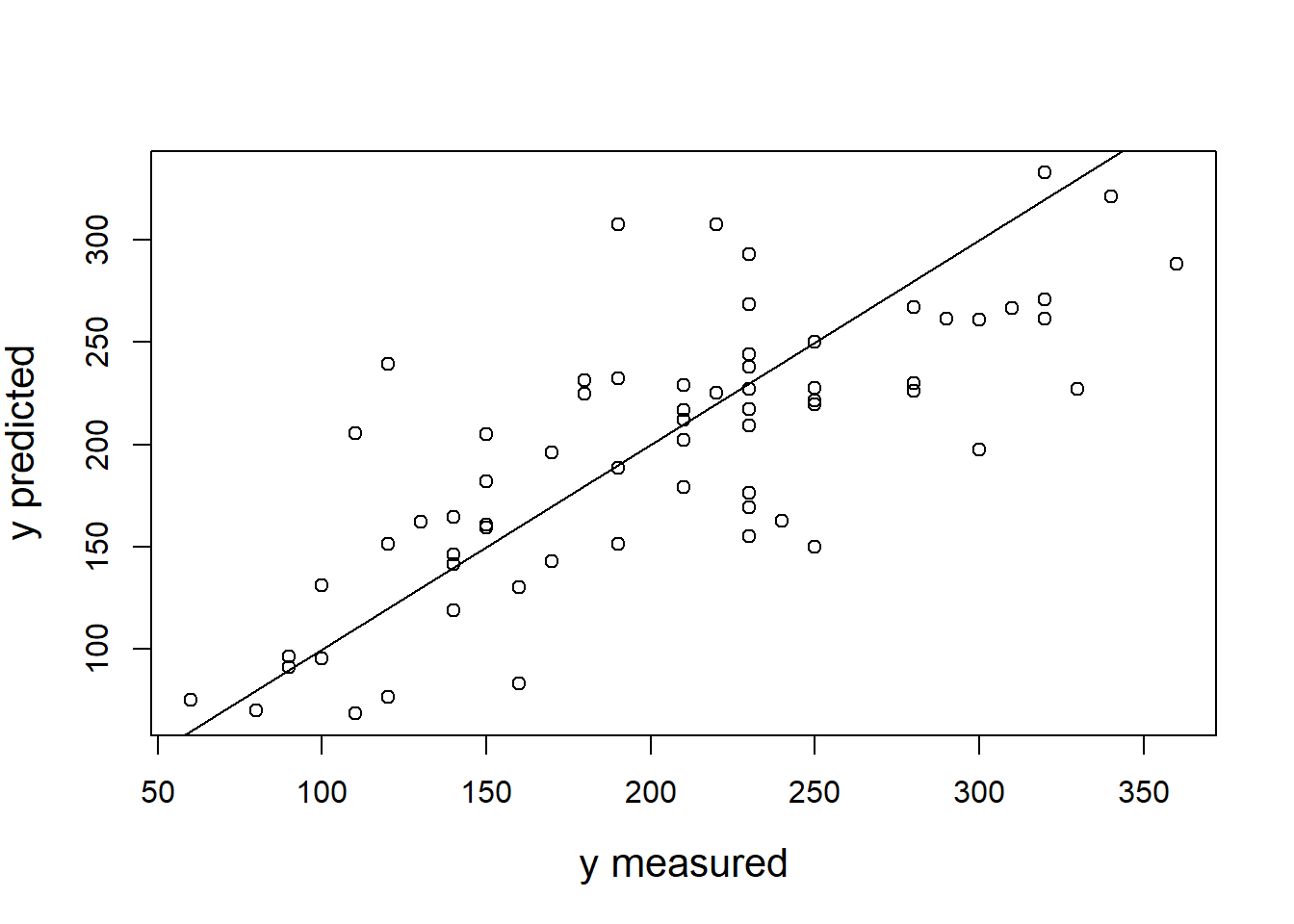

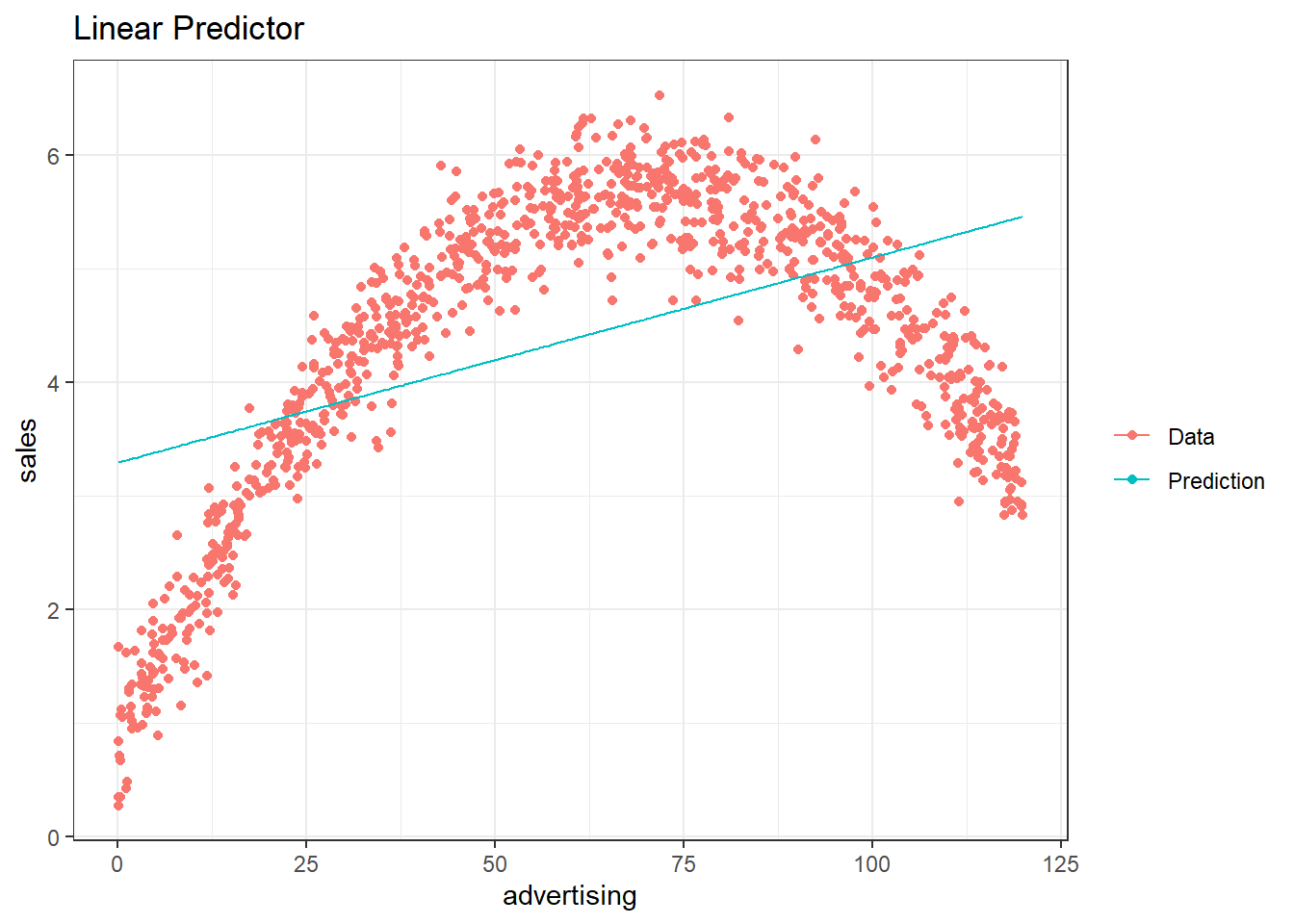

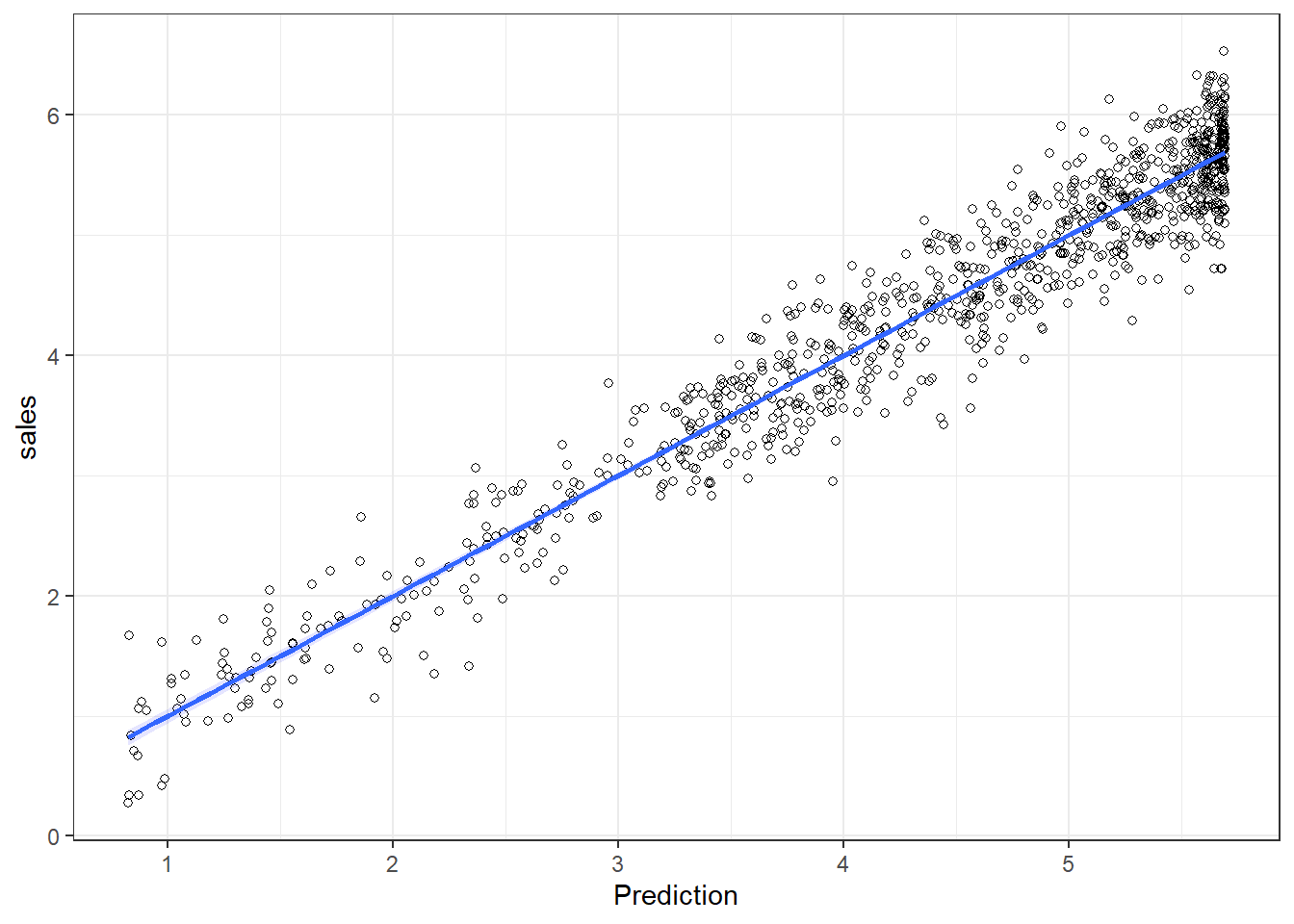

The output also tells us that 66.4667687% of the variation can be explained by our model. You may also visually inspect the fit of the model by plotting the predicted values against the observed values. We can extract the predicted values using the predict() function. So let’s create a new variable yhat, which contains those predicted values.

We can now use this variable to plot the predicted values against the observed values. In the following plot, the model fit would be perfect if all points would fall on the diagonal line. The larger the distance between the points and the line, the worse the model fit. In other words, if all points would fall exactly on the diagonal line, the model would perfectly predict the observed values.

ggplot(regression,aes(yhat,sales)) +

geom_point(size=2,shape=1) + #Use hollow circles

scale_x_continuous(name="predicted values") +

scale_y_continuous(name="observed values") +

geom_abline(intercept = 0, slope = 1) +

theme_bw()

Figure 6.5: Model fit

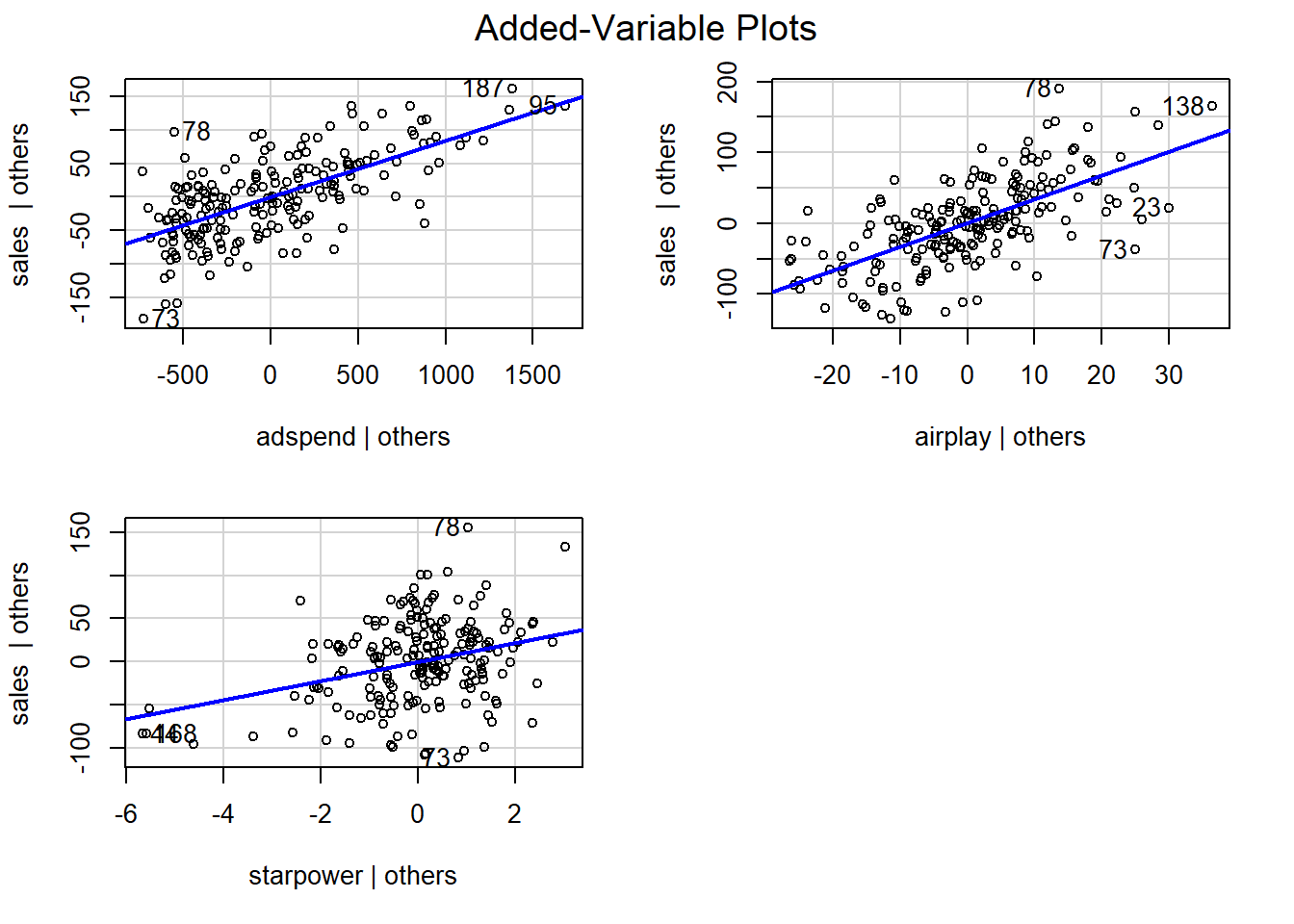

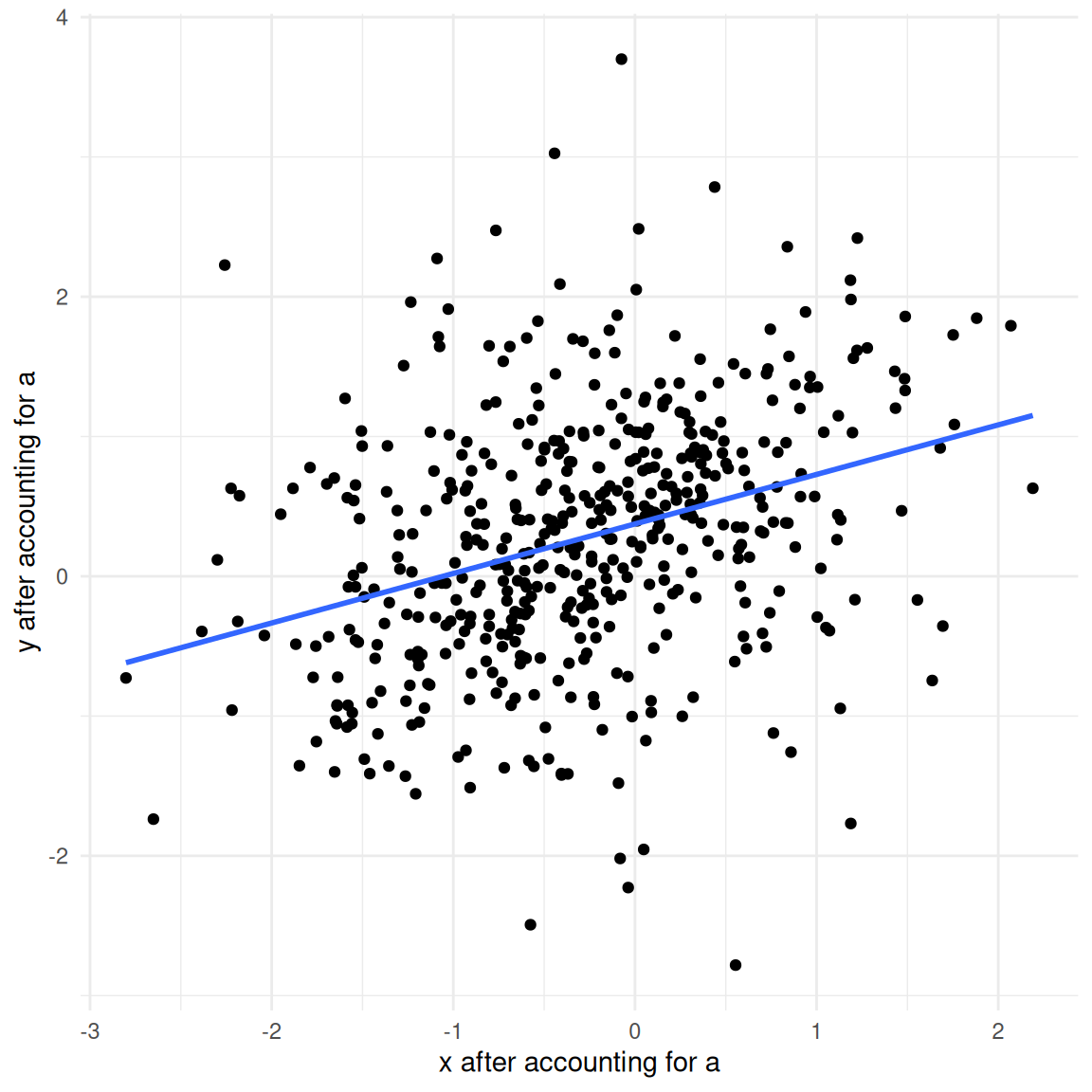

Partial plots

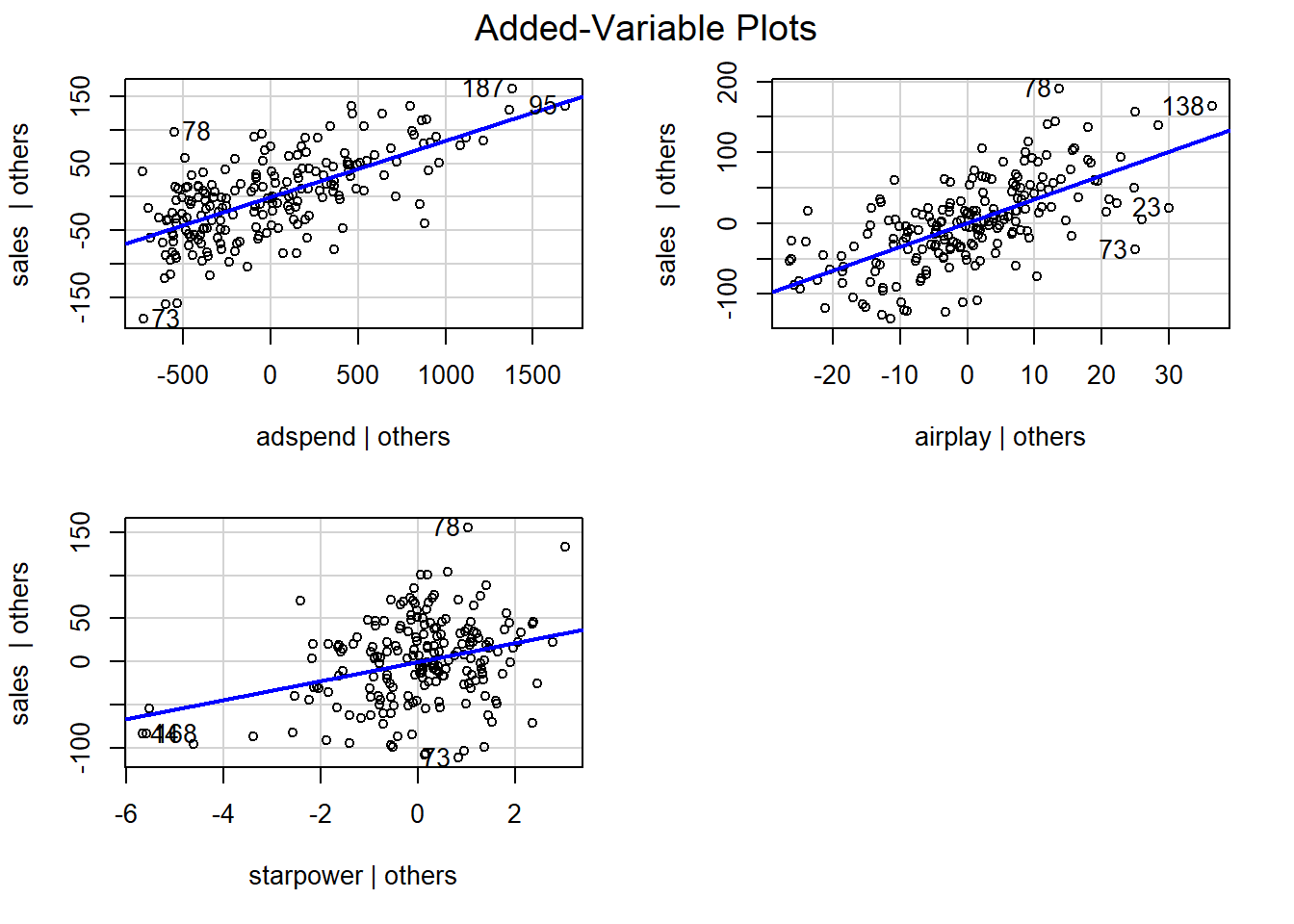

In the context of a simple linear regression (i.e., with a single independent variable), a scatter plot of the dependent variable against the independent variable provides a good indication of the nature of the relationship. If there is more than one independent variable, however, things become more complicated. The reason is that although the scatter plot still show the relationship between the two variables, it does not take into account the effect of the other independent variables in the model. Partial regression plot show the effect of adding another variable to a model that already controls for the remaining variables in the model. In other words, it is a scatterplot of the residuals of the outcome variable and each predictor when both variables are regressed separately on the remaining predictors. As an example, consider the effect of advertising expenditures on sales. In this case, the partial plot would show the effect of adding advertising expenditures as an explanatory variable while controlling for the variation that is explained by airplay and starpower in both variables (sales and advertising). Think of it as the purified relationship between advertising and sales that remains after controlling for other factors. The partial plots can easily be created using the avPlots() function from the car package:

Figure 6.7: Partial plots

Using the model

After fitting the model, we can use the estimated coefficients to predict sales for different values of advertising, airplay, and starpower. Suppose you would like to predict sales for a new music album with advertising expenditures of 800, airplay of 30 and starpower of 5. How much will it sell?

\[\hat{sales}=−26.61 + 0.084 * 800 + 3.367*30 + 11.08 ∗ 5= 197.74\]

… or by extracting the estimated coefficients:

summary(multiple_regression)$coefficients[1,1] +

summary(multiple_regression)$coefficients[2,1]*800 +

summary(multiple_regression)$coefficients[3,1]*30 +

summary(multiple_regression)$coefficients[4,1]*5## [1] 198The predicted value of the dependent variable is 198 units, i.e., the product will sell 198 units.

Comparing effects

Using the output from the regression model above, it is difficult to compare the effects of the independent variables because they are all measured on different scales (Euros, radio plays, releases). Standardized regression coefficients can be used to judge the relative importance of the predictor variables. Standardization is achieved by multiplying the unstandardized coefficient by the ratio of the standard deviations of the independent and dependent variables:

\[\begin{equation} B_{k}=\beta_{k} * \frac{s_{x_k}}{s_y} \tag{7.18} \end{equation}\]

Hence, the standardized coefficient will tell you by how many standard deviations the outcome will change as a result of a one standard deviation change in the predictor variable. Standardized coefficients can be easily computed using the lm.beta() function from the lm.beta package.

##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower, data = regression)

##

## Standardized Coefficients::

## (Intercept) adspend airplay starpower

## NA 0.51 0.51 0.19The results show that for adspend and airplay, a change by one standard deviation will result in a 0.51 standard deviation change in sales, whereas for starpower, a one standard deviation change will only lead to a 0.19 standard deviation change in sales. Hence, while the effects of adspend and airplay are comparable in magnitude, the effect of starpower is less strong.

7.3 Potential problems

Once you have built and estimated your model it is important to run diagnostics to ensure that the results are accurate. In the following section we will discuss common problems.

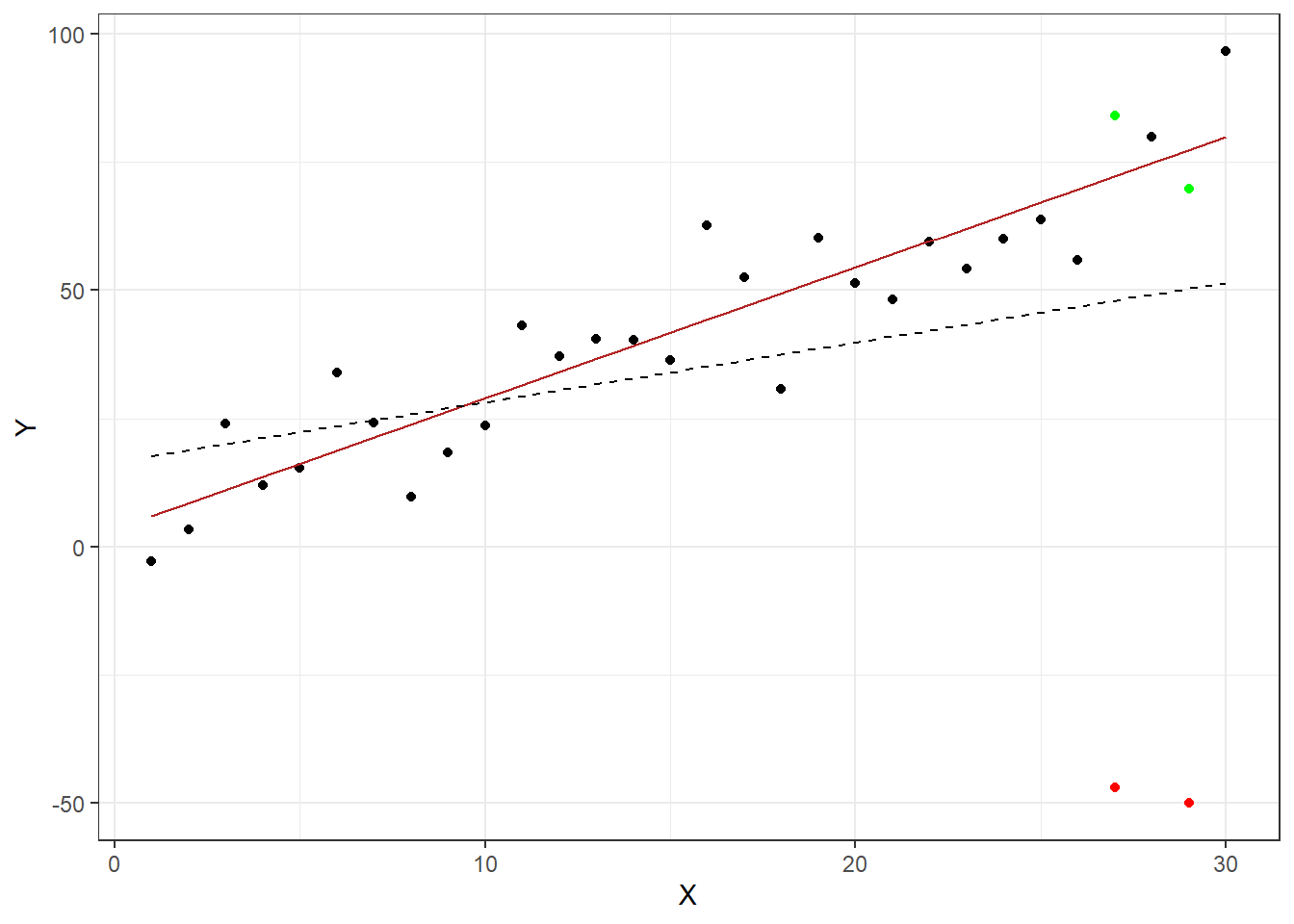

7.3.1 Outliers

Outliers are data points that differ vastly from the trend. They can introduce bias into a model due to the fact that they alter the parameter estimates. Consider the example below. A linear regression was performed twice on the same data set, except during the second estimation the two green points were changed to be outliers by being moved to the positions indicated in red. The solid red line is the regression line based on the unaltered data set, while the dotted line was estimated using the altered data set. As you can see the second regression would lead to different conclusions than the first. Therefore it is important to identify outliers and further deal with them.

Figure 4.6: Effects of outliers

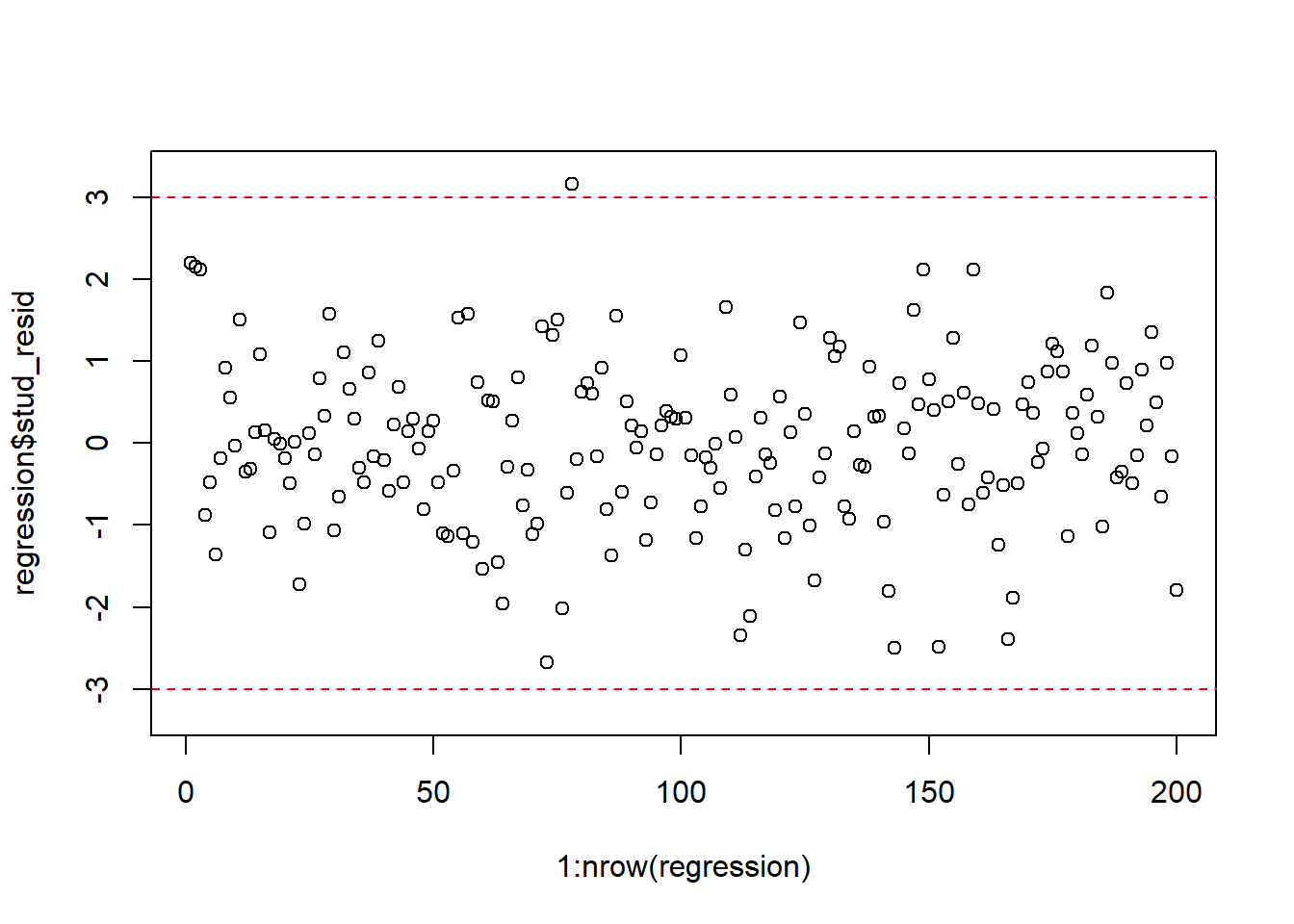

One quick way to visually detect outliers is by creating a scatterplot (as above) to see whether anything seems off. Another approach is to inspect the studentized residuals. If there are no outliers in your data, about 95% will be between -2 and 2, as per the assumptions of the normal distribution. Values well outside of this range are unlikely to happen by chance and warrant further inspection. As a rule of thumb, observations whose studentized residuals are greater than 3 in absolute values are potential outliers.

The studentized residuals can be obtained in R with the function rstudent(). We can use this function to create a new variable that contains the studentized residuals e music sales regression from before yields the following residuals:

A good way to visually inspect the studentized residuals is to plot them in a scatterplot and roughly check if most of the observations are within the -3, 3 bounds.

plot(1:nrow(regression),regression$stud_resid, ylim=c(-3.3,3.3)) #create scatterplot

abline(h=c(-3,3),col="red",lty=2) #add reference lines

Figure 4.8: Plot of the studentized residuals

To identify potentially influential observations in our data set, we can apply a filter to our data:

After a detailed inspection of the potential outliers, you might decide to delete the affected observations from the data set or not. If an outlier has resulted from an error in data collection, then you might simply remove the observation. However, even though data may have extreme values, they might not be influential to determine a regression line. That means, the results wouldn’t be much different if we either include or exclude them from analysis. This means that the decision of whether to exclude an outlier or not is closely related to the question whether this observation is an influential observation, as will be discussed next.

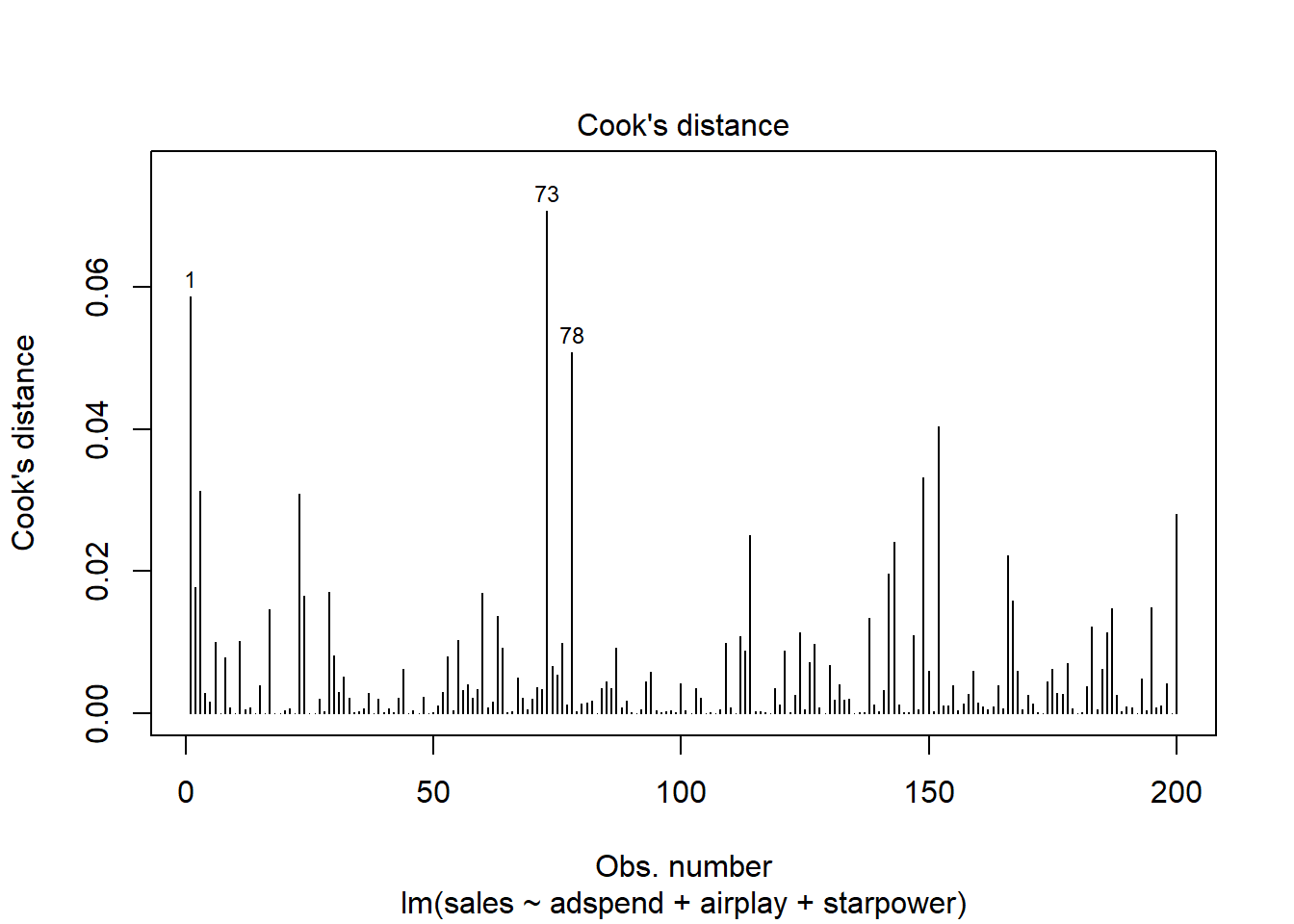

7.3.2 Influential observations

Related to the issue of outliers is that of influential observations, meaning observations that exert undue influence on the parameters. It is possible to determine whether or not the results are driven by an influential observation by calculating how far the predicted values for your data would move if the model was fitted without this particular observation. This calculated total distance is called Cook’s distance. To identify influential observations, we can inspect the respective plots created from the model output. A rule of thumb to determine whether an observation should be classified as influential or not is to look for observation with a Cook’s distance > 1 (although opinions vary on this). The following plot can be used to see the Cook’s distance associated with each data point:

Figure 7.1: Cook’s distance

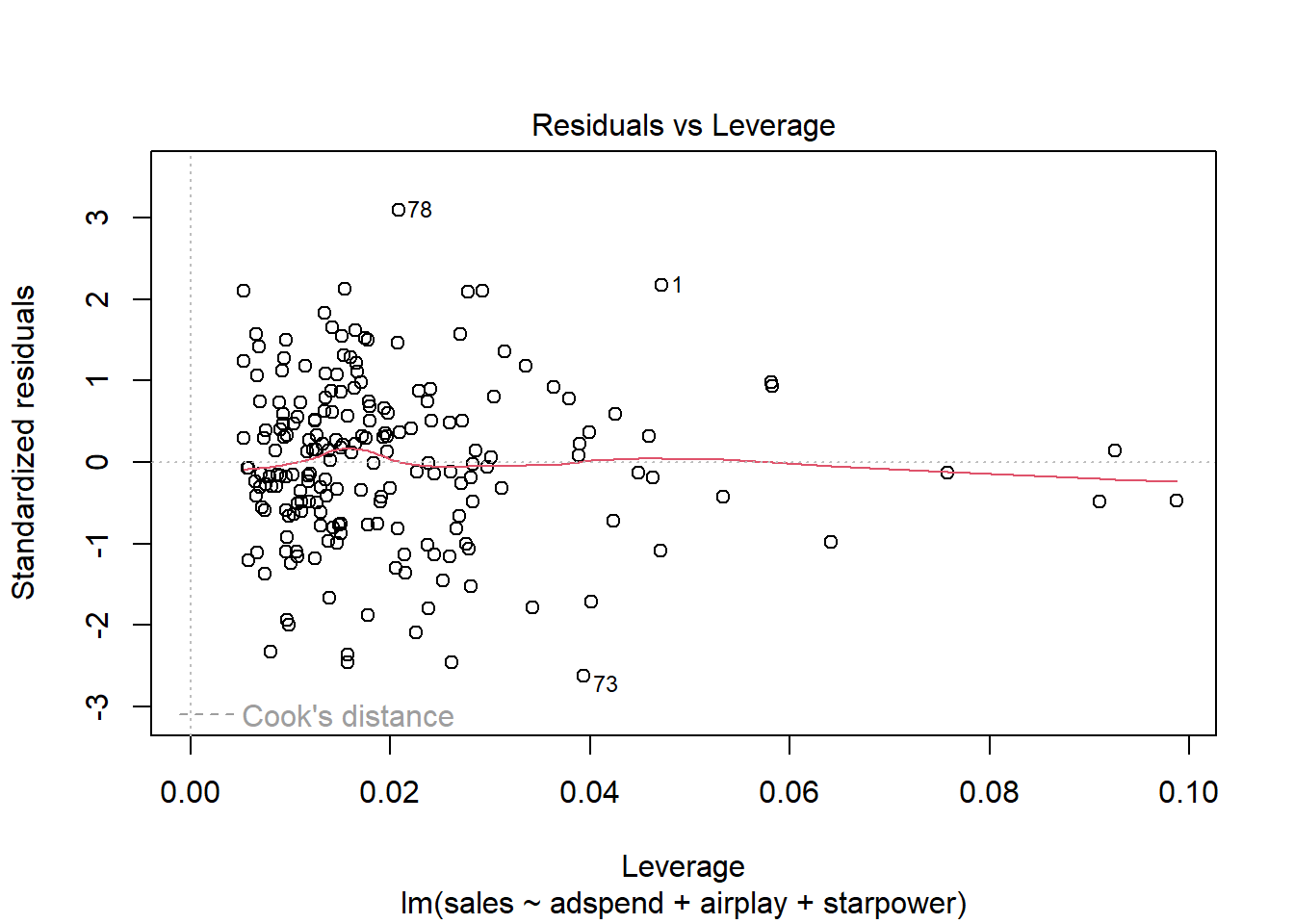

It is easy to see that none of the Cook’s distance values is close to the critical value of 1. Another useful plot to identify influential observations is plot number 5 from the output:

Figure 7.2: Residuals vs. Leverage

In this plot, we look for cases outside of a dashed line, which represents Cook’s distance. Lines for Cook’s distance thresholds of 0.5 and 1 are included by default. In our example, this line is not even visible, since the Cook’s distance values are far away from the critical values. Generally, you would watch out for outlying values at the upper right corner or at the lower right corner of the plot. Those spots are the places where cases can be influential against a regression line. In our example, there are no influential cases.

To see how influential observations can impact your regression, have a look at this example.

To summarize, if you detected outliers in your data, you should test if these observations exert undue influence on your results using the Cook’s distance statistic as described above. If you detect observations which bias your results, you should remove these observations.

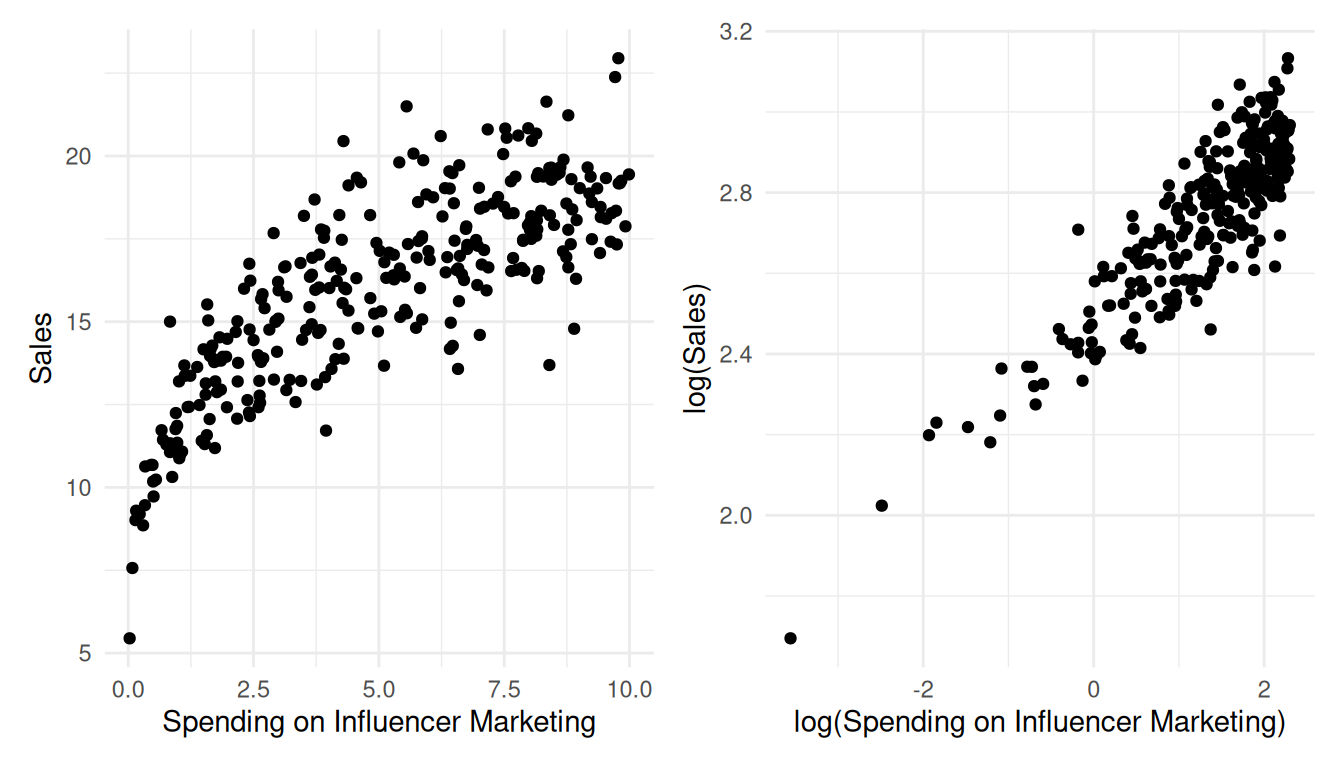

7.3.3 Non-linearity

An important underlying assumption for OLS is that of linearity, meaning that the relationship between the dependent and the independent variable can be reasonably approximated in linear terms. One quick way to assess whether a linear relationship can be assumed is to inspect the added variable plots that we already came across earlier:

Figure 6.1: Partial plots

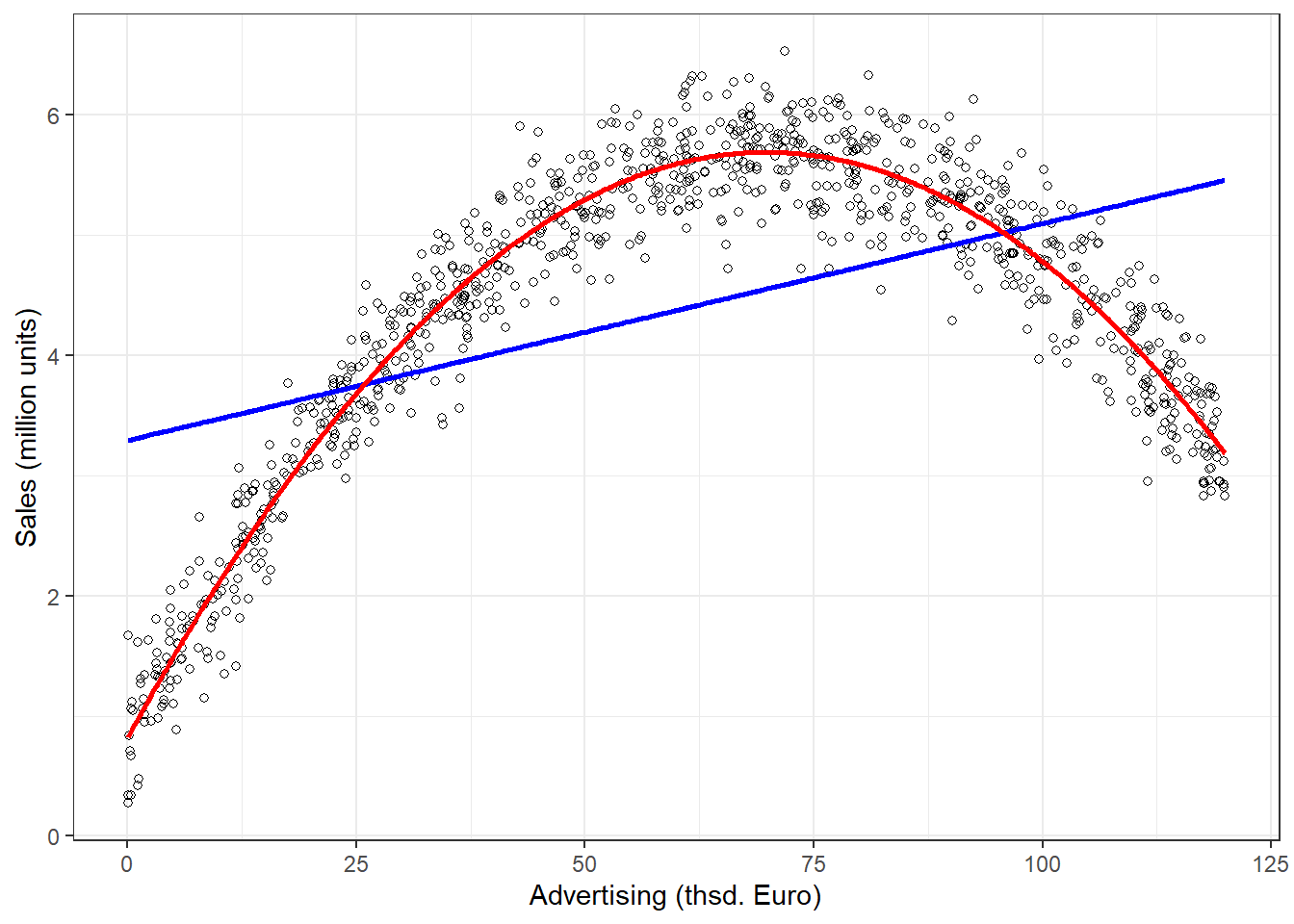

In our example, it appears that linear relationships can be reasonably assumed. Please note, however, that the linear model implies two things:

- Constant marginal returns

- Elasticities increase with X

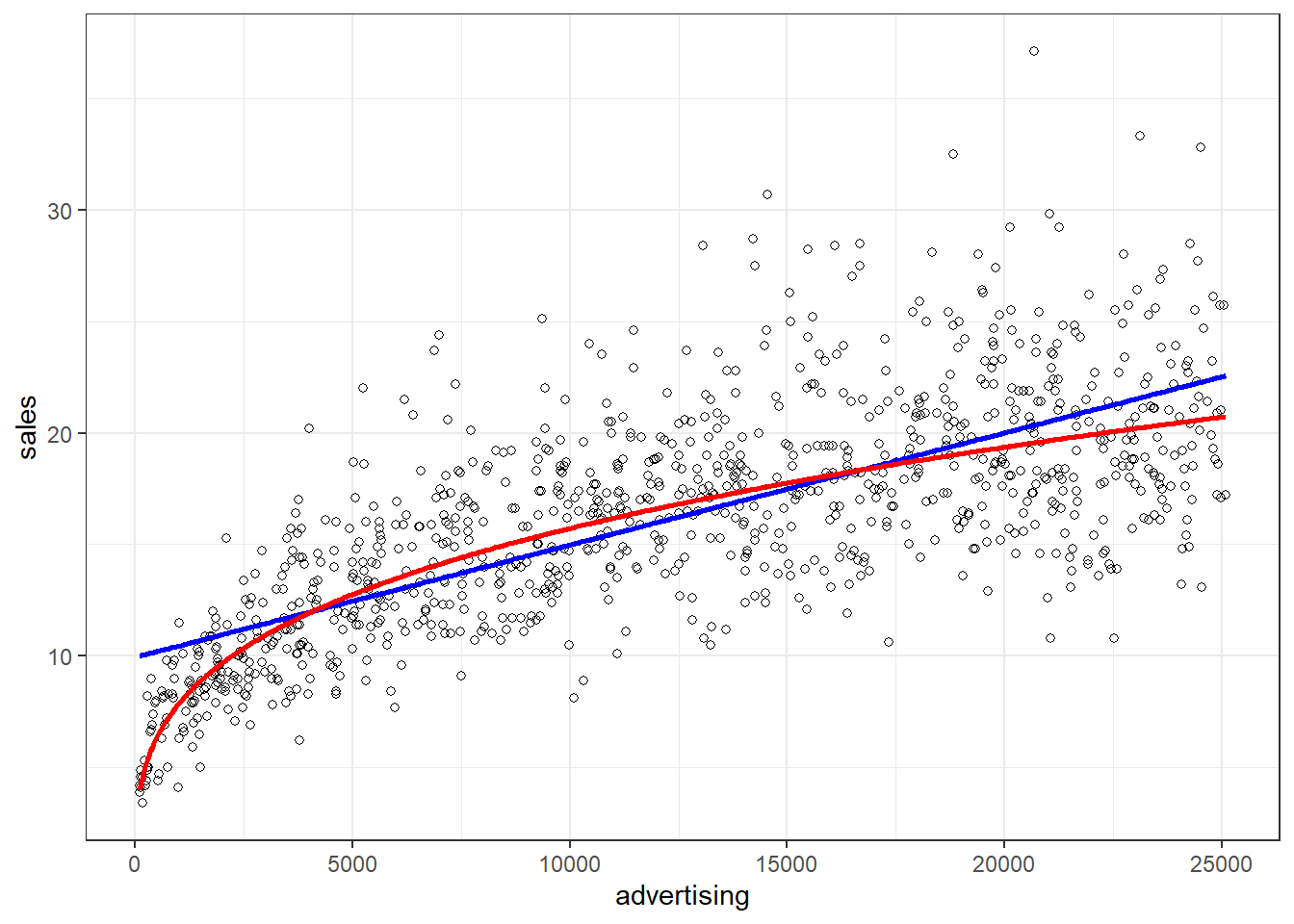

These assumptions may not be justifiable in certain contexts. As an example, consider the effect of marketing expenditures on sales. The linear model assumes that if you change your advertising expenditures from, say 10€ to 11€, this will change sales by the same amount as if you would change your marketing expenditure from, say 100,000€ to 100,001€. This is what we mean by constant marginal returns - irrespective of the level of advertising, spending an additional Euro on advertising will change sales by the same amount. Or consider the effect of price on sales. A linear model assumes that changing the price from, say 10€ to 11€, will change the sales by the same amount as increasing the price from, say 20€ to 21€. An elasticity tells you the relative change in the outcome variable (e.g., sales) due to a relative change in the predictor variable. For example, if we change our advertising expenditures by 1%, sales will change by XY%. As we have seen, the linear model assumes constant marginal returns, which implies that the elasticity increases with the level of the independent variable. In our example, advertising becomes relatively more effective since as we move to higher levels of advertising expenditures, a relatively smaller change in advertising expenditure will yield the same return.

In marketing applications, it is often more realistic to assume decreasing marginal returns, meaning that the return from an increase in advertising is decreasing with increasing levels of advertising (e.g., and increase in advertising expenditures from 10€ to 11€ will lead to larger changes in sales, compared to a change from, say 100,000€ to 100,001€). We will see how to implement such a model further below in the section on extensions of the non-linear model.

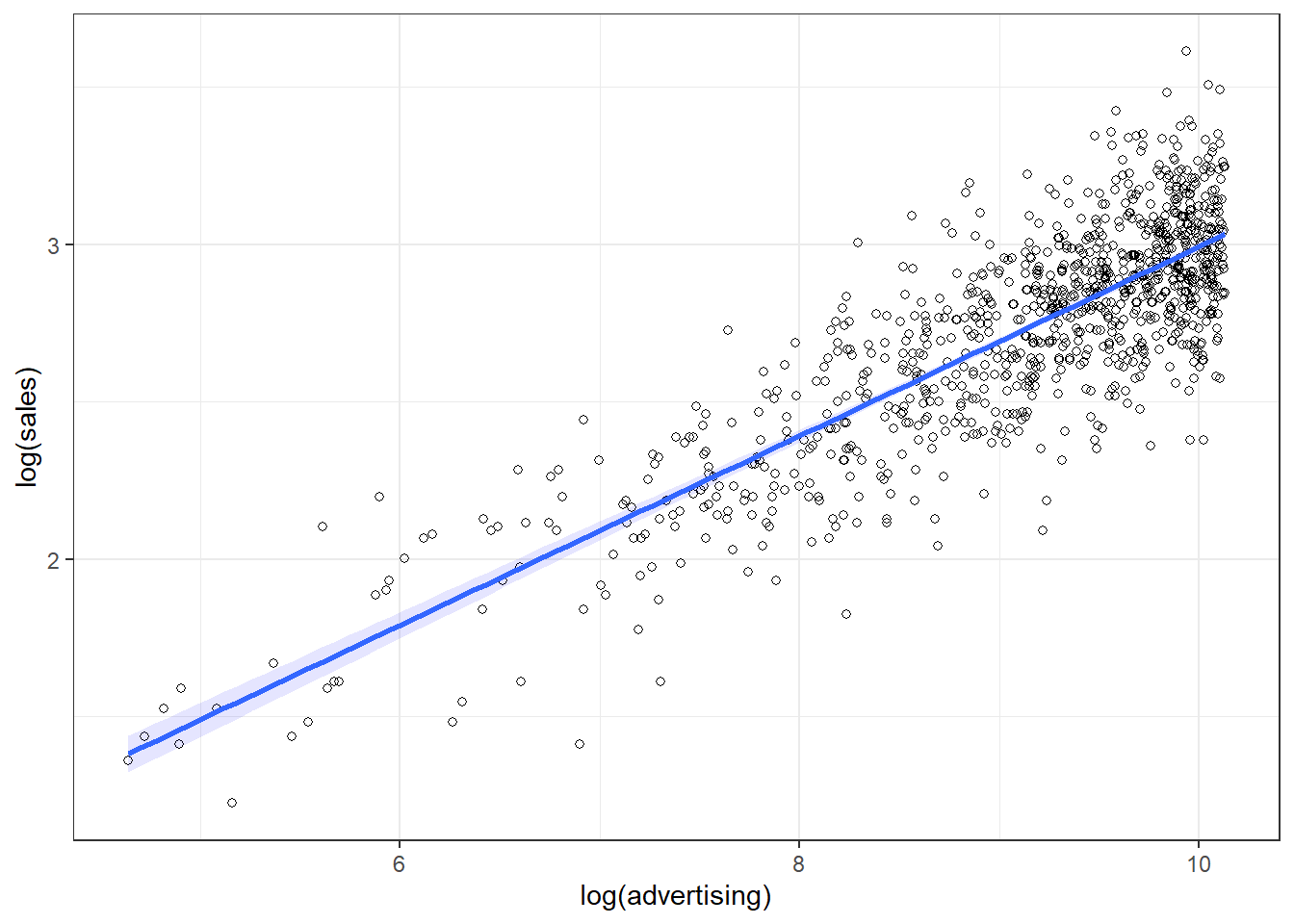

To summarize, if you find indications that the linear specification might not represent your data well, you should consider a non-linear specification, which we will cover below. One popular and easy way to implement a non-linear specification in marketing applications is the so-called log-log model, where you take the logarithm of the dependent variable and independent variable(s). This type of model allows for decreasing marginal returns and yields constant elasticity, which is more realistic in many marketing settings. Constant elasticity means that a 1% change in the independent variable yields the same relative return for different levels of the independent variable. If you are unsure which model specification represents your data better, you can also compare different model specifications, e.g., by comparing the explained variance of the models (the better fitting model explains more of the variation in your data), and then opt for the specification that fits your data best.

7.3.4 Non-constant error variance

The following video summarizes how to identify non-constant error variance in R

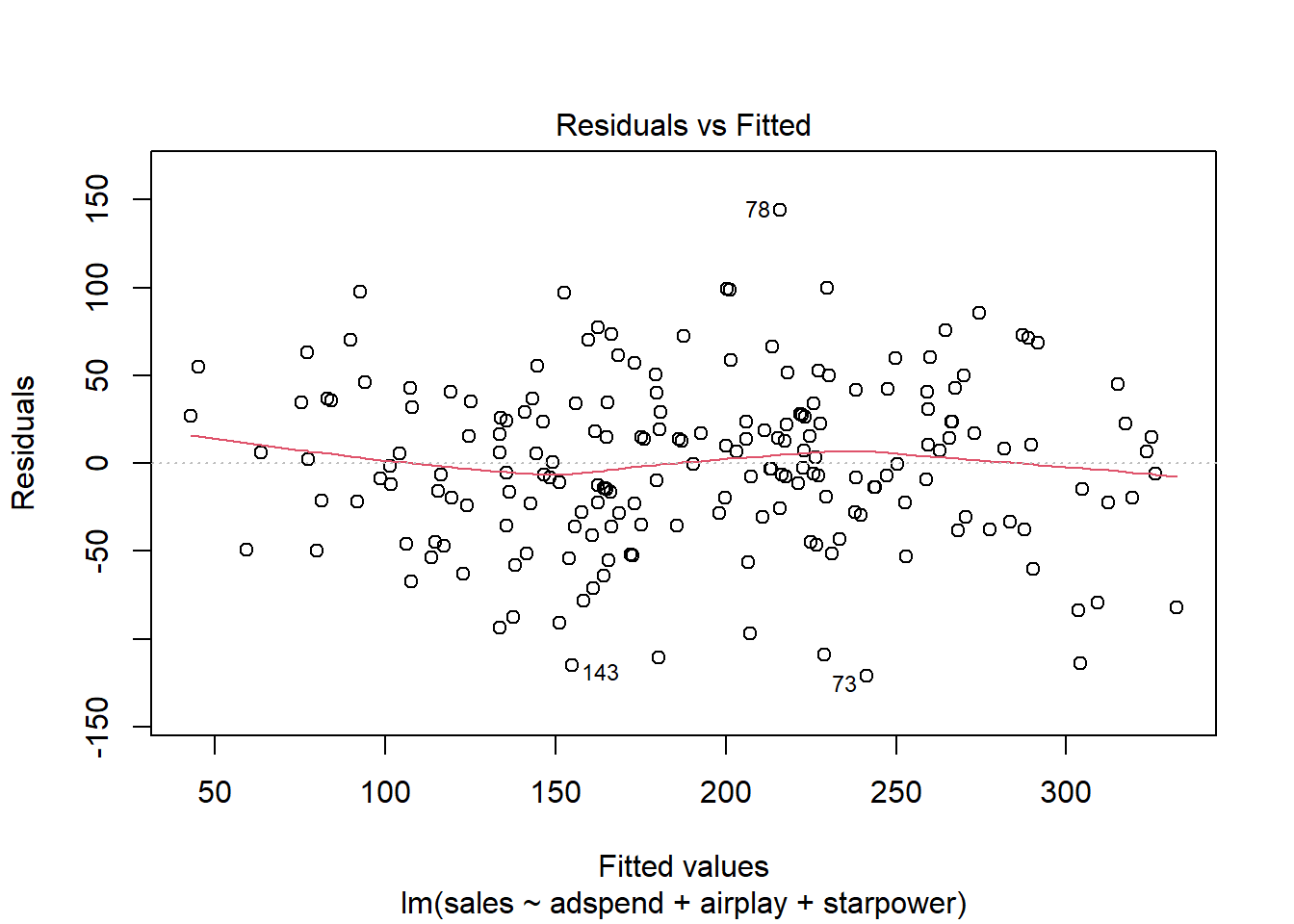

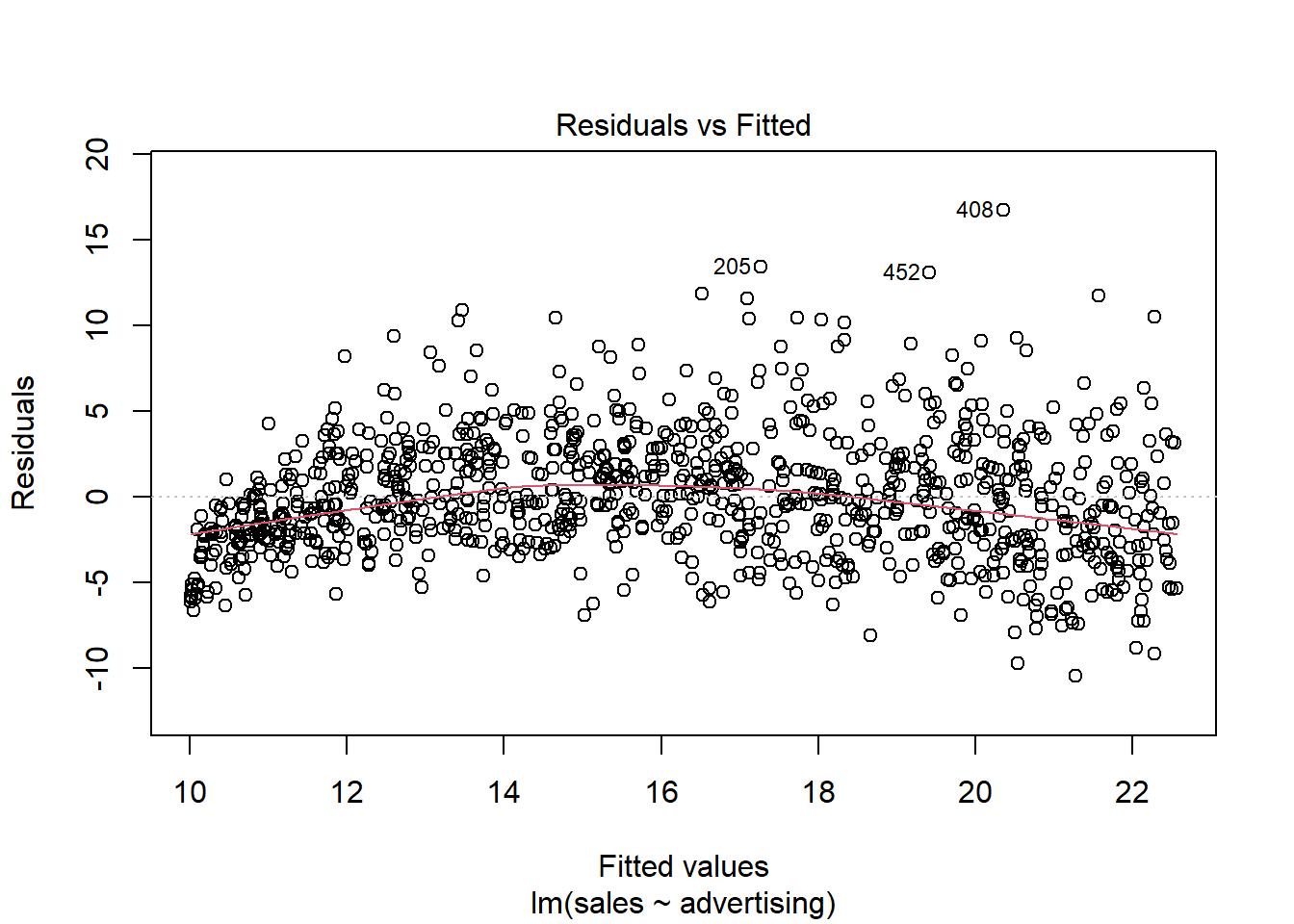

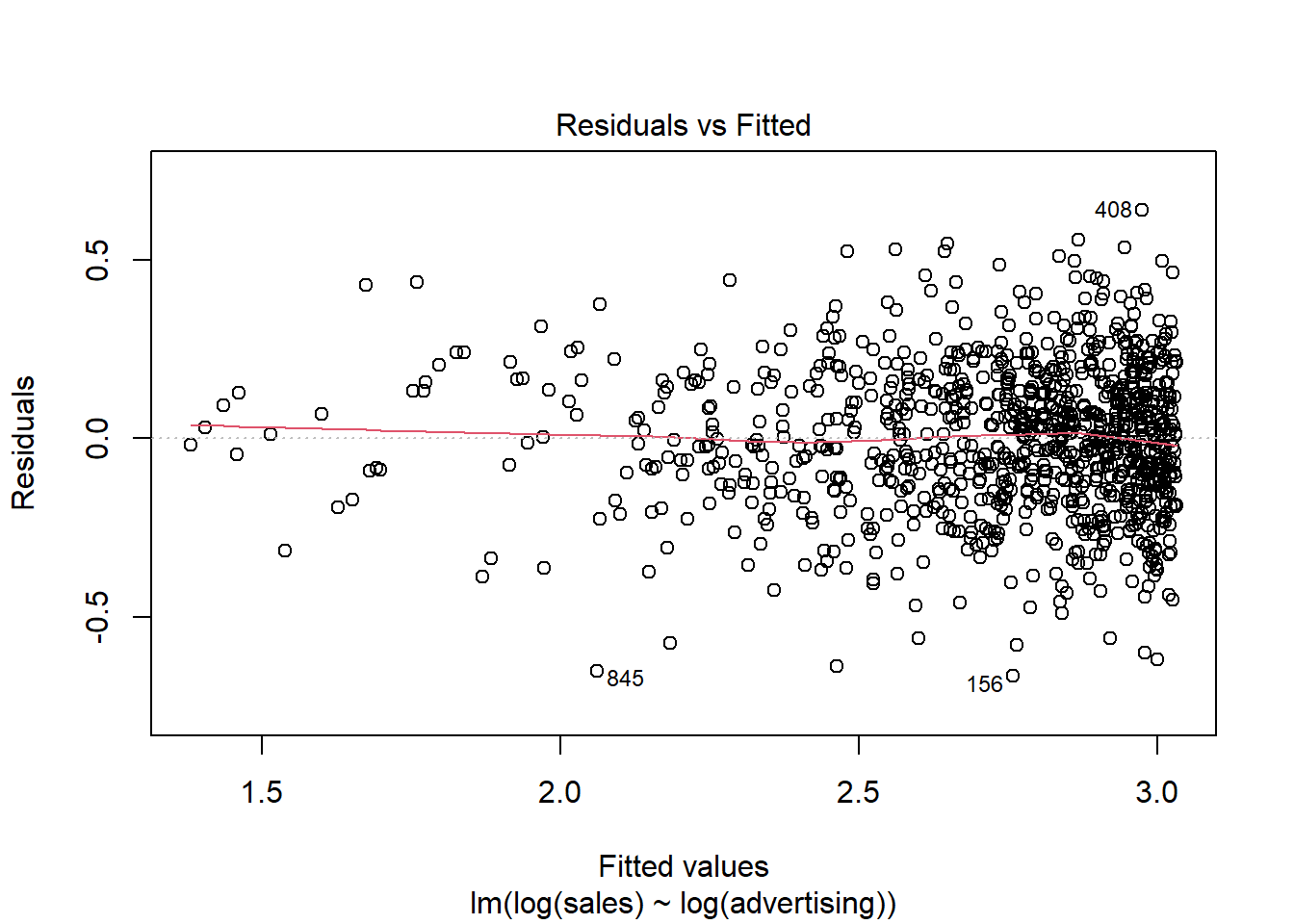

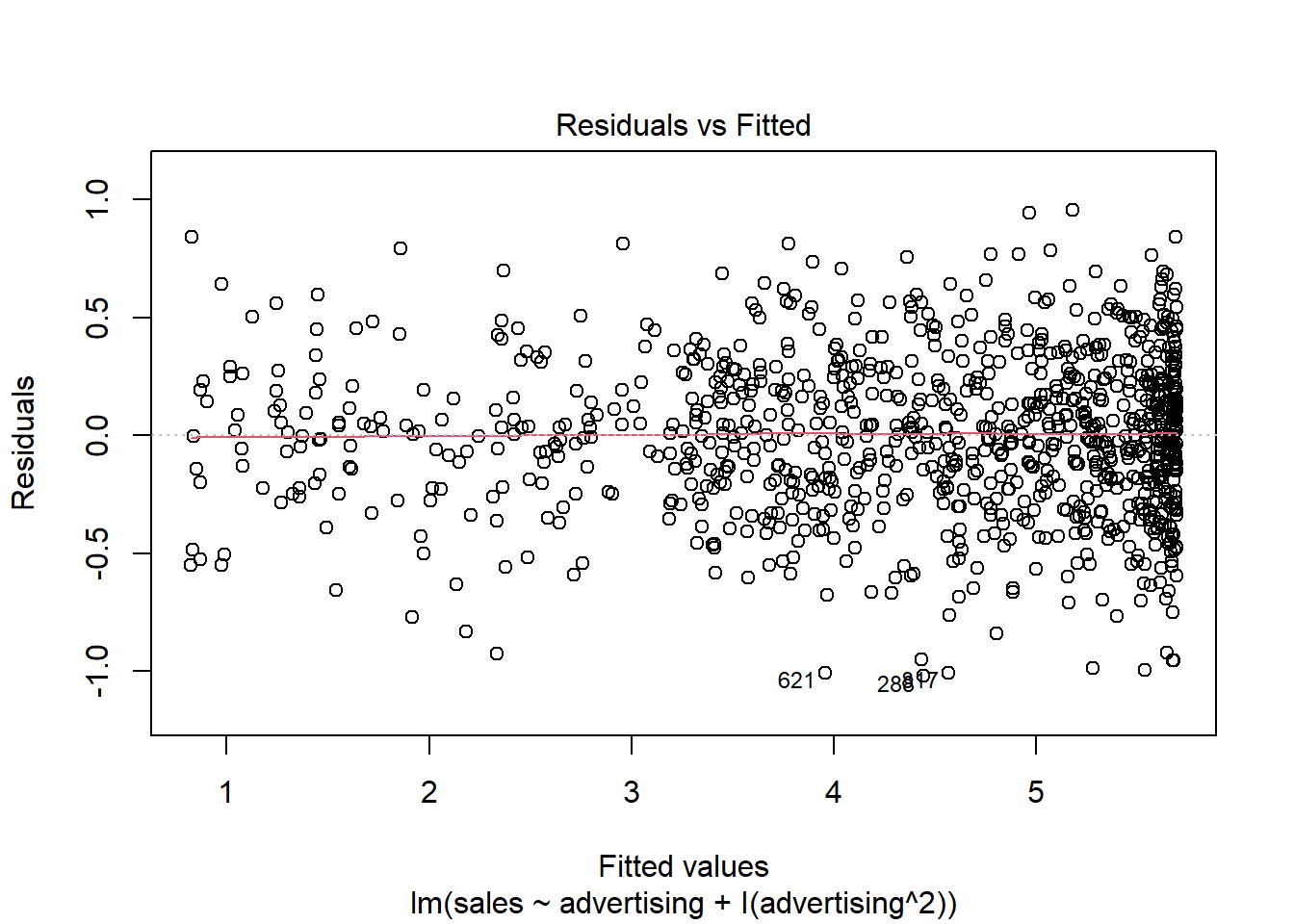

Another important assumption of the linear model is that the error terms have a constant variance (i.e., homoskedasticity). The following plot from the model output shows the residuals (the vertical distance from an observed value to the predicted values) versus the fitted values (the predicted value from the regression model). If all the points fell exactly on the dashed grey line, it would mean that we have a perfect prediction. The residual variance (i.e., the spread of the values on the y-axis) should be similar across the scale of the fitted values on the x-axis.

Figure 4.10: Residuals vs. fitted values

In our case, this appears to be the case. You can identify non-constant variances in the errors (i.e., heteroscedasticity) from the presence of a funnel shape in the above plot. When the assumption of constant error variances is not met, this might be due to a misspecification of your model (e.g., the relationship might not be linear). In these cases, it often helps to transform your data (e.g., using log-transformations). The red line also helps you to identify potential misspecification of your model. It is a smoothed curve that passes through the residuals and if it lies close to the gray dashed line (as in our case) it suggest a correct specification. If the line would deviate from the dashed grey line a lot (e.g., a U-shape or inverse U-shape), it would suggest that the linear model specification is not reasonable and you should try different specifications. You can also test for heterogskedasticity in you regression model by using the Breusch-Pagan test, which has the null hypothesis that the error variances are equal (i.e., homoskedasticity) versus the alternative that the error variances are not equal (i.e., heteroskedasticity). The test can be implemented using the bptest() function from the lmtest package.

##

## studentized Breusch-Pagan test

##

## data: multiple_regression

## BP = 6, df = 3, p-value = 0.1As the p-value is larger than 0.05, we cannot reject the null hypothesis of equal error variances so that the assumption of homoskedasticity is met.

If OLS is performed despite heteroscedasticity, the estimates of the coefficient will still be correct on average. However, the estimator is inefficient, meaning that the standard error is wrong, which will impact the significance tests (i.e., the p-values will be wrong).

Assume that the test would have suggested a violation of the assumption of homoskedasticity - how could you proceed in this case? In the presence of heteroskedasticity, you could rely on robust regression methods, which correct the standard errors. You could implement a robust regression model in R using the coeftest() function from the sandwich package as follows:

##

## t test of coefficients:

##

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -26.61296 16.17045 -1.65 0.1

## adspend 0.08488 0.00693 12.25 < 0.0000000000000002 ***

## airplay 3.36743 0.31510 10.69 < 0.0000000000000002 ***

## starpower 11.08634 2.24743 4.93 0.0000017 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1As you can see, the standard errors (and thus the t-values and p-values) are different compared to the non-robust specification above while the coefficients remain unchanged. However, the difference in this example is not too large since the Breusch-Pagan test suggested the presence of homoskedasticity and we could thus rely on the standard output.

To summarize, you can inspect if the assumption of homoskedasticity is met using the residual plot and the Breusch-Pagan test. If the assumption is violated, you should try to transform your data (e.g., using a log-transformation) first and see if this solves the problem. If the problem persists, you can rely on the robust standard errors as it is shown in the example above.

7.3.5 Non-normally distributed errors

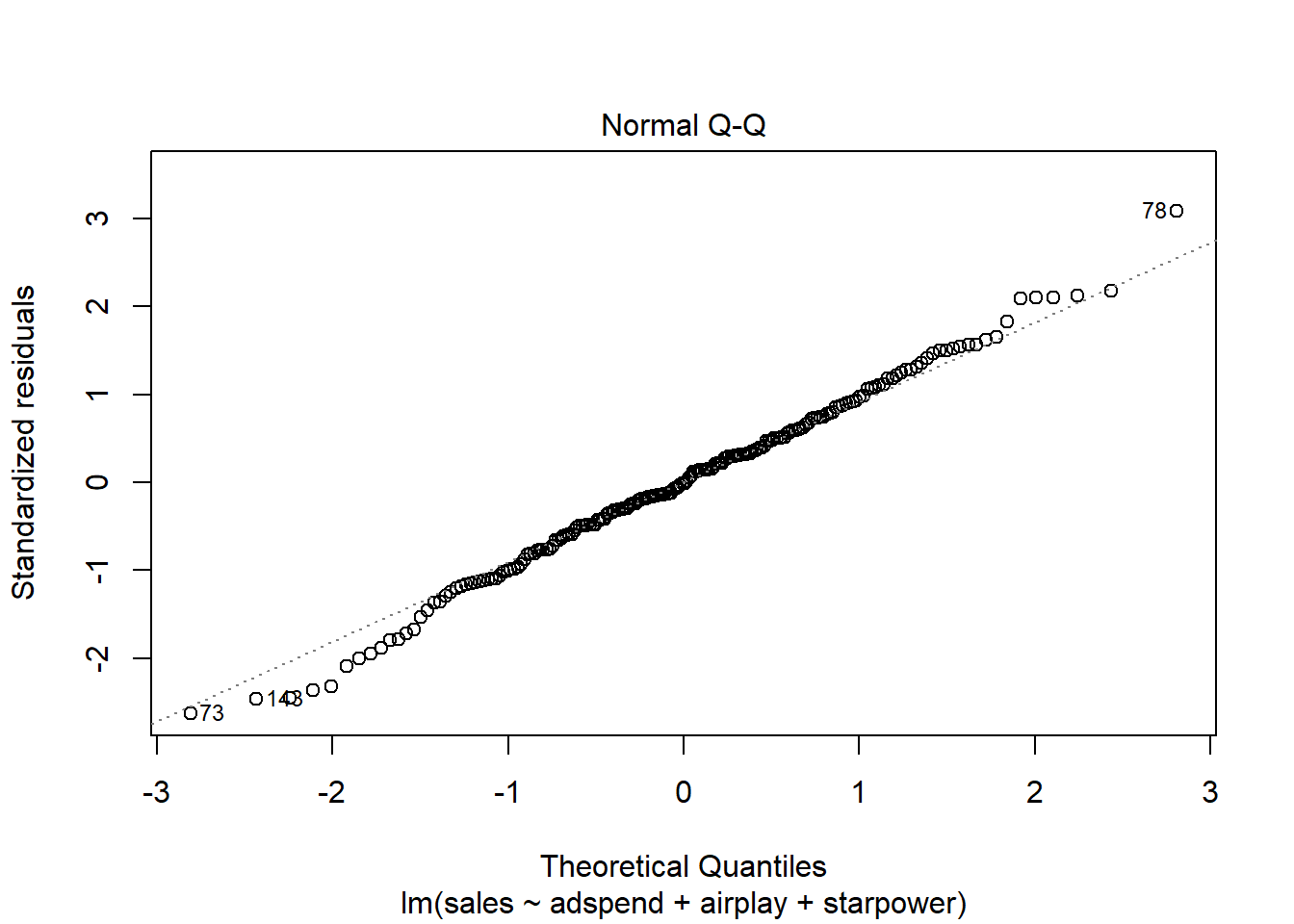

Another assumption of OLS is that the error term is normally distributed. This can be a reasonable assumption for many scenarios, but we still need a way to check if it is actually the case. As we can not directly observe the actual error term, we have to work with the next best thing - the residuals.

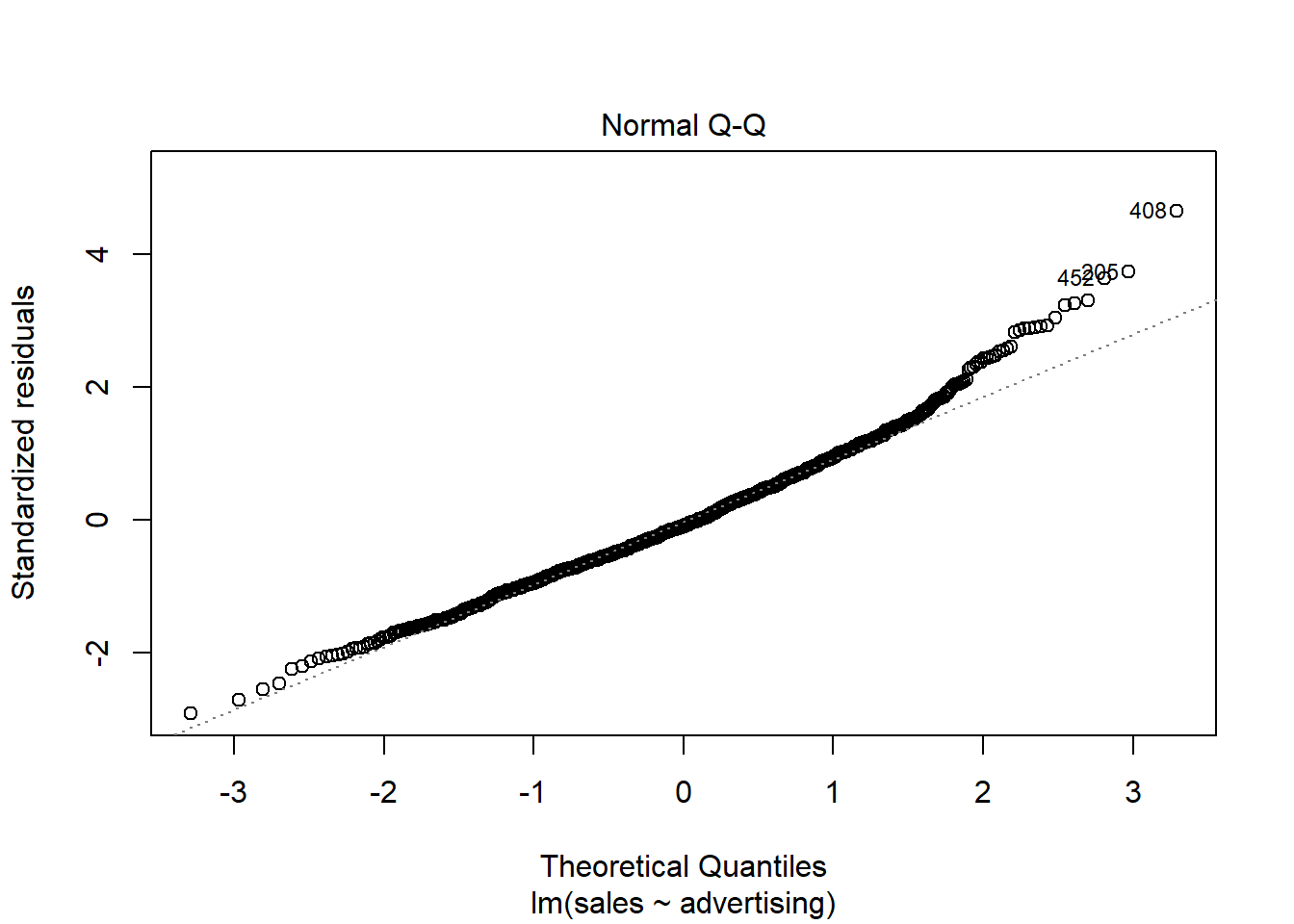

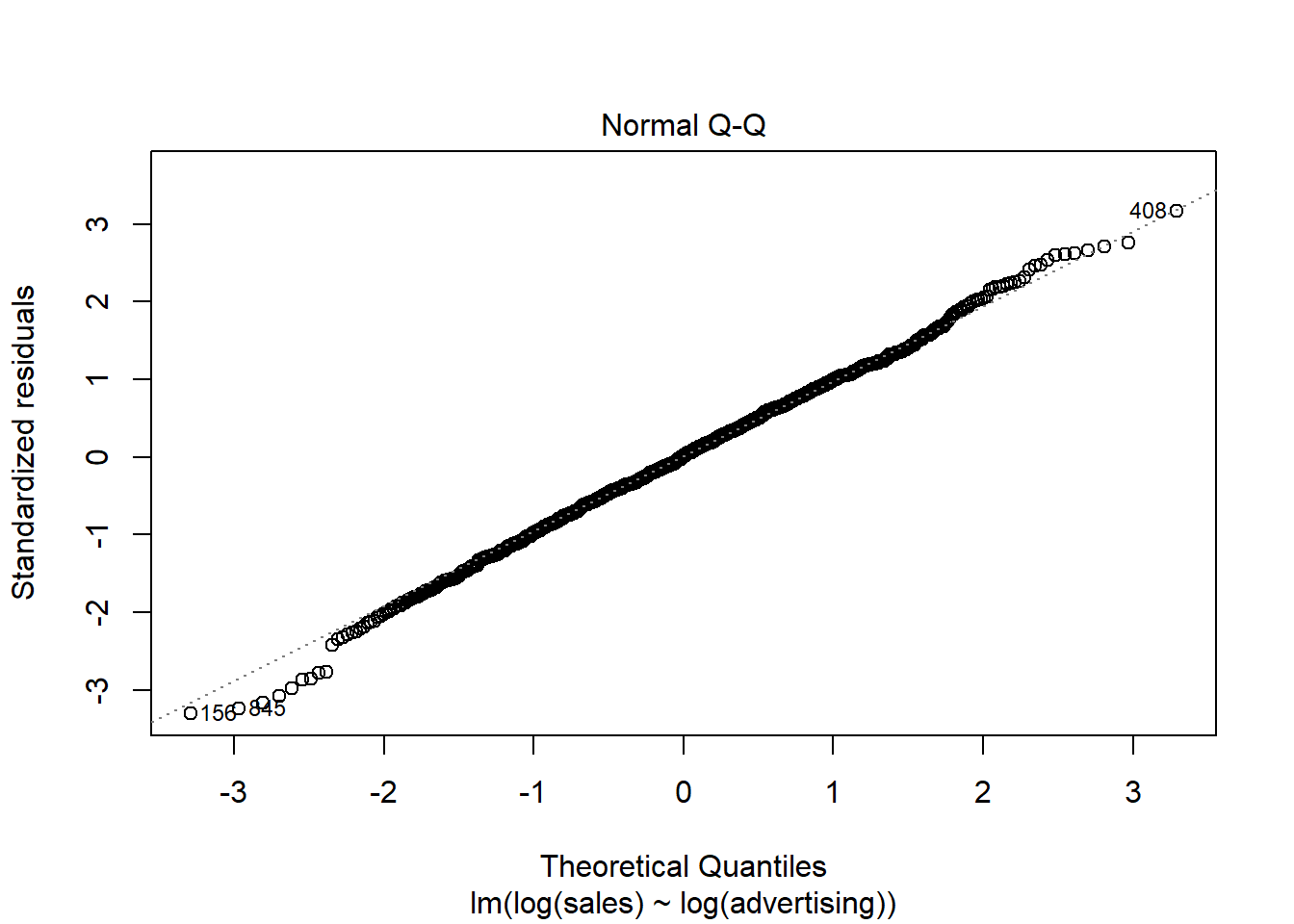

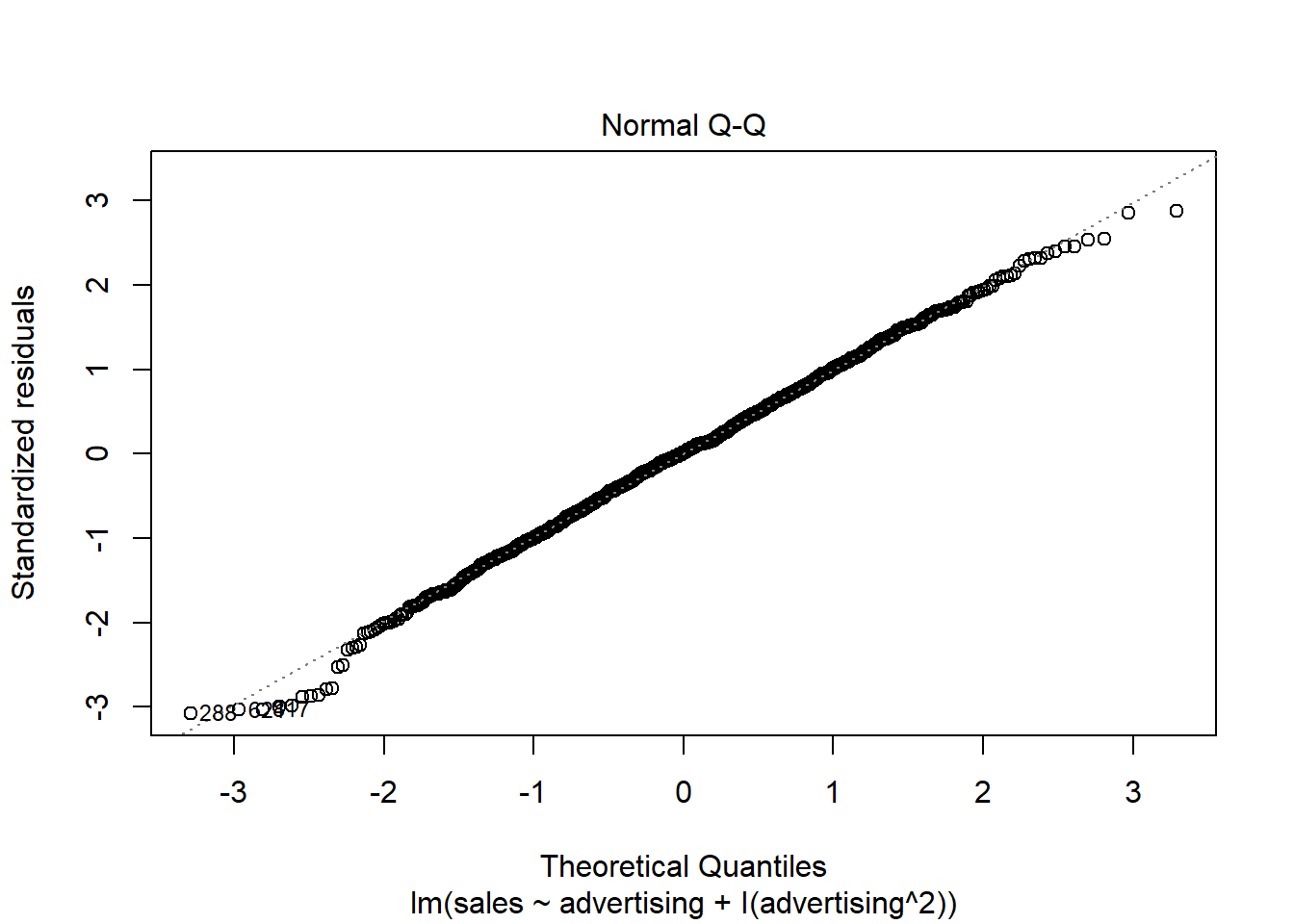

A quick way to assess whether a given sample is approximately normally distributed is by using Q-Q plots. These plot the theoretical position of the observations (under the assumption that they are normally distributed) against the actual position. The plot below is created by the model output and shows the residuals in a Q-Q plot. As you can see, most of the points roughly follow the theoretical distribution, as given by the straight line. If most of the points are close to the line, the data is approximately normally distributed.

Figure 4.12: Q-Q plot

Another way to check for normal distribution of the data is to employ statistical tests that test the null hypothesis that the data is normally distributed, such as the Shapiro–Wilk test. We can extract the residuals from our model using the resid() function and apply the shapiro.test() function to it:

##

## Shapiro-Wilk normality test

##

## data: resid(multiple_regression)

## W = 1, p-value = 0.7As you can see, we can not reject the H0 of normally distributed residuals, which means that we can assume the residuals to be approximately normally distributed in our example.

When the assumption of normally distributed errors is not met, this might again be due to a misspecification of your model, in which case it might help to transform your data (e.g., using log-transformations). If transforming your data doesn’t solve the issue, you may use bootstrapping to obtain corrected results. Bootstrapping is a so-called resampling method in which we use repeated random sampling with replacement to estimate the sampling distribution based on the sample itself, rather than relying on some assumptions about the shape of the sampling distribution to determine the probability of obtaining a test statistic of a particular magnitude. In other words, the data from our sample are treated as the population from which smaller random samples (so-called bootstrap samples) are repeatedly taken with replacement. The statistic of interest, e.g., the regression coefficients in our example, is calculated in each sample, and by taking many samples, the sampling distribution can be estimated. Similar to the simulations we did in chapter 5, the standard error of the statistic can be estimated using the standard deviation of the sampling distribution. Once we have computed the standard error, we can use it to compute the confidence intervals and significance tests. You can find a description of how to implement this procedure in R e.g., here).

Essentially, we can use the boot() function contained in the boot package to obtain the bootstrapped results. However, we need to pass this function a statistic to apply the bootstrapping on - in our case, this would be the coefficients from our regression model. To make this easier, we can write a function that returns the coefficients from the model for every bootstrap sample that we take. In the following code block, we specify a function called bs(), which does exactly this (don’t worry if you don’t understand all the details - its basically just another function we can use to automate certain steps in the analysis, only that in this case we have written the function ourselves rather than relying on functions contained in existing packages).

# function to obtain regression coefficients

bs <- function(formula, data, indices) {

d <- data[indices,] # allows boot to select sample

fit <- lm(formula, data=d)

return(coef(fit))

}Now that we have specified this function, we can use it within the boot function to obtained the bootstrapped results for the regression coefficients. To do this, we first load the boot package and use the boot function by specifying the following arguments:

- data: the data set we use (the regression data set in our example)

- statistic: the statistic(s) we would like to bootstrap (in our example, we use the function we have specified above to obtain the regression coefficients)

- R: the number of bootstrap samples to use (we will use 2000 samples)

- formula: the regression equation from which we obtain the coefficients for the bootstrapping procedure

We create an object called boot_out, which contains the output from the bootstrapping.

# If the residuals do not follow a normal distribution, transform the data or use bootstrapping

library(boot)

# bootstrapping with 2000 replications

boot_out <- boot(data=regression, statistic=bs, R=2000, formula = sales ~ adspend + airplay + starpower)In a next step, let’s extract the 95% confidence intervals for the regression coefficients. The intercept is the first element in the boot_out object and we can use the boot.ci() function and use argument index=1 to obtain the bootstrapped confidence interval for the intercept. The type argument specifies the type of confidence interval we would like to obtain (in this case, we use bias corrected and accelerated, i.e., bca):

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 2000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boot_out, type = "bca", index = 1)

##

## Intervals :

## Level BCa

## 95% (-57.3, 7.8 )

## Calculations and Intervals on Original ScaleWe can obtain the confidence intervals for the remaining coefficients in the same way:

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 2000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boot_out, type = "bca", index = 2)

##

## Intervals :

## Level BCa

## 95% ( 0.071, 0.098 )

## Calculations and Intervals on Original Scale## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 2000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boot_out, type = "bca", index = 3)

##

## Intervals :

## Level BCa

## 95% ( 2.7, 3.9 )

## Calculations and Intervals on Original Scale## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 2000 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boot_out, type = "bca", index = 4)

##

## Intervals :

## Level BCa

## 95% ( 6.5, 15.3 )

## Calculations and Intervals on Original ScaleAs usual, we can judge the significance of a coefficient by inspecting whether the null hypothesis (0 in this case) is contained in the intervals. As can be seen, zero is not included in any of the intervals leading us to conclude that all of the predictor variables have a significant effect on the outcome variable.

We could also compare the bootstrapped confidence intervals to the once we obtained from the model without bootstrapping.

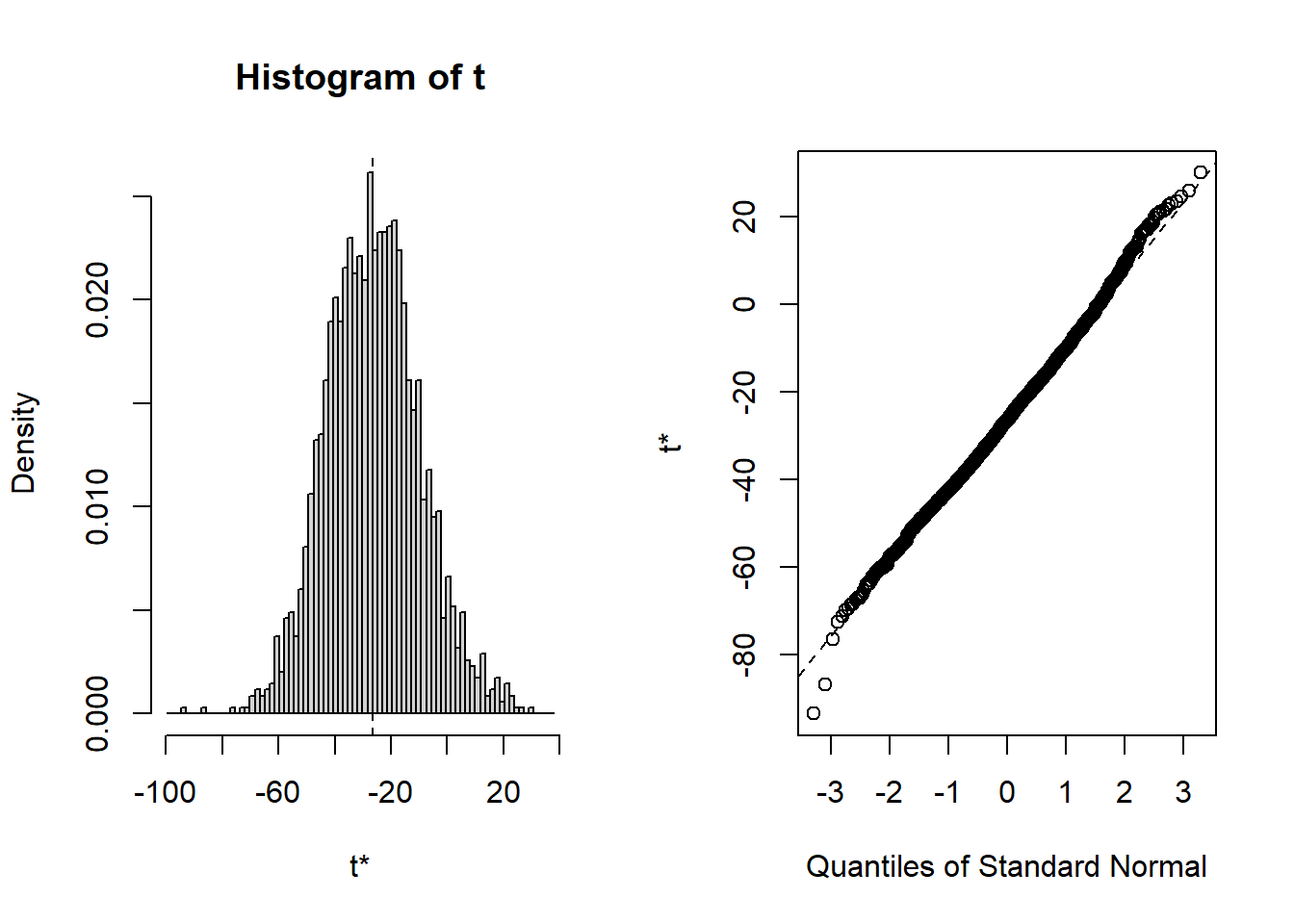

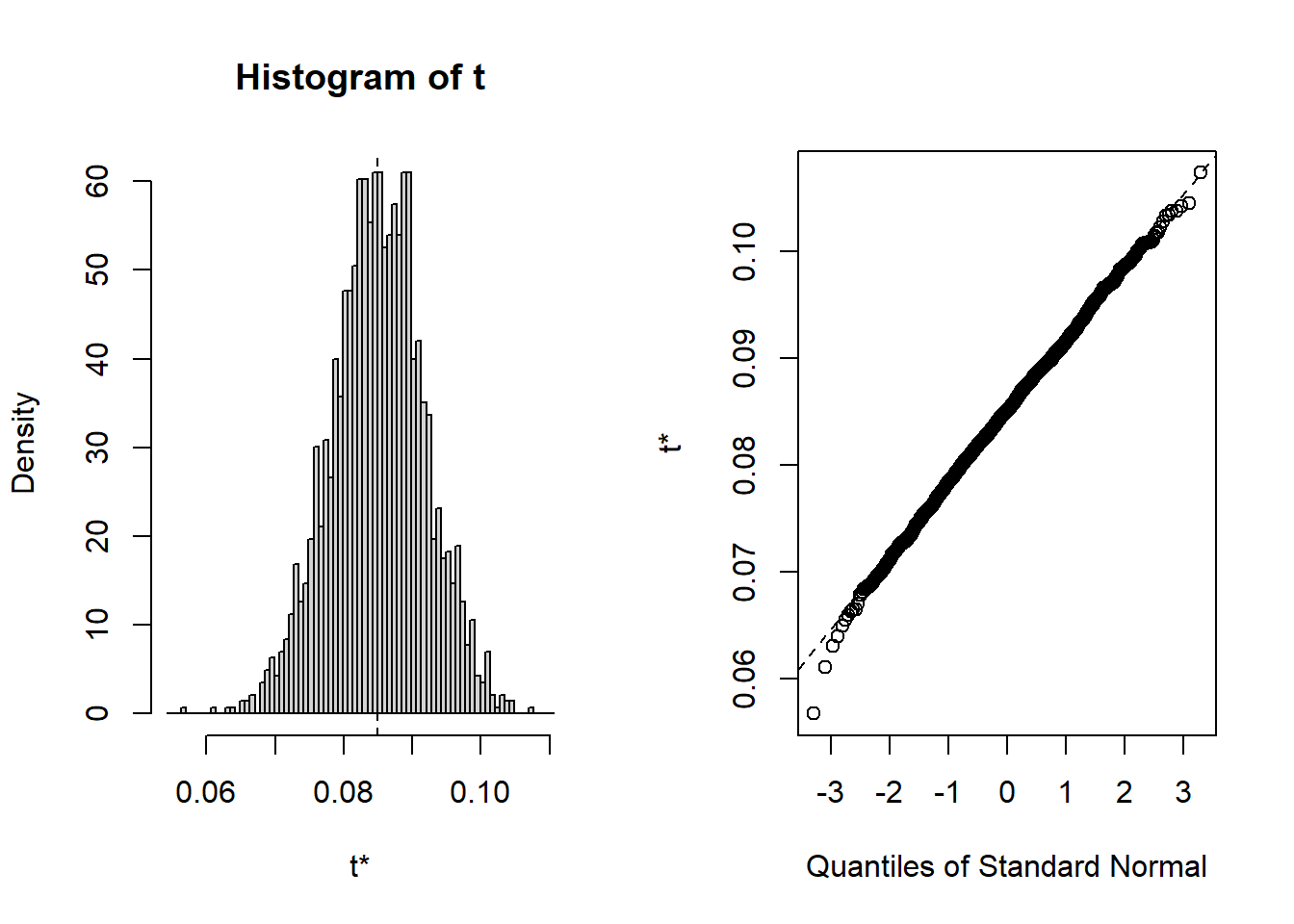

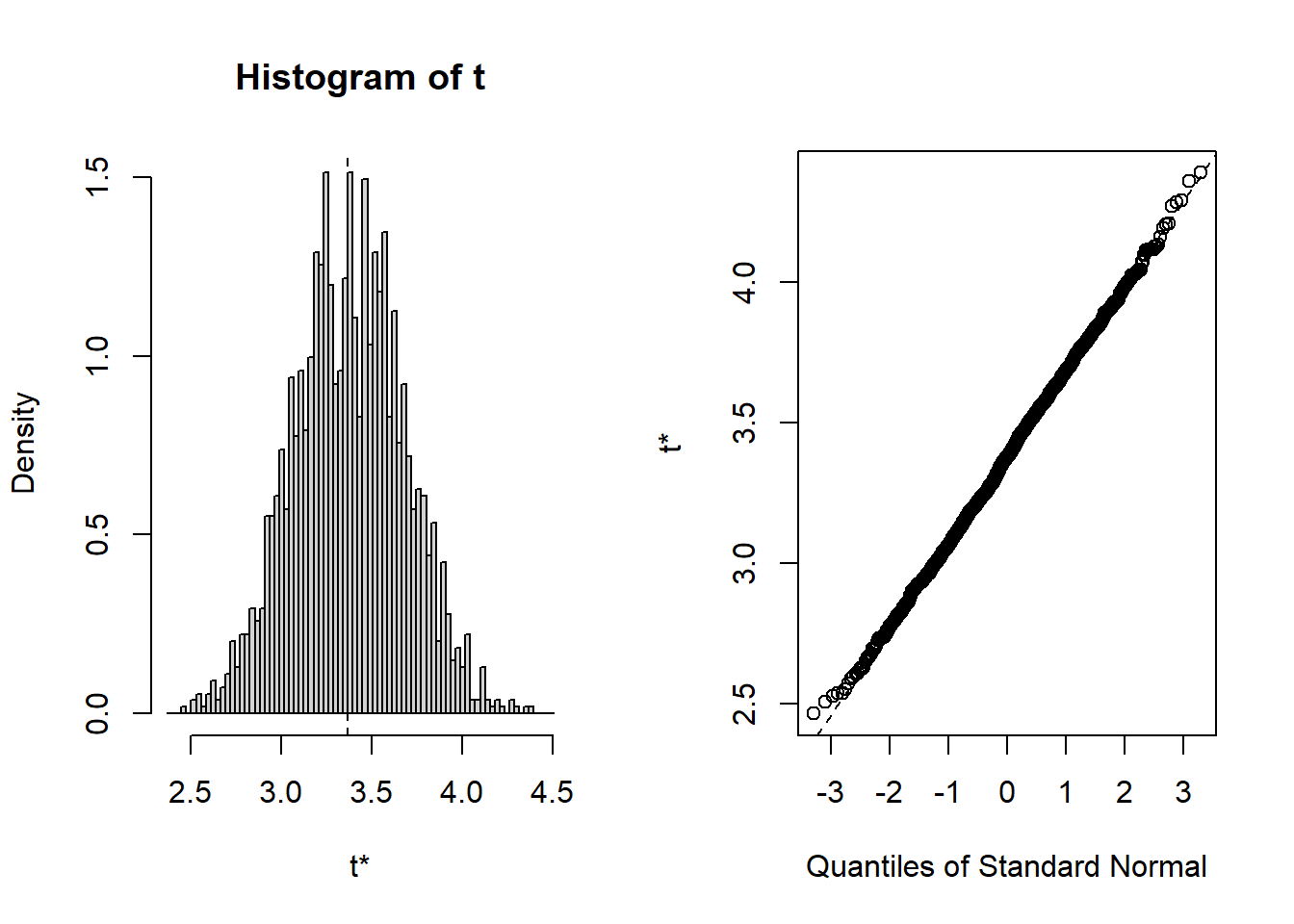

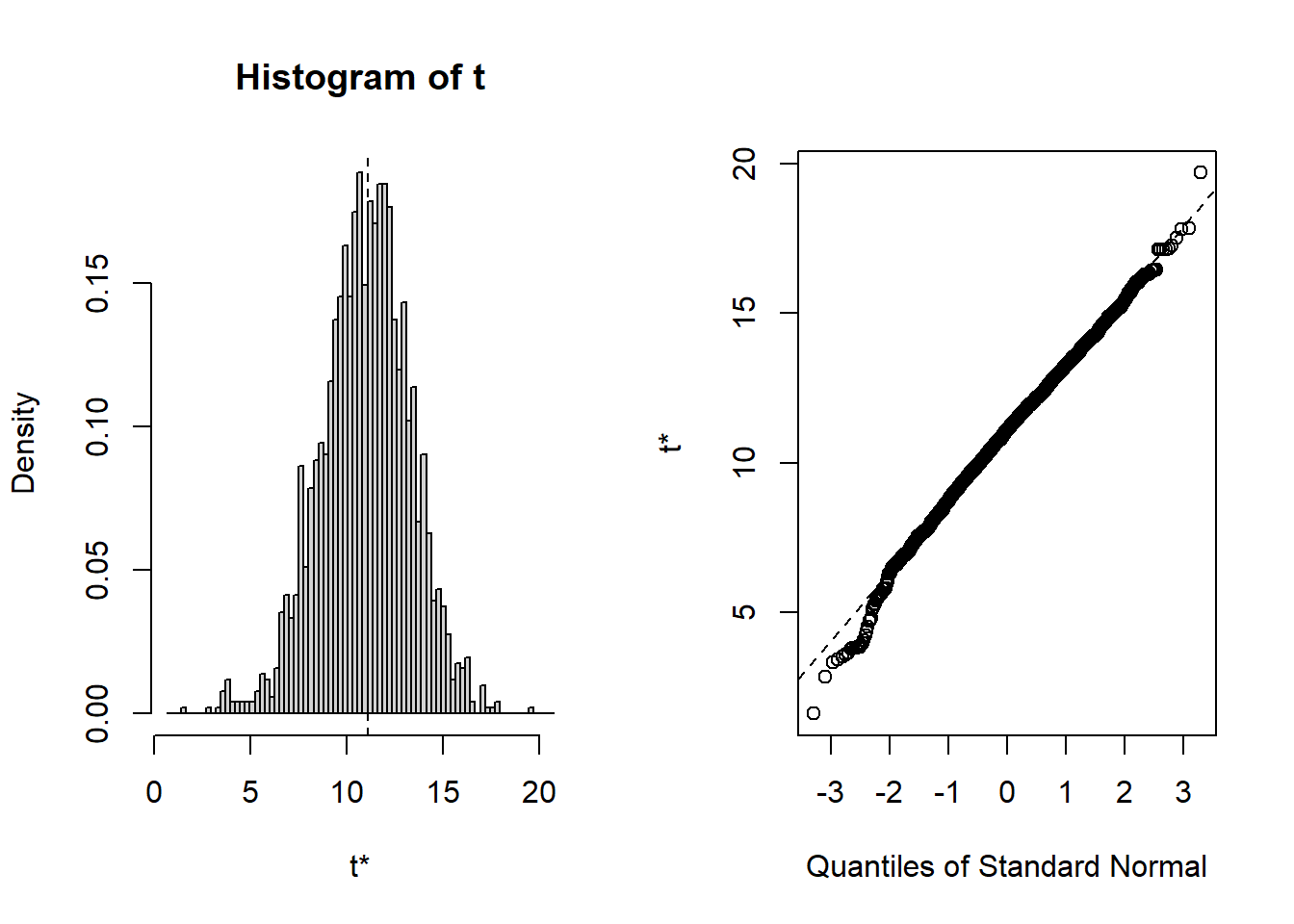

## 2.5 % 97.5 %

## (Intercept) -60.830 7.604

## adspend 0.071 0.099

## airplay 2.820 3.915

## starpower 6.279 15.894As you can see, the bootstrapped confidence interval is very similar to the once obtained without bootstrapping, which is not unexpected since in our example, the tests indicated that our assumptions about the distribution of errors is actually met so that we wouldn’t have needed to apply the bootstrapping. You could also inspect the distribution of the obtained regression coefficients from the 2000 bootstrap samples using the plot() function and passing it the respective index. Inspecting the plots reveals that for all coefficients (with the exception of the intercept) zero is not contained in the range of plausible values, indicating the the coefficients are significant at the 5% level.

To summarize, you can inspect if the assumption of normally distributed errors is violated by visually examining the QQ-plot and using the Shapiro-Wilk test. If the results suggest a non-normal distribution of the errors, you should first try to transform your data (e.g., by using a log-transformation). If this doesn’t solve the issue, you should apply the bootstrapping procedure as shown above to obtain a robust test of the significance of the regression coefficients.

7.3.6 Correlation of errors

The assumption of independent errors implies that for any two observations the residual terms should be uncorrelated. This is also known as a lack of autocorrelation. In theory, this could be tested with the Durbin-Watson test, which checks whether adjacent residuals are correlated. However, be aware that the test is sensitive to the order of your data. Hence, it only makes sense if there is a natural order in the data (e.g., time-series data) when the presence of dependent errors indicates autocorrelation. Since there is no natural order in our data, we don’t need to apply this test.

If you are confronted with data that has a natural order, you can performed the test using the command durbinWatsonTest(), which takes the object that the lm() function generates as an argument. The test statistic varies between 0 and 4, with values close to 2 being desirable. As a rule of thumb values below 1 and above 3 are causes for concern.

7.3.7 Collinearity

Linear dependence of regressors, also known as multicollinearity, is when there is a strong linear relationship between the independent variables. Some correlation will always be present, but severe correlation can make proper estimation impossible. When present, it affects the model in several ways:

- Limits the size of R2: when two variables are highly correlated, the amount of unique explained variance is low; therefore the incremental change in R2 by including an additional predictor is larger if the predictor is uncorrelated with the other predictors.

- Increases the standard errors of the coefficients, making them less trustworthy.

- Uncertainty about the importance of predictors: if two predictors explain similar variance in the outcome, we cannot know which of these variables is important.

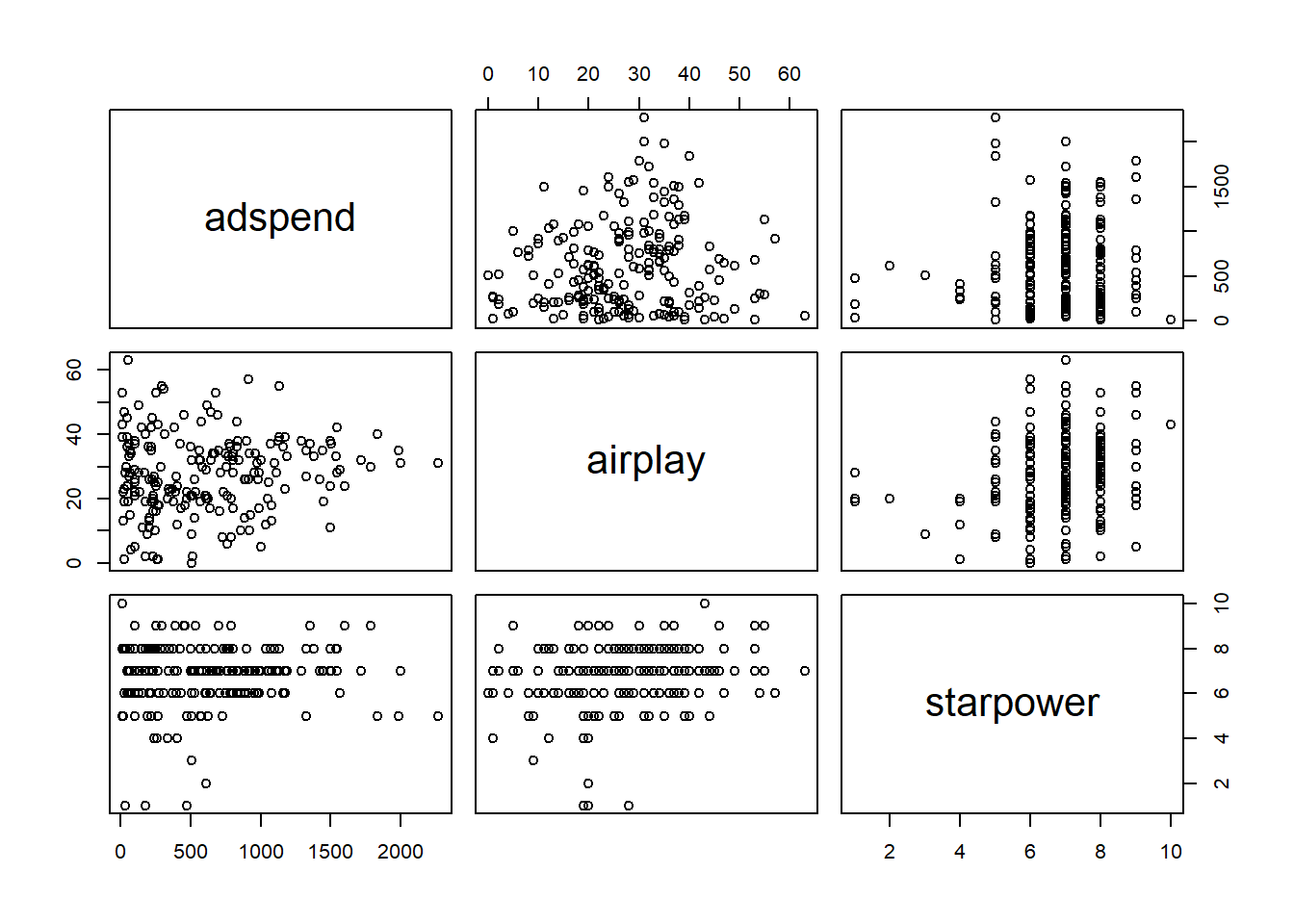

A quick way to find obvious multicollinearity is to examine the correlation matrix of the data. Any value > 0.8 - 0.9 should be cause for concern. You can, for example, create a correlation matrix using the rcorr() function from the Hmisc package.

## adspend airplay starpower

## adspend 1.00 0.10 0.08

## airplay 0.10 1.00 0.18

## starpower 0.08 0.18 1.00

##

## n= 200

##

##

## P

## adspend airplay starpower

## adspend 0.1511 0.2557

## airplay 0.1511 0.0099

## starpower 0.2557 0.0099The bivariate correlations can also be show in a plot:

Figure 7.3: Bivariate correlation plots

However, this only spots bivariate multicollinearity. Variance inflation factors can be used to spot more subtle multicollinearity arising from multivariate relationships. It is calculated by regressing Xi on all other X and using the resulting R2 to calculate

\[\begin{equation} \begin{split} \frac{1}{1 - R_i^2} \end{split} \tag{7.19} \end{equation}\]

VIF values of over 4 are certainly cause for concern and values over 2 should be further investigated. If the average VIF is over 1 the regression may be biased. The VIF for all variables can easily be calculated in R with the vif() function contained in the car package.

## adspend airplay starpower

## 1 1 1As you can see the values are well below the cutoff, indicating that we do not have to worry about multicollinearity in our example. If multicollinearity turns out to be an issue in your analysis, there are at least two ways to proceed. First, you could eliminate one of the predictors, e.g., by using variable selection procedures which will be covered below. Second, you could combine predictors that are highly correlated using statistical methods aiming at reducing the dimensionality of the data based on the correlation matrix, such as the Pricipal Component Analysis (PCA), which will be covered in the next chapter.

To summarize, you can inspect if the assumption of multicollinearity is violated by inspecting the variance inflation factor associated with the regression coefficients. Values over 4 are a cause for concern. In case multicollinearity turns out to be an issue, you can address it by 1) eliminating one of the regressors (e.g., using variable selection procedures) or combining variables that are highly correlated in one factor (e.g., using Principal Component Analysis).

7.3.8 Omitted Variables

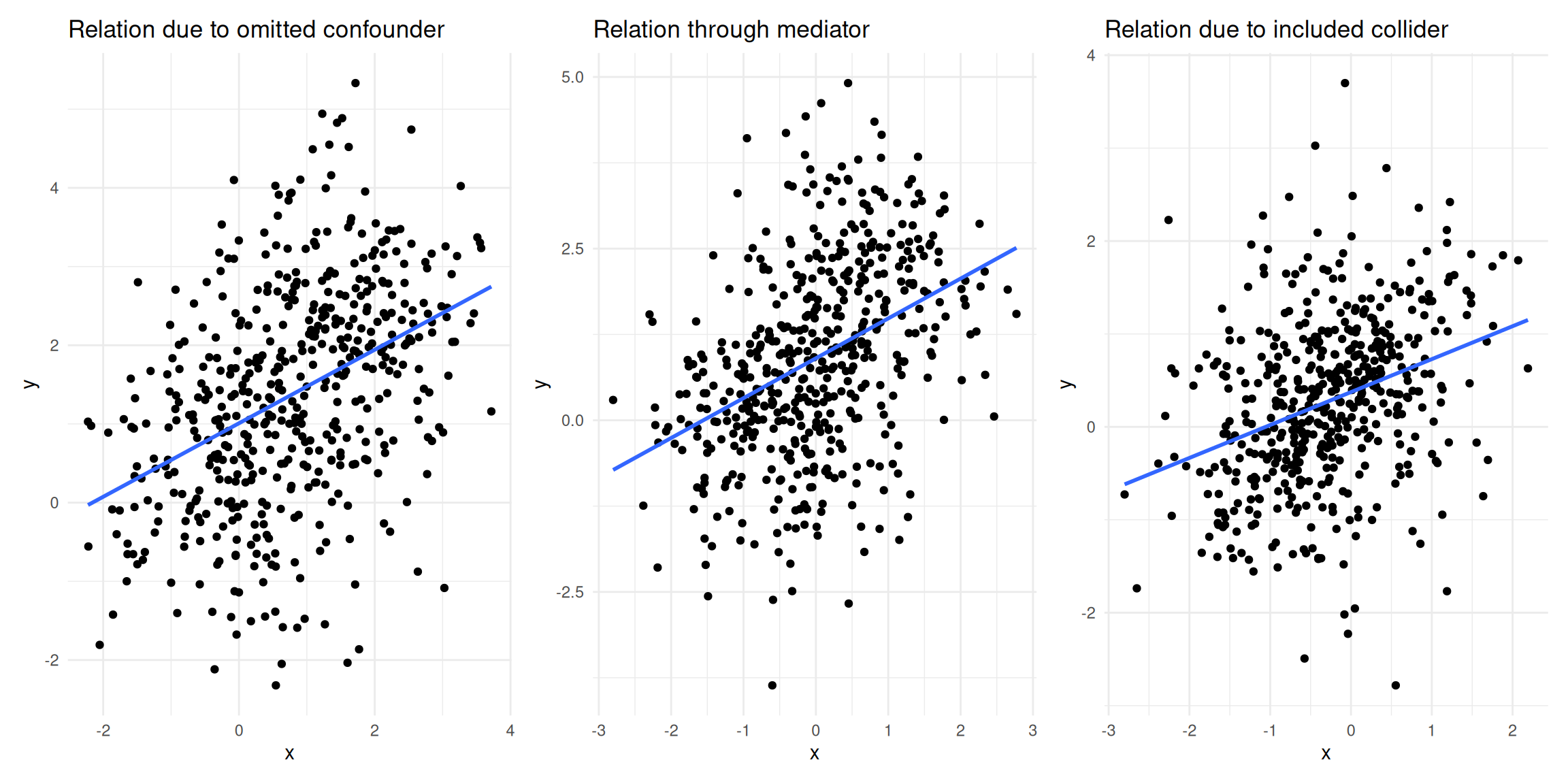

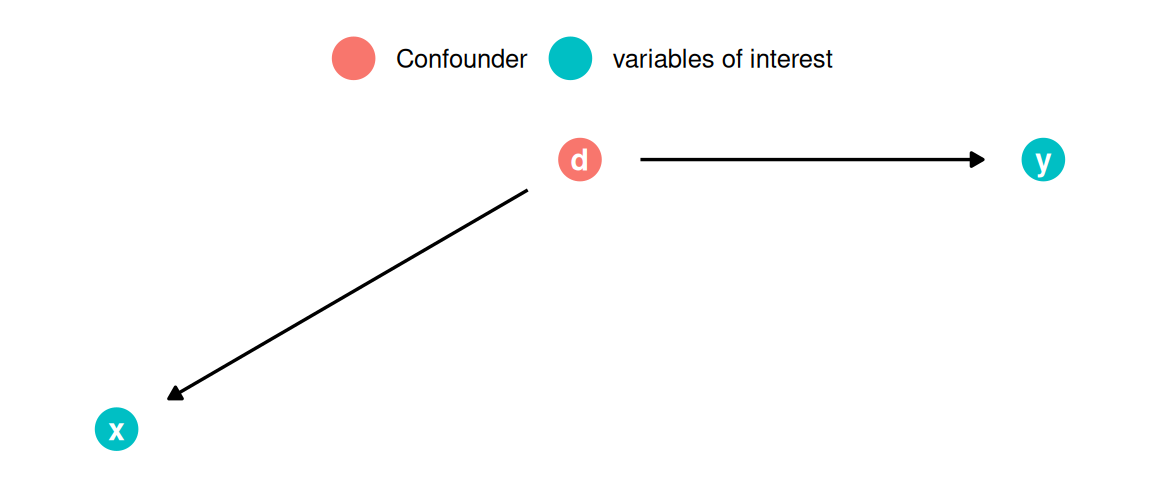

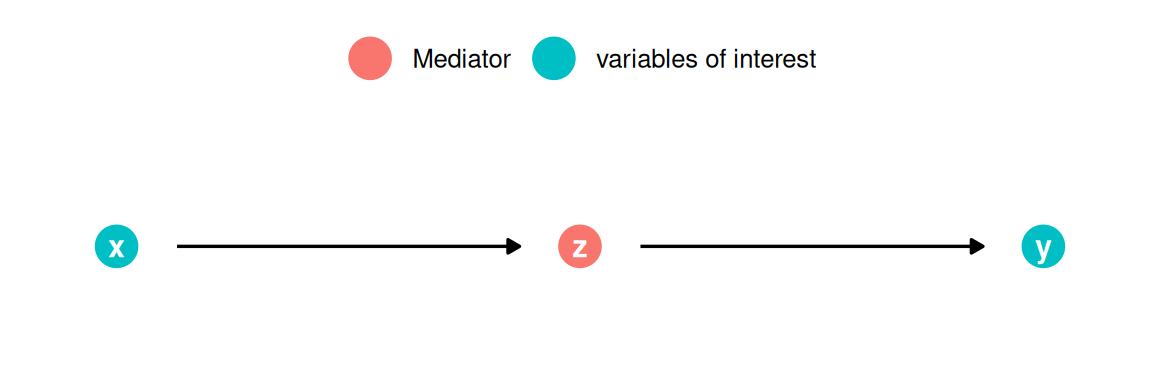

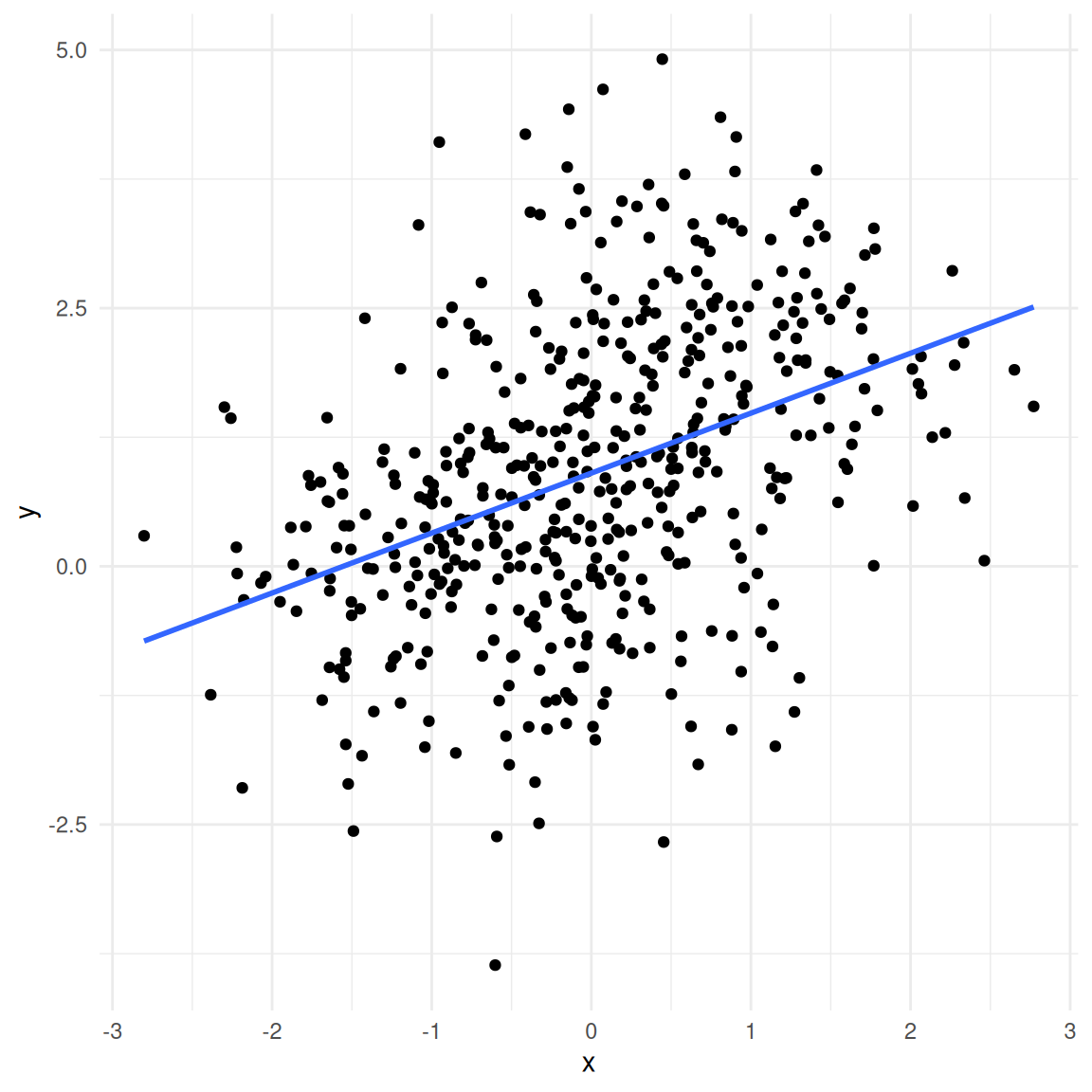

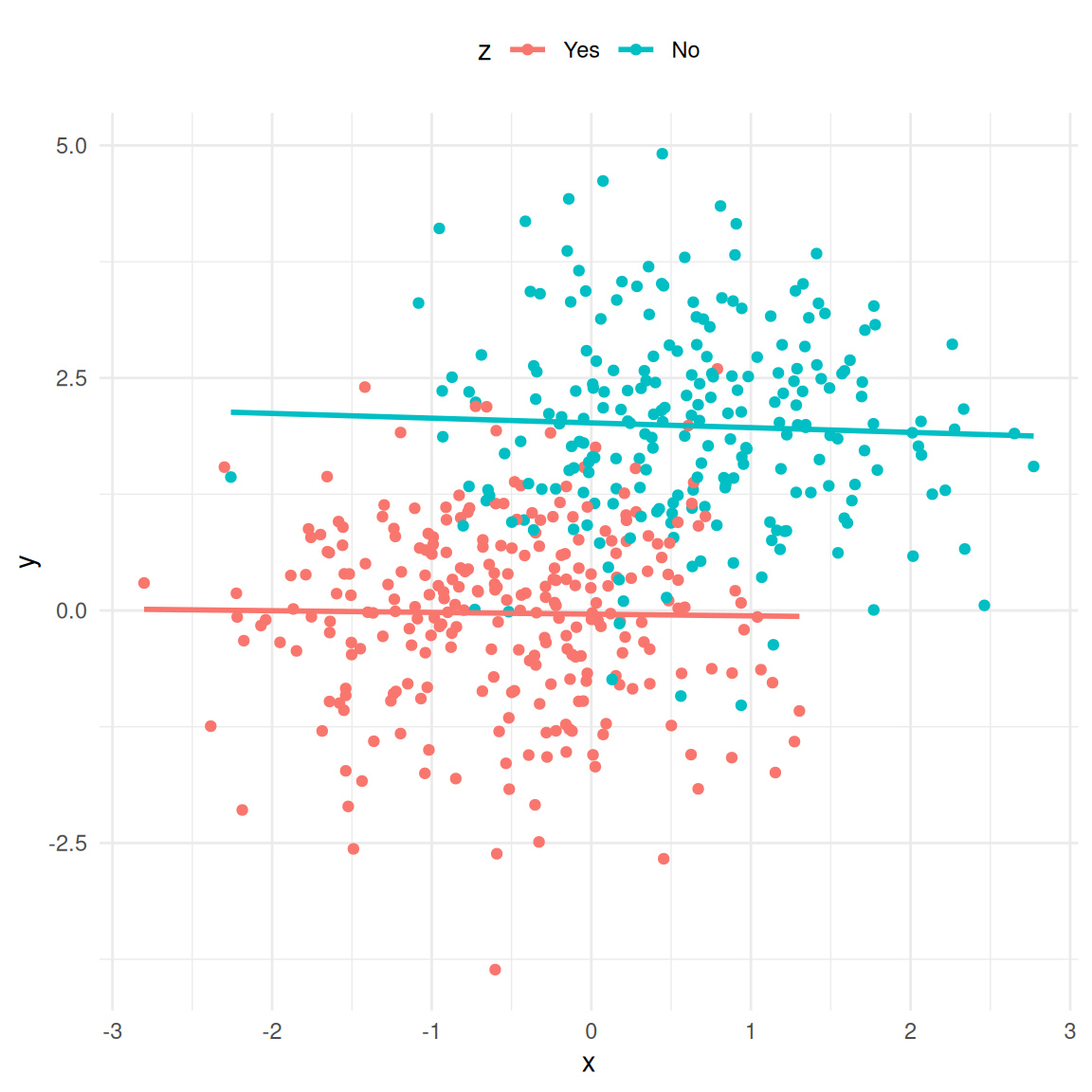

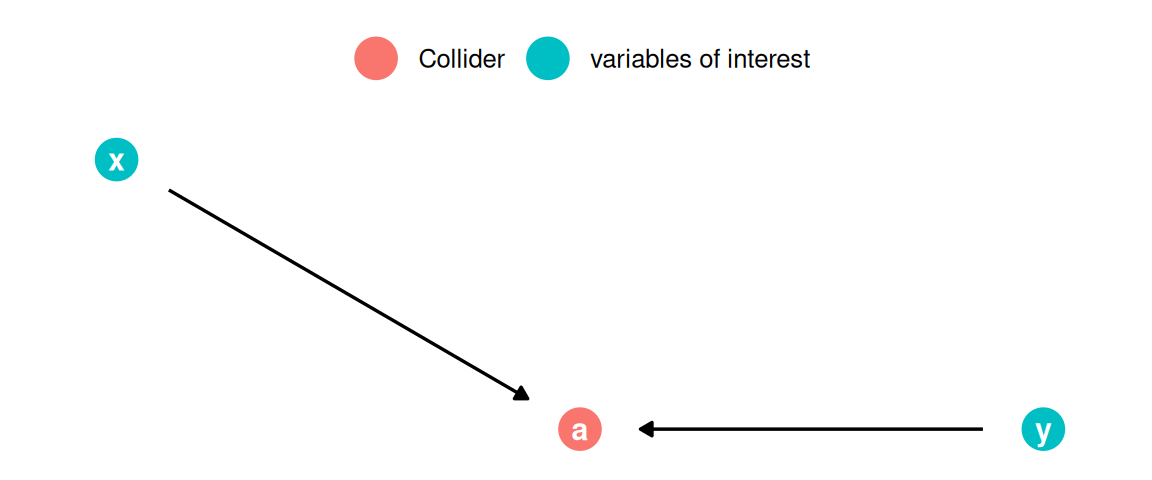

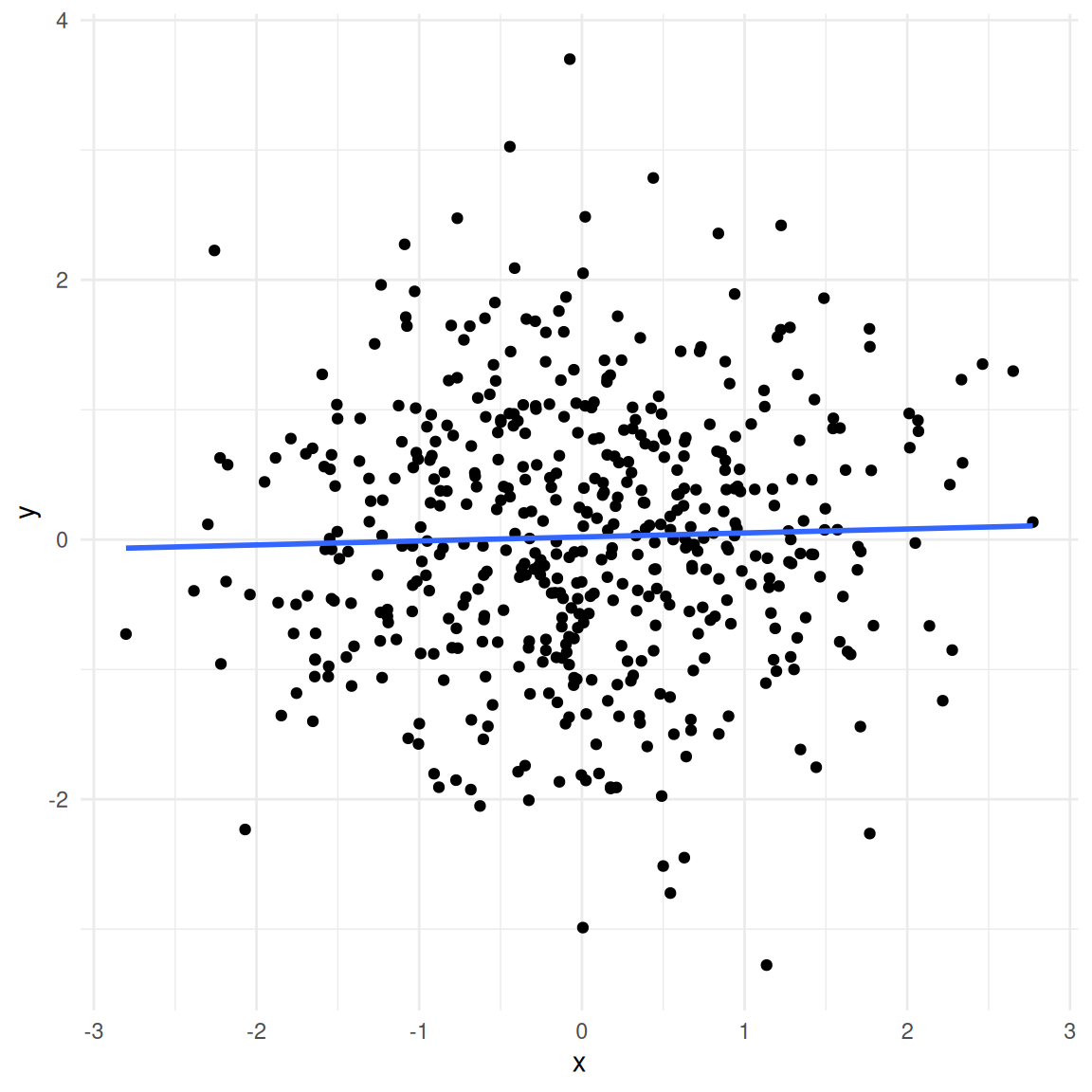

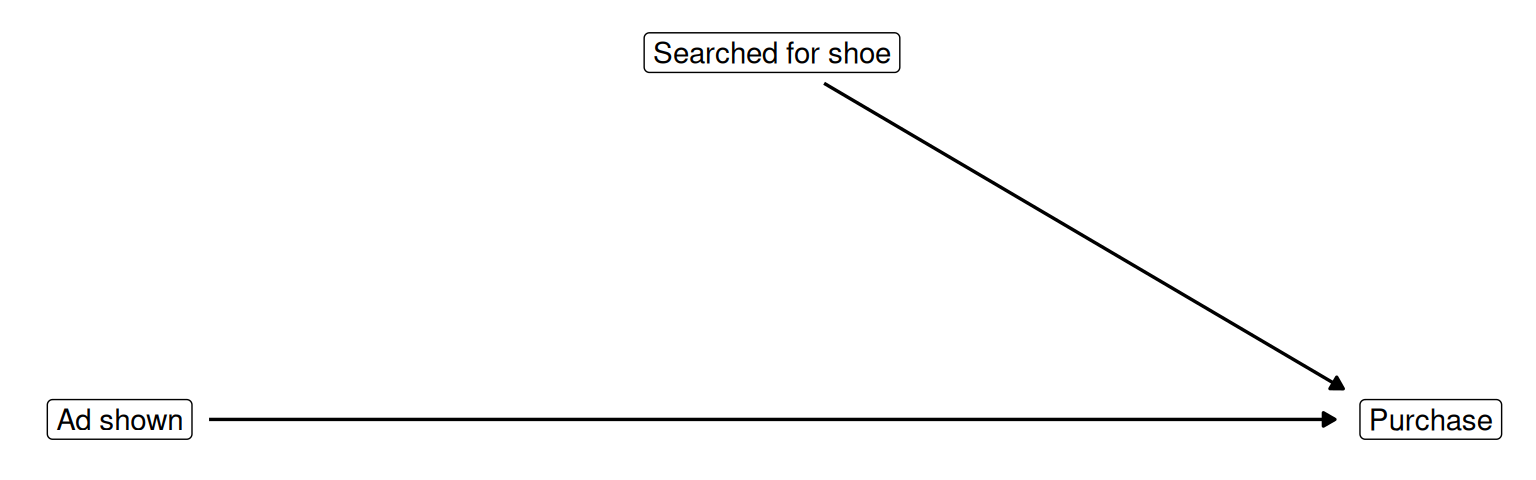

If the goal of your analysis is to explain the effect of one variable on an outcome (rather than just predicting an outcome), one main concern that you need to be aware of is related to omitted variables. This issue relates back to the choice of research design. If you are interested in causal inference and you did not obtain your data from a randomized trial, the issue of omitted variables bias is of great importance. If your goal is to make predictions, you don’t need to worry about this too much - in this case other potential problems such as overfitting (see below) should receive more attention.

What do we mean by “omitted variables”? If a variable that influences the outcome is left out of the model (i.e., it is “omitted”), a bias in other variables’ coefficients might be introduced. Specifically, the other coefficients will be biased if the omitted variable influences the outcome and the independent variable(s) in your model. Intuitively, the variables left in the model “pick up” the effect of the omitted variable to the degree that they are related. Let’s illustrate this with an example.

Consider the following data set containing information on the number of streams that a sample of artists receive on a streaming service in one month.

The data set contains three variables:

- popularity: The average popularity rating of an artist measured on a scale from 0-10

- playlists: The number of playlists the artist is listen on

- streams: The number of streams an artist generates during the observation month (in thousands)

Say, as a marketing manager we are interested in estimating the effect of the number of playlists on the number of streams. If we estimate a model to explain the number of streams as a function of only the number of playlists, the results would look as follows:

##

## Call:

## lm(formula = streams ~ playlists, data = streaming_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -394.7 -87.9 -5.6 92.9 334.5

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1248.065 15.814 78.9 <0.0000000000000002 ***

## playlists 3.032 0.198 15.3 <0.0000000000000002 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 135 on 170 degrees of freedom

## Multiple R-squared: 0.581, Adjusted R-squared: 0.578

## F-statistic: 235 on 1 and 170 DF, p-value: <0.0000000000000002As you can see, the results suggest that being listed on one more playlist leads to 3,032 more streams on average (recall that the dependent variable is given in thousands in this case, so we need multiply the coefficient by 1,000 to obtain the effect). Now let’s see what happens when we add the popularity of an artist as an additional predictor:

stream_model_2 <- lm(streams ~ playlists + popularity, data = streaming_data)

summary(stream_model_2)##

## Call:

## lm(formula = streams ~ playlists + popularity, data = streaming_data)

##

## Residuals:

## Min 1Q Median 3Q Max

## -278.94 -62.28 7.23 72.35 246.51

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1120.721 17.124 65.5 <0.0000000000000002 ***

## playlists 1.923 0.185 10.4 <0.0000000000000002 ***

## popularity 37.782 3.545 10.7 <0.0000000000000002 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 105 on 169 degrees of freedom

## Multiple R-squared: 0.749, Adjusted R-squared: 0.746

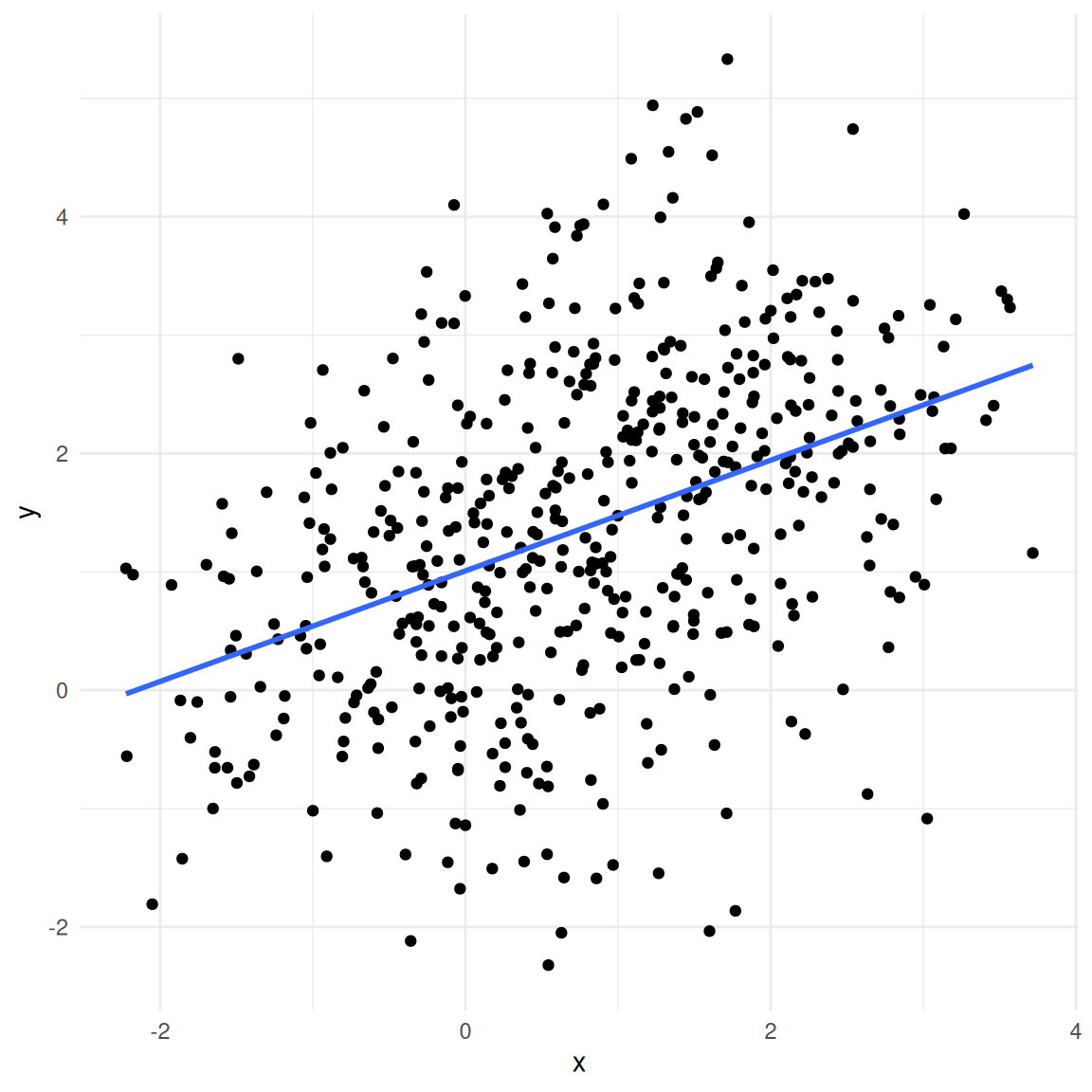

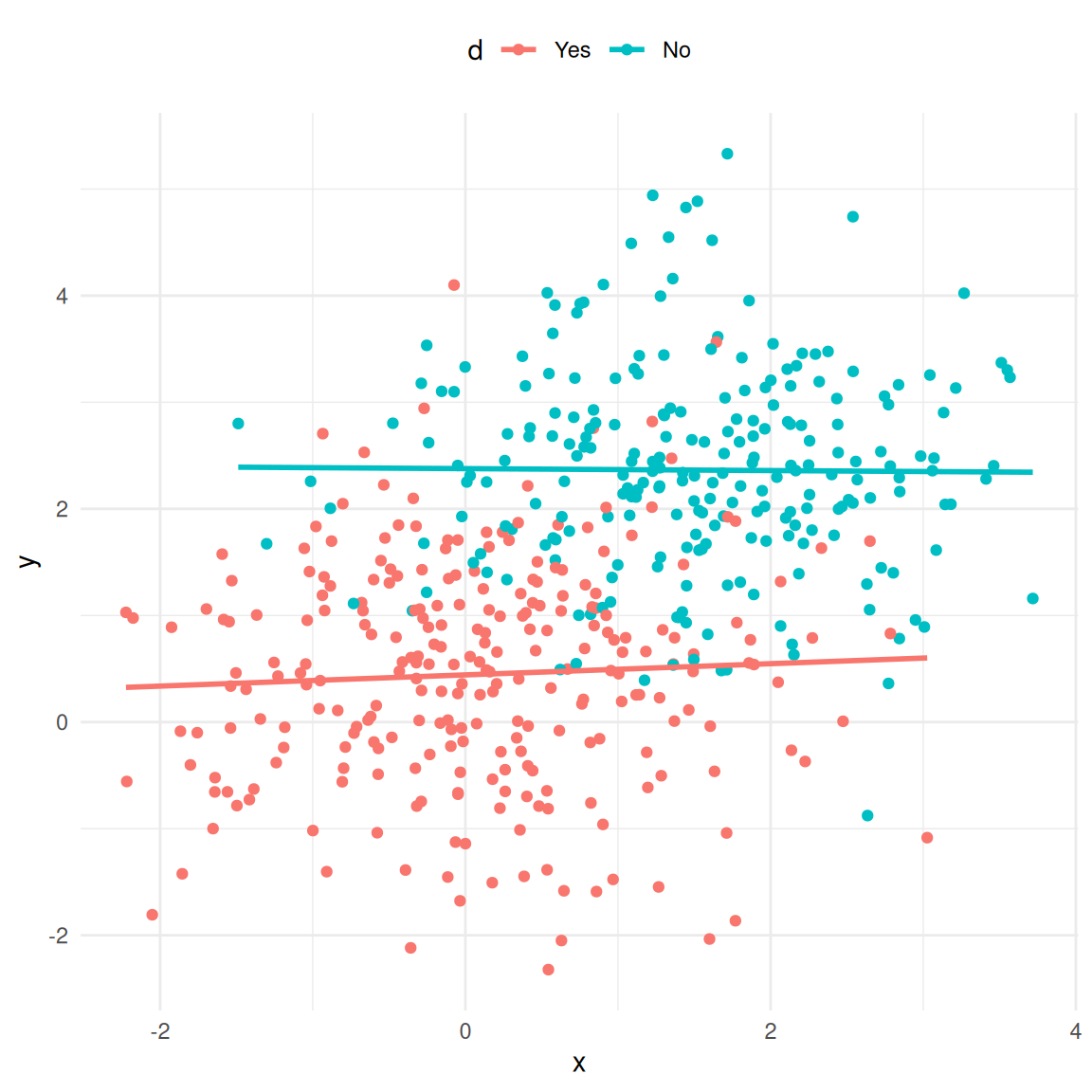

## F-statistic: 252 on 2 and 169 DF, p-value: <0.0000000000000002What happens to the coefficient of playlists? As you can see, the magnitude of the coefficient decreased substantially. Because the popularity of an artist influences both the number of playlists (more popular artists are listed on a larger number of playlists) and the number of streams (more popular artists receive more streams), the coefficient will be biased upwards. In this case, the popularity of an artists is said to be an unobserved confounder if it is not included in the model and the playlist variable is referred to as an endogenous predictor (i.e., the assumption of exogeneity is violated). As you could see, this unobserved confounder would lead us to overestimate the effect of playlists on the number of streams. It is therefore crucially important that you carefully consider what other factors could explain the dependent variable besides your main independent variable of interest. This is also the reason why it is much more difficult to estimate causal effects from observational data compared to randomized experiments, where you could, e.g., randomly assign artists to be included on playlists or not. But in real life, it is often not feasible to run field experiments, e.g., because we may not have control over which artists get included on a playlists and which artists don’t.

To summarize, if your goal is to identify a causal effect of one variable on another variable using observational (non-experimental) data, you need to carefully think about which potentially omitting variable may influence both the dependent and independent variable in your model. Unfortunately, there is no test that would tell you if you have indeed included all variables in your model so that you need to put forth arguments why you think that unobserved confounders are not a reason for concern in your analysis.

7.3.9 Overfitting

If the goal of your analysis is to predict an outcome, rather than explaining a causal effect of one variable on another variable, the issue of overfitting is a major concern. Overfitting basically means that your model is tuned so much to the specific data set that you have used to estimate the model that it won’t perform well when you would like to use the model to predict observations outside of your sample. So far, we have considered ‘in-sample’ statistics (e.g., R2) to judge the model fit. However, when the model building purpose is forecasting, we should test the predictive performance of the model on new data (i.e., ‘out-of-sample’ prediction). We can easily achieve this by splitting the sample in two parts:

- Training data set: used to estimate the model parameters

- Test data set (‘hold-out set’): predict values based training data

By inspecting how well the model based on the training data is able to predict the observations in the test data, we can judge the predictive ability of a model on new data. This is also referred to as the out-of-sample predictive accuracy. You can easily test the out-of-sample predictive accuracy of your model by splitting the data set in two parts - the training data and the test data. In the following code, we randomly 2/3 of the observations to estimate the model and retain the remaining 1/3 of observations to test how well we can predict the outcome based on our model.

# randomly split into training and test data:

set.seed(123)

n <- nrow(regression)

train <- sample(1:n,round(n*2/3))

test <- (1:n)[-train]Now we have created two data sets - the training data set and the test data set. Next, let’s estimate the model using the training data and inspect the results

# estimate linear model based on training data

multiple_train <- lm(sales ~ adspend + airplay + starpower, data = regression, subset=train)

summary(multiple_train) #summary of results##

## Call:

## lm(formula = sales ~ adspend + airplay + starpower, data = regression,

## subset = train)

##

## Residuals:

## Min 1Q Median 3Q Max

## -109.89 -30.21 -0.16 28.19 146.84

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -36.52425 20.15595 -1.81 0.0723 .

## adspend 0.09015 0.00868 10.38 <0.0000000000000002 ***

## airplay 3.49391 0.35649 9.80 <0.0000000000000002 ***

## starpower 11.22697 2.93189 3.83 0.0002 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 47 on 129 degrees of freedom

## Multiple R-squared: 0.7, Adjusted R-squared: 0.693

## F-statistic: 100 on 3 and 129 DF, p-value: <0.0000000000000002As you can see, the model results are similar to the results from the model from the beginning, which is not surprising since it is based on the same data with the only difference that we discarded 1/3 of the observations for validation purposes. In a next step, we can use the predict() function and the argument newdata to predict observations in the test data set based on our estimated model. To test the our-of-sample predictive accuracy of the model, we can then compute the squared correlation coefficient between the predicted and observed values, which will give us the out-of-sample model fit (i.e., the R2).